Externally documenting workflows matters for more than complying with governance requirements. It also helps ensure that best practices are used and makes it easier to explain data science methods to non-experts. Data science and machine learning platforms need tight integrations that describe completely what is going on in your workflow: not just what happens to your model, but exactly what happens during execution. For both compliance and best practices, businesses need to know what each step of the workflow does, where the data come from, how they move through the workflow, and how they are transformed.

The ability to capture full metadata promotes best practices and reuse, and it reduces institutional risk and liability.

Full capture of metadata for external documentation

For many compliance activities, you need to know exactly which libraries were used at execution time and which “non-controllable actions”, such as calls to external packages, took place, so that further investigation can be carried out if required. With so many organizations wanting to make the best use of their teams, you may also want to know whether standards and best practices are being followed and whether they are up to date, for example when shared components or shared workflows are used. Here at KNIME, we have now surfaced our metadata for external documentation and processing.

KNIME workflows have always been completely self-documenting. The internal representation of a workflow is a special XML format that defines every aspect of the workflow: the data that go in and out, the references to the preceding and following nodes, and the exact settings and transformations that occur within each node. Execution states are stored as well, along with whether a node is current or deprecated. Anyone who knows KNIME knows the power of a saved workflow. This means all the “metadata” is already there.

In the past, KNIME has used this metadata for a few popular capabilities, such as identifying shared components, flagging deprecated nodes in a workflow, and providing the workflow difference function, which generates an exact list of the differences between two workflows. Now all available metadata can be extracted and used.

In this blog article, we show how to use the Workflow Summary function to extract your workflow’s metadata, and then present two cases demonstrating how that metadata can be analyzed.

How to Use the Workflow Summary

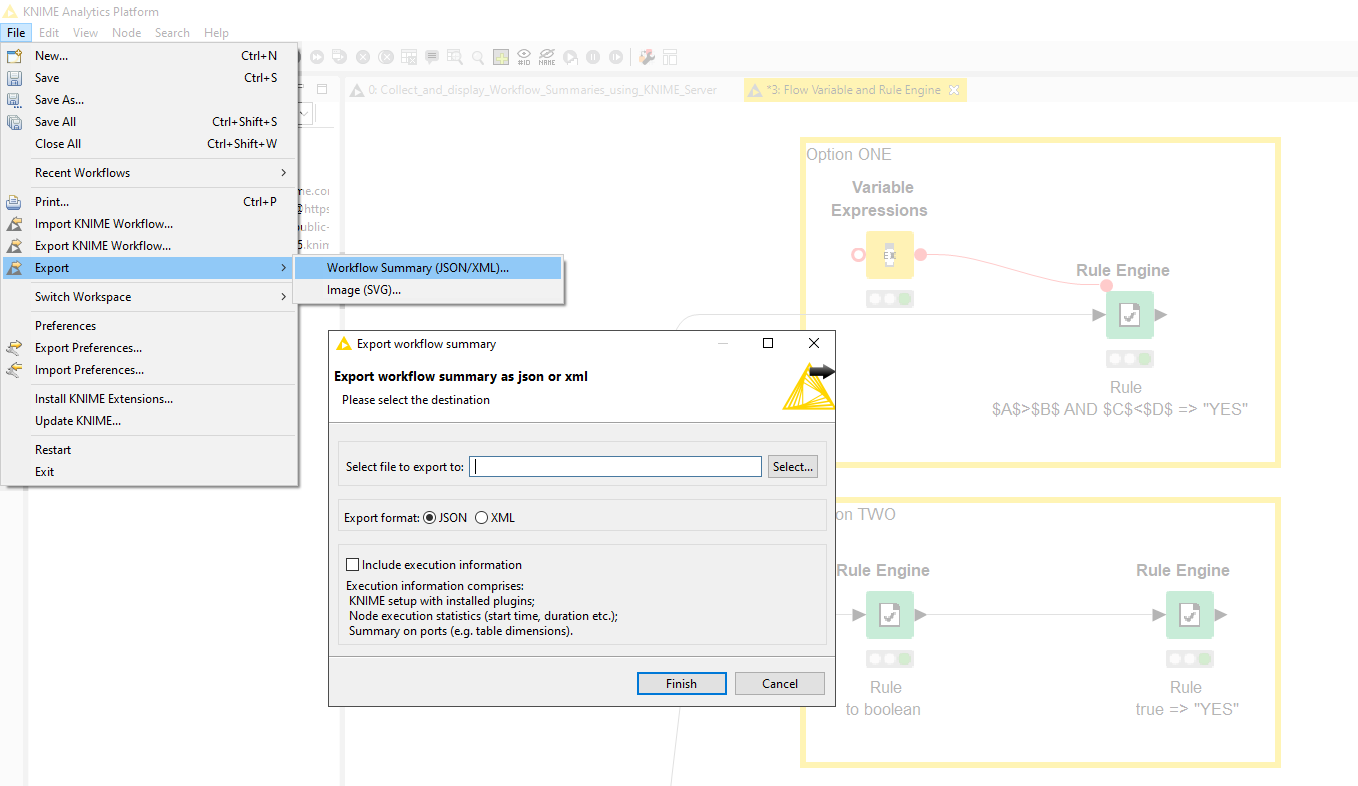

With KNIME Analytics Platform version 4.2, all metadata can now be extracted into an external XML or JSON file. You can do this manually from within a workflow by selecting File -> Export -> Export Workflow Summary.

You also have the option to include all execution, environment, and library information. The resulting XML or JSON file is then available for storing or further processing.

Fig. 1. Selecting “Workflow Summary” from the File -> Export menu in KNIME and saving the file as a JSON or XML file to the location of your choosing.
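Once a summary has been exported as JSON, it can be read with any JSON library. The following is a minimal Python sketch, not a description of the actual file layout: the key names `workflow`, `nodes`, `subWorkflow`, `name`, and `deprecated` in the sample are assumptions made for illustration, so check them against a file you have exported yourself. To stay independent of the exact nesting, the walker simply collects every object stored in a list under a key called `nodes`, at any depth.

```python
import json
from typing import Any, Iterator

# Hypothetical sample; the real export may use different keys and nesting.
SAMPLE_SUMMARY = json.loads("""
{
  "workflow": {
    "name": "Example",
    "nodes": [
      {"id": "1", "name": "CSV Reader", "deprecated": false},
      {"id": "2", "name": "Component", "deprecated": false,
       "subWorkflow": {"nodes": [
         {"id": "2:1", "name": "Row Filter", "deprecated": true}
       ]}}
    ]
  }
}
""")


def iter_nodes(obj: Any) -> Iterator[dict]:
    """Yield every dict found in a list stored under a "nodes" key, at any depth."""
    if isinstance(obj, dict):
        for key, value in obj.items():
            if key == "nodes" and isinstance(value, list):
                for node in value:
                    if isinstance(node, dict):
                        yield node
                        # Descend into the node to pick up nested workflows.
                        yield from iter_nodes(node)
            else:
                yield from iter_nodes(value)
    elif isinstance(obj, list):
        for item in obj:
            yield from iter_nodes(item)


def node_names(summary: Any) -> list:
    """Return the (assumed) "name" field of every node in document order."""
    return [node.get("name", "unknown") for node in iter_nodes(summary)]


if __name__ == "__main__":
    print(node_names(SAMPLE_SUMMARY))
```

For a real export, replace `SAMPLE_SUMMARY` with `json.load(open(path))` for the file you saved, and adjust the key names if they differ.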

Automating Metadata Mapping

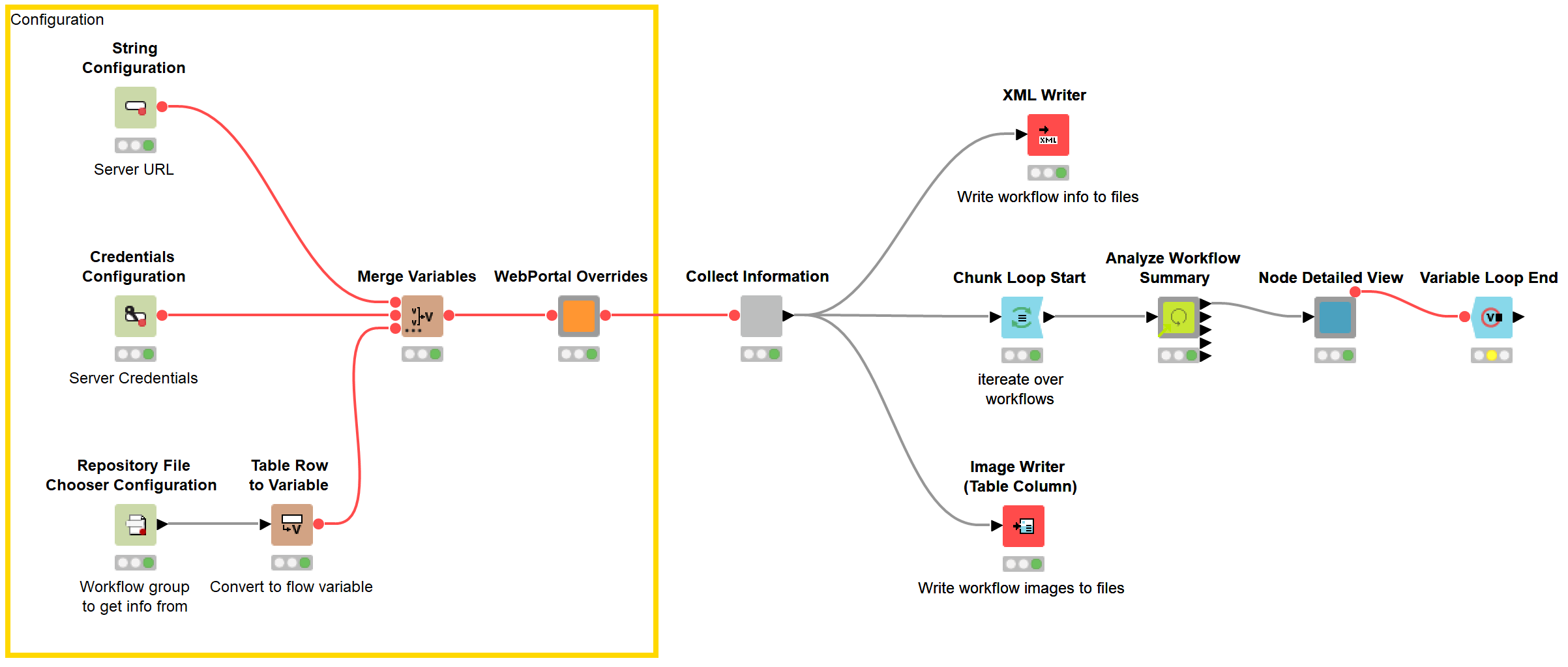

While the manual extraction capability is available to all KNIME users, the real value for an organization comes when the extraction is automated with KNIME Server. In this way, any number of workflows and components available through the KNIME Server can have their XML metadata (as well as an SVG image of the workflow) automatically extracted and archived.

Creating Reports

Collect Workflow Information from KNIME Server REST API

An example workflow on the KNIME Hub, Collect Workflow Information from KNIME Server REST API, uses KNIME’s REST API functionality to automatically extract and save all metadata, as well as an image of the workflow, for each workflow of interest. Once the metadata has been extracted, we can use KNIME to parse and interpret it in a variety of forms.

Fig. 2. Workflow for retrieving workflow summaries and images for reporting. When connected to a KNIME Server installation, this workflow can be configured to trigger regular reports.
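The same kind of retrieval could in principle be scripted outside KNIME. The Python sketch below only builds an authenticated GET request and does not send it. Everything specific in it is an assumption for illustration: the server URL, the `/repository/` path layout, the `:workflow-summary` suffix, the `format` query parameter, and the use of basic authentication. Consult the REST API documentation of your own KNIME Server for the actual endpoint before relying on any of it.

```python
import base64
import urllib.parse
import urllib.request


def build_summary_request(
    rest_root: str,
    workflow_path: str,
    user: str,
    password: str,
    suffix: str = ":workflow-summary",  # assumed endpoint suffix, verify on your server
    fmt: str = "JSON",
) -> urllib.request.Request:
    """Build (but do not send) a GET request for one workflow's summary."""
    quoted_path = urllib.parse.quote(workflow_path.strip("/"))
    query = urllib.parse.urlencode({"format": fmt})
    # Assumed URL layout: <rest root>/repository/<workflow path><suffix>?format=<fmt>
    url = f"{rest_root.rstrip('/')}/repository/{quoted_path}{suffix}?{query}"

    request = urllib.request.Request(url, method="GET")
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    request.add_header("Authorization", f"Basic {token}")
    accept = "application/json" if fmt.upper() == "JSON" else "application/xml"
    request.add_header("Accept", accept)
    return request


if __name__ == "__main__":
    # Hypothetical server and workflow path.
    req = build_summary_request(
        "https://knime.example.com/rest/v4", "/Team/My Flow", "user", "secret"
    )
    print(req.full_url)
```

Passing the returned object to `urllib.request.urlopen` would send the request. Looping over a list of workflow paths and writing each response to disk gives a simple archive of summaries.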

Workflow Summary Report

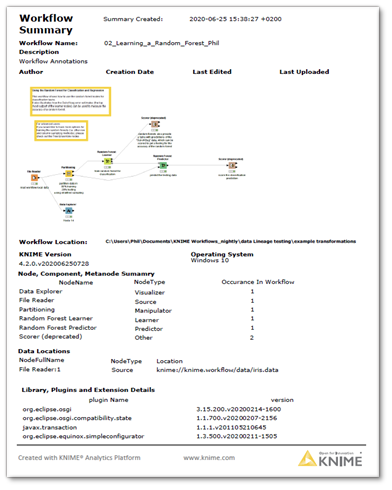

When satisfying compliance requirements, it is important to create not only an XML or JSON file that contains all the metadata but also reports that interpret that information. In this example, a workflow creates a summary report based on the extracted and stored XML information of the workflow selected for investigation.

This report shows a range of metadata available from the previously extracted XML. Highlights include a summary of workflow information. If the workflow has been stored on the KNIME Server, this also includes author, creation date and last edited date. In addition, all workflow annotations are included along with an SVG image of the workflow itself. Information about the KNIME version and operating system as well as a summary of the nodes is provided. A detailed summary of libraries, plugins and extensions is also provided.

Fig. 3. Workflow summary report created from metadata.
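To give a feel for what such a report boils down to, here is a small Python sketch that turns already-extracted metadata into a plain-text summary. It is not how the KNIME report is built. The inputs are assumed to have been pulled out of the summary file beforehand, and the field names (`name`, `knimeVersion`, `os`) are hypothetical.

```python
from collections import Counter


def render_report(workflow_name: str, environment: dict, nodes: list) -> str:
    """Render a short text report: workflow name, environment, node counts."""
    counts = Counter(node.get("name", "unknown") for node in nodes)

    lines = [f"Workflow: {workflow_name}"]
    # Environment entries are listed alphabetically by key.
    for key in sorted(environment):
        lines.append(f"{key}: {environment[key]}")
    lines.append(f"Nodes: {len(nodes)}")
    # One line per distinct node name, with the number of occurrences.
    for node_name, count in sorted(counts.items()):
        lines.append(f"  {node_name} x{count}")
    return "\n".join(lines)


if __name__ == "__main__":
    # Hypothetical values for illustration only.
    print(
        render_report(
            "Demo",
            {"os": "Linux", "knimeVersion": "4.2.0"},
            [{"name": "CSV Reader"}, {"name": "Row Filter"}, {"name": "Row Filter"}],
        )
    )
```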

Generate Workflow Metadata Overview

The example workflow, Generate Workflow Metadata Overview Report, available on the KNIME Hub, uses a KNIME Verified Component - the Analyze Workflow Summary component - to interpret the XML. This workflow can be extended to include a variety of other metadata information stored in the XML.

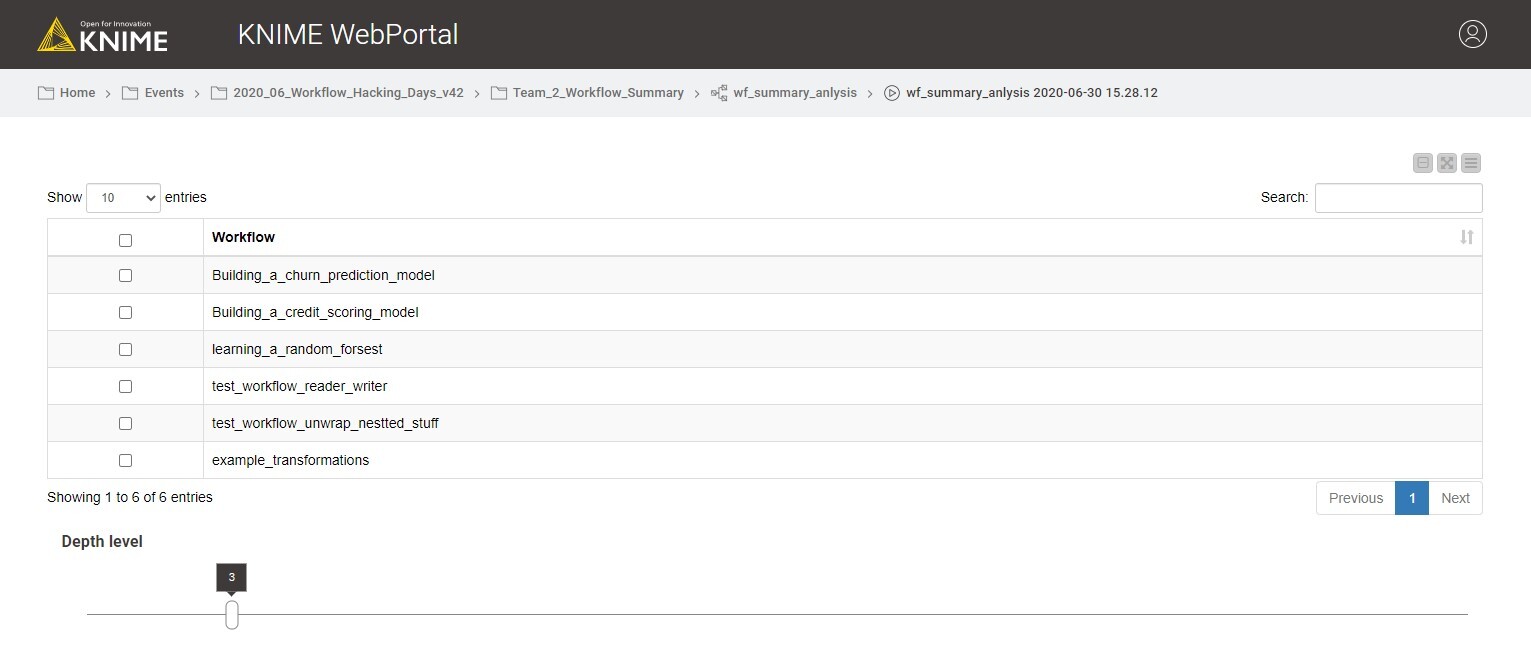

Interactive exploration and reporting

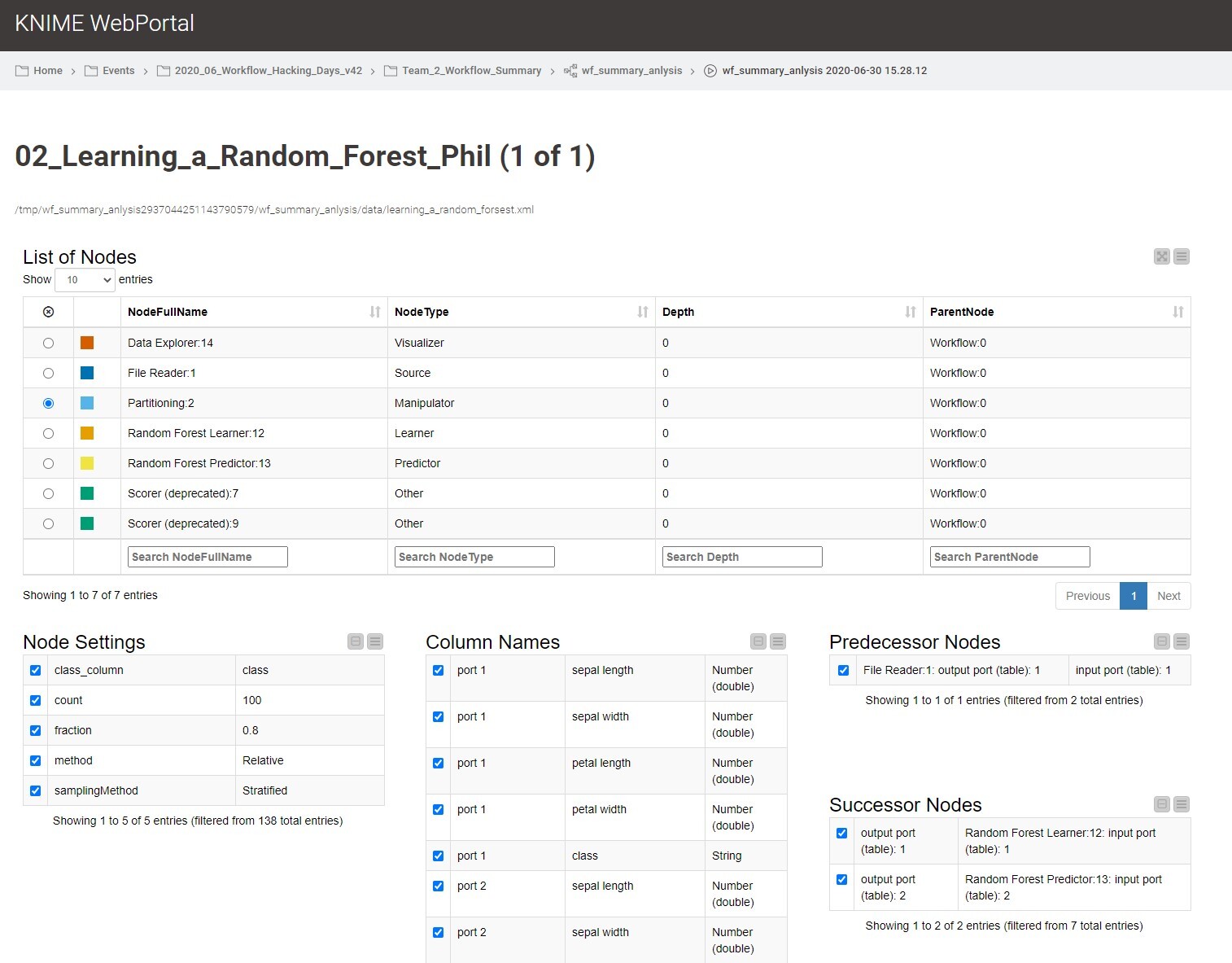

But we can do much more than create reports. In the next example, a workflow creates an interactive WebPortal page, where you select the workflow XML that you wish to investigate. The WebPortal page presents you with a summary of that workflow’s nodes. You can then select a node to see its settings, the columns provided to it, and an indication of any predecessor or successor nodes.

Fig. 4. Selecting the workflow on the WebPortal for which you want a report.

This Interactive Workflow Metadata Exploration workflow is also available for you to download from the KNIME Hub and try out for yourself. It uses the Analyze Workflow Summary component to interpret and present the XML. Note that although the example works on a local installation of KNIME Analytics Platform, its full interactive capabilities are best used as a KNIME WebPortal application on KNIME Server.

Fig. 5. Selecting the nodes for further investigation.
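The predecessor and successor information is also what makes lineage-style questions answerable. As a rough Python sketch, assume each node has already been reduced to an `id` and a list of successor ids (a hypothetical, simplified shape, not the layout of the summary file). Inverting the successor links gives the predecessors, and a breadth-first walk over them gives everything upstream of a chosen node.

```python
from collections import defaultdict, deque


def predecessors_map(nodes: list) -> dict:
    """Invert the successor links: node id -> set of direct predecessor ids."""
    preds = defaultdict(set)
    for node in nodes:
        for successor_id in node.get("successors", []):
            preds[successor_id].add(node["id"])
    return preds


def upstream(nodes: list, target_id: str) -> set:
    """Return the ids of all nodes that feed, directly or indirectly, into target_id."""
    preds = predecessors_map(nodes)
    seen = set()
    queue = deque([target_id])
    while queue:
        current = queue.popleft()
        for pred_id in preds.get(current, ()):
            if pred_id not in seen:
                seen.add(pred_id)
                queue.append(pred_id)
    return seen


# Hypothetical workflow: nodes 1 and 2 feed node 3, which feeds node 4; node 5 is isolated.
SAMPLE_NODES = [
    {"id": "1", "successors": ["3"]},
    {"id": "2", "successors": ["3"]},
    {"id": "3", "successors": ["4"]},
    {"id": "4", "successors": []},
    {"id": "5", "successors": []},
]

if __name__ == "__main__":
    print(sorted(upstream(SAMPLE_NODES, "4")))
```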

These examples are KNIME workflows that can be modified and extended to exploit the available metadata.

Satisfying Business Requirements

This short blog post has introduced a data science and machine learning platform integration that captures a company’s workflow metadata in full. We have shown how to store that metadata externally and then exploit it with sample KNIME workflows, which you can download from the KNIME Hub and use yourself.

The available metadata is comprehensive. It can be used to address topics such as data lineage, GDPR compliance, and model explainability, because reports can state explicitly which fields were used in a model. The examples here can be extended to answer questions such as:

- Do all production workflows use the required GDPR components?

- Are security protocols for accessing data being followed?

- Are best practices (components & workflows) being used?

- Are the latest versions of shared components being used?

- Where is code-first scripting (Python, R, etc.) involved, so that “manual coding” can occur?

- Which workflows are not using the most current version of nodes?

- What nodes, components, and data sources are most used across the organization?

- What is the documented difference between versions of a workflow?
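As a starting point for questions like these, the Python sketch below audits several workflows at once, flagging those that contain deprecated nodes or scripting nodes. It assumes the node lists have already been extracted from each workflow’s summary. The `name` and `deprecated` fields and the list of scripting hints are hypothetical and would need to be adapted to your own metadata and naming conventions.

```python
# Hypothetical substrings that mark a node as "code-first"; adjust to your own policy.
SCRIPTING_HINTS = ("Python", "R Snippet", "Java Snippet")


def audit(workflows: dict) -> dict:
    """Map workflow name -> findings, for workflows with at least one finding.

    `workflows` maps a workflow name to its list of node dicts.
    """
    findings = {}
    for workflow_name, nodes in workflows.items():
        names = [node.get("name", "unknown") for node in nodes]
        deprecated = sorted(
            {node.get("name", "unknown") for node in nodes if node.get("deprecated")}
        )
        code_first = sorted(
            {name for name in names if any(hint in name for hint in SCRIPTING_HINTS)}
        )
        if deprecated or code_first:
            findings[workflow_name] = {
                "deprecated": deprecated,
                "code_first": code_first,
            }
    return findings


# Hypothetical input for illustration only.
SAMPLE_WORKFLOWS = {
    "Churn Scoring": [
        {"name": "CSV Reader", "deprecated": False},
        {"name": "Python Script", "deprecated": False},
    ],
    "Legacy ETL": [{"name": "File Reader", "deprecated": True}],
    "Clean": [{"name": "Row Filter", "deprecated": False}],
}

if __name__ == "__main__":
    for name, result in audit(SAMPLE_WORKFLOWS).items():
        print(name, result)
```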

Even as this article goes public, KNIME is creating additional examples, and we expect that over time the KNIME Community will publish its own workflows on the KNIME Hub that exploit the available metadata. Keep up to date by searching for the keyword "metadata" on the KNIME Hub to see what other workflow examples are available!