KNIME Team plan

Schedule your KNIME workflows to run anytime, collaborate in a private space with your team, and deploy data apps with flexible pay-as-you-go pricing.

Plans start at €99 per month, including 3 seats and 1,000 workflow execution credits.

KNIME is consistently rated among the top analytics tools for enterprises and small teams.

Start automating your analyses and share them as interactive data apps with the KNIME Team plan

- Collaborate on workflows in a private online space with your team

- Put your data work on autopilot by setting up workflow automations on a schedule or ad hoc

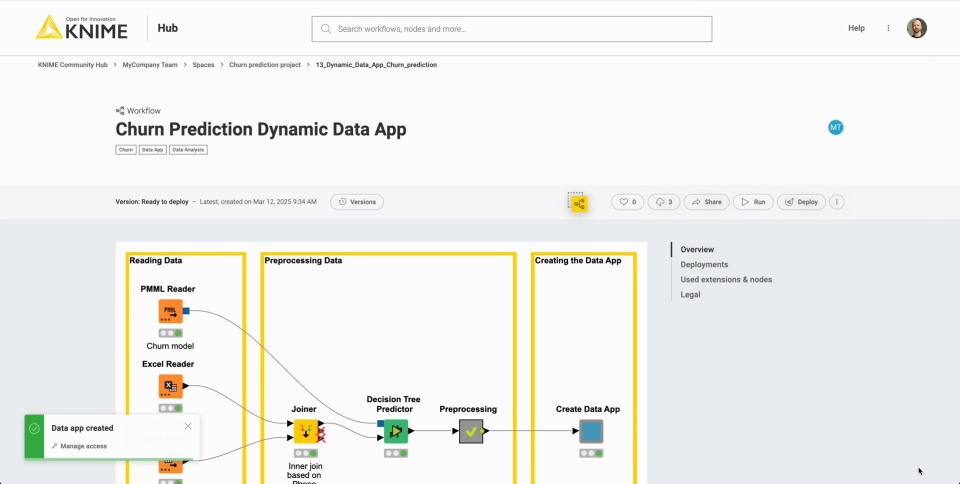

- Distribute your workflows as data apps to help end users access insights without installing or understanding KNIME Analytics Platform

- Benefit from version control to track changes and maintain workflow integrity

- Get started with a 1-month free trial and 1,000 execution credits

- Benefit from secure, centralized, and encrypted handling of credentials for smoother automation and deployment

Run KNIME workflows of any complexity ad-hoc or on a schedule and deliver them as data apps to anyone in the organization with KNIME’s SaaS offering. No complex IT setup – get started in a few simple steps.

Subscribe, then create a space.

Run your first workflow

Automate your workflow to run on a schedule

Share your workflow as an interactive data app

Start automating workflows and sharing insights via data apps with 1 free month and 1,000 execution credits included.

• Common use cases: Automated data integration, automated data cleaning, automated reporting, and more

• Ideal for data from cloud-based storage like Google Cloud, Snowflake, Amazon S3, Azure, and more

Preparation of reports previously consumed hundreds of hours each quarter. Now, the time required to prepare a report with the combination of KNIME and Power BI—which once took an entire workday—has been reduced to just a fraction of that time. This shift has freed up time for more productive activities.

Frequently asked questions

KNIME is an open-source platform for creating visual workflows, enabling data manipulation, analysis, machine learning and more.

Learn how to build workflows in our open-source KNIME Analytics Platform: see how it works, or download KNIME Analytics Platform.

You can then use the Team plan to automate the workflows you build.

The Team plan allows you to create a team on KNIME Hub to collaborate on a project with your colleagues in private spaces, accessible only to the team members. Additionally, you can add execution capabilities to your team, enabling workflow execution and automation.

The total amount you pay is the monthly cost of the team subscription and the cost for execution, based on consumption. You will pay for your team subscription once every month, at the beginning of your billing cycle. On top of the billed subscription you will be charged for execution only based on consumption in the past billing cycle. You will be charged when the execution context is up and running. We offer various execution context sizes, each with different execution power, which will consume different credit amounts per minute. Price per credit is €0.025, billed per started minute.

Available configurations, tailored to different processing needs, include: Small (2 vCores - 8 GB RAM) consuming 2 credits per minute, Medium (4 vCores - 16 GB RAM) consuming 4 credits per minute, and Large (8 vCores - 32 GB RAM) consuming 8 credits per minute.
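As a rough illustration of the pricing above, the sketch below estimates the execution charge for a single period in which the execution context is active. It uses only the figures stated here (€0.025 per credit; 2, 4, or 8 credits per minute). The function name `execution_cost` is hypothetical, and reading "billed per started minute" as rounding the active time up to the next whole minute is an assumption, not a confirmed billing rule.

```python
import math
from decimal import Decimal

# Figures taken from the pricing text above.
PRICE_PER_CREDIT_EUR = Decimal("0.025")
CREDITS_PER_MINUTE = {"small": 2, "medium": 4, "large": 8}


def execution_cost(size: str, active_seconds: float) -> tuple[int, Decimal]:
    """Return (credits consumed, cost in EUR) for one active period.

    Assumption: a started minute is billed in full, so the active
    time is rounded up to the next whole minute.
    """
    started_minutes = math.ceil(active_seconds / 60)
    credits = started_minutes * CREDITS_PER_MINUTE[size]
    return credits, credits * PRICE_PER_CREDIT_EUR


# Example: a Medium context that is active for 90 minutes and 30 seconds.
credits, cost = execution_cost("medium", 90 * 60 + 30)
print(f"{credits} credits, EUR {cost:.2f}")
```

Under these assumptions, the example counts 91 started minutes at 4 credits each. Any credits included in the subscription are not modeled here.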

Yes, you can run multiple workflows in parallel on the same execution context, and doing so does not incur additional charges. Execution performance may vary depending on the execution power of the execution context and the number and type of workflows executing in parallel.

Total execution consumption is based on the time the execution context is active. Its active time depends on multiple factors, including workflow execution time and minimal additional time for context stopping. The execution context starts automatically when a workflow is executed, either ad-hoc or through a schedule. Once all running workflows are finished, the context stops automatically.

You can run multiple workflows simultaneously, paying only for the time the execution context is up and running. We also provide different execution context configurations to optimize both cost and performance.
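To see why parallel workflows do not multiply the bill, the following sketch computes how long an execution context would be active for a set of workflow runs. It is a simplified model, not KNIME's actual metering: the function name `context_active_seconds` is hypothetical, each run is given as a `(start, end)` pair in seconds, overlapping runs are assumed to share one active period, and the small extra time for stopping the context is ignored.

```python
def context_active_seconds(runs: list[tuple[float, float]]) -> float:
    """Total time the context is active, merging overlapping runs.

    Assumption: the context starts with the first run of a group of
    overlapping runs and stops when the last run in that group ends.
    """
    total = 0.0
    period_start = period_end = None
    for start, end in sorted(runs):
        if period_end is None or start > period_end:
            # Previous active period (if any) is over; close it out.
            if period_end is not None:
                total += period_end - period_start
            period_start, period_end = start, end
        else:
            # Run overlaps the current active period; extend it.
            period_end = max(period_end, end)
    if period_end is not None:
        total += period_end - period_start
    return total


# Two overlapping runs plus one later run (times in seconds).
runs = [(0, 600), (300, 900), (3600, 3900)]
print(context_active_seconds(runs))
```

In this model the two overlapping runs count as a single 900-second active period rather than two separate ones, which is the effect the paragraph above describes.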

Yes, a maximum execution time can be configured for each workflow schedule, allowing flexibility in managing execution duration. Find out more about how to schedule the execution of your workflow and set its maximum execution time in the KNIME Community Hub Guide.

Once you upload your workflow, you can use an execution context to run it. Workflows can be executed ad hoc, as a data app, or scheduled for automatic execution at specified intervals.

All the extensions developed by KNIME are available to run workflows on KNIME Community Hub. Find more information in the KNIME Community Hub Guide.

Disk space stores team workflows and data files, with a recommended starting point of 30 GB, expandable as needed (at extra cost).

You can download and upload your data directly from and to the team’s spaces. Additionally, you can connect to any data sources accessible by the KNIME Community Hub, for example via our Google or Azure cloud connector nodes.

Yes, the Python integration is available. The only Python environment available by default on the KNIME Community Hub is the bundled Python environment of the Python integration. If you need a custom environment, use the Conda Environment Propagation node to make sure it gets set up when your workflow runs. Please note that Python environments configured by the Conda Environment Propagation node will be created each time the execution context starts up.