Data science is being used successfully in a German automotive company, where machine learning solutions are applied to enable data-driven planning and automated data processing for time and resource savings so that employees can concentrate on activities with greater added value.

We are very glad we report on our experience of applying data science and machine learning in the automotive industry. We work in an automotive company that produces car lock systems. Its headquarters are near to Düsseldorf, Germany; a new plant was established in the Czech Republic in 1992, including its own services, finance, IT, research and development, sales etc.

We started building data science within our roles in the IT team in 2018. Our first tasks focused on applying machine learning. Let’s look at the use cases and techniques.

Key takeaways

- Learn how machine learning is used in an automotive company to predict operator capacity in Production and to predict VAT codes in Finance.

- Learn how automation of data processing is used to save automotive company resources and enable more focus on activities with greater added value

Production: Predict operator capacity with machine learning

The former CEO of the Czech plant needed to solve capacity issues. The company had a problem with capacity planning: Sometimes there weren’t enough operators and sometimes managers realized they had too many in the headcount and had to send them home.

This was because planning of the approx. 1500 operators at the Czech plant was done manually, based on manager experience. The CEO decided he needed to be able to make reasonable decisions about operator capacity based on data.

ML model predicts operator capacity with 96% accuracy

After a few meetings with people from Production, we identified the features that influence the number of operators, for instance net sales, down-times, sickness, employee turnover rate. We took those features and numbers of operators for each month going back over a three-year period.

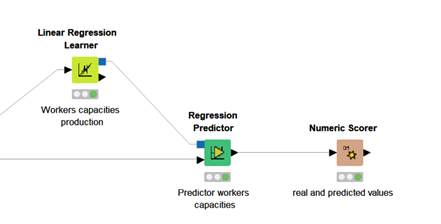

We used KNIME Analytics Platform for our data science process and applied multiple linear regression to learn a new model to predict the number of operators in production. The predictor tested our model and we got a result of 92% of R2, which indicates high accuracy.

To validate the predictions, we already had four months with the real numbers of operators in the new year plus planned values of net sales and a number of other features. We applied our learned model and were able to report an accuracy of over 96%!

This data science solution now enables the company to plan the operator capacity effectively. Planning and decision making are based on data and ensure there will be no big gaps between the actual and required number of operators.

This data science solution now enables the company to plan the operator capacity effectively. Planning and decision making are based on data and ensure there will be no big gaps between the actual and required number of operators.

Finance: Predict VAT codes and avoid unnecessary costs

In the Automotive industry, orders are generated once for each product, which can then be manufactured over several years. This one-time order generation is based on the parameters of the country of produced parts, country of parts received, countries of different stocks and logistic centers on its logistic delivery way, and much more.

A VAT code is assigned to the order automatically. This code indicates the value of the VAT in %. However, over time, parameters (countries and others) can change, but the VAT code in the system is not updated accordingly.

This means that in some cases VAT was not correct and the amount of VAT paid to institutions were sometimes incorrect too. Ultimately causing penalties to be paid. Let’s now look at how applying data science can make this process more accurate.

ML model predicts VAT codes with 90% accuracy

Before our data science solution, one accountant spent three days each month to check the VAT codes manually and correct them if necessary. To save this accountant’s time and also avoid paying penalties we applied a machine learning model to predict the VAT code every month based on learned data from one year ago (there were hundred thousand observations). Our solution was applied to not only predict VAT codes but also to compare the real VAT code with the predicted one.

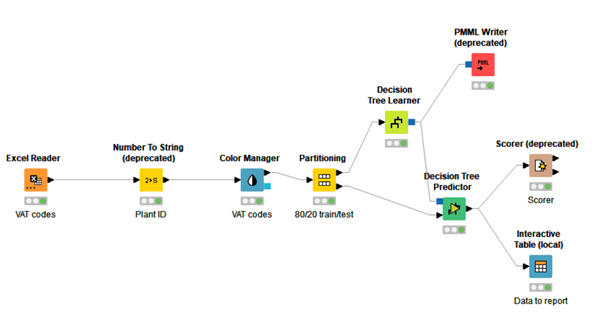

We decided to model our machine learning algorithm using the decision tree for classification problems.

The accuracy of this model was initially around 80%. In the meantime, we discovered more than thousand observations in the historical data with incorrect VAT codes that had never been corrected. We corrected these VAT codes manually in the input table and ultimately achieved an accuracy of 90%!

Now, this workflow is run every month with new data to predict VAT codes based on the orders parameters and compare the real VAT code set up in the system with the predicted one and correct if there is a difference.

This data science solution enables the company to save time and avoid problems with the finance institutions and paying penalties for incorrect amounts of VAT paid.

This data science solution enables the company to save time and avoid problems with the finance institutions and paying penalties for incorrect amounts of VAT paid.

Automate Data Processing to Save Resources

KNIME Analytics Platform is an amazing tool to analyze data and create machine learning techniques to predict circumstances, categorize data, visualize results and much more. It is also very useful for automation in data analysis!

Our experience shows that an automatic workflow for data manipulation can save considerable resources of companies in the automotive industry, which they can then use in activities with greater added value.

Example 1: Automatic "Covid reporting" for state aid models and HR

The COVID-19 pandemic has brought new challenges, and data processing was no exception. Spring 2020 meant for most companies a change in the principle of work, reduced working hours, and state support. It was the area of state aid that brought the need to report new types of working units in order to calculate the state support for industry.

The HR department prepared special tables where managers had to report the presence of employees under various state aid models on a weekly basis. The tables were prepared in a hurry: no one was considering standardization. As a result, in the first week, 40 tables with many thousands of rows met at the HR department.

Two colleagues processed these tables for 8 hours. Standardization, merging, corrections, and modifications of tables into the final report cost the company 16 hours of qualified work carried out by HR experts.

Due to the fact that the whole process of "Covid reporting" was subsequently going to take several weeks, the HR department asked IT specialists for help. After a short analysis, a solution was designed using KNIME Analytics Platform.

Report processing time cut down from 16 hours to 45 minutes

It took only six hours to create a workflow prototype that standardized and merged data from Excel tables, handled data exceptions and errors, and linked them to two control tables from the attendance database. A further two hours were needed to debug and validate the results. The final workflow speed test showed a total report processing time of approx. 45 minutes.

The workflow was then used for six months, 4-6 times per month. With the increase in experience, we managed to optimize the workflow and reduced the run-time of our automatic workflow down to 20 minutes despite a simultaneous weekly increase in the volume of processed data.

The strength of KNIME Analytics Platform in the field of data processing automation helped the company save 40-50 man-days in 6 months. This is a fantastic result.

The strength of KNIME Analytics Platform in the field of data processing automation helped the company save 40-50 man-days in 6 months. This is a fantastic result.

Example 2: Data processing from SAP

In the automotive field, SAP is a commonly used enterprise ERP system. It has extensive reporting and automation capabilities. However, this does not mean that data from SAP is not regularly downloaded, modified, combined with other data (from suppliers, customers, authorities, etc.) and evaluated.

This is usually done using MS Excel. The employee runs a report in SAP, filters the required data, and saves it in an MS Excel Sheet. This is where manual data operations and the required analyses are performed. The possibility of a direct data upload from SAP ERP is very limited.

KNIME Analytics Platform helps here too. The platform offers several ways to use data from SAP ERP in automation workflows.

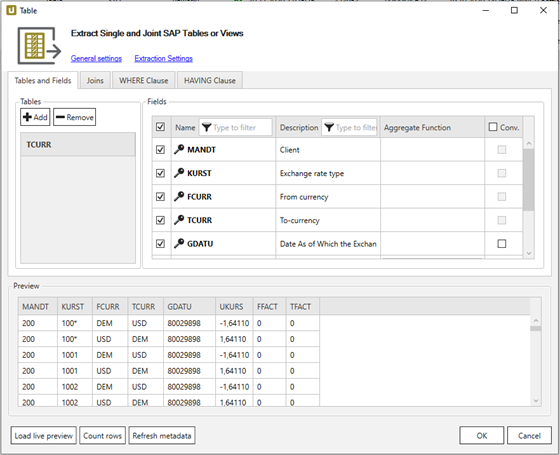

Option 1: Theobald Xtract Universal

As of KNIME 4.2, it is possible to use the SAP Reader (Theobald) node, which works with a great solution from Theobald Software. In the Xtract Universal console, you prepare a join to the required tables, and then use that join in the SAP Reader (Theobald) node. The node returns the required data and you can work with it within your automation workflows.

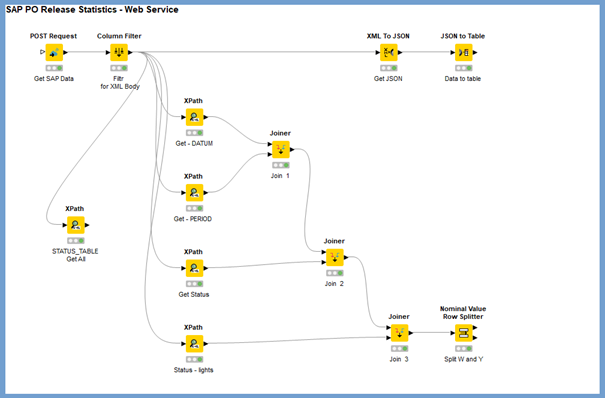

Option 2: Extract data from SAP using a Web Service

This option is based on a SAP Web Service. In principle, you prepare an RFC function in SAP that you use to create a Web Service Provider. Then you use the POST Request node in the KNIME workflow, setting the communication parameters for the SAP Web Service. A properly configured node calls SAP, requests data, and returns it to the KNIME workflow in XML format. We then modify the XML message into a table format using the XPath node. We can also perform XML conversion to JSON and then further process it into a table.

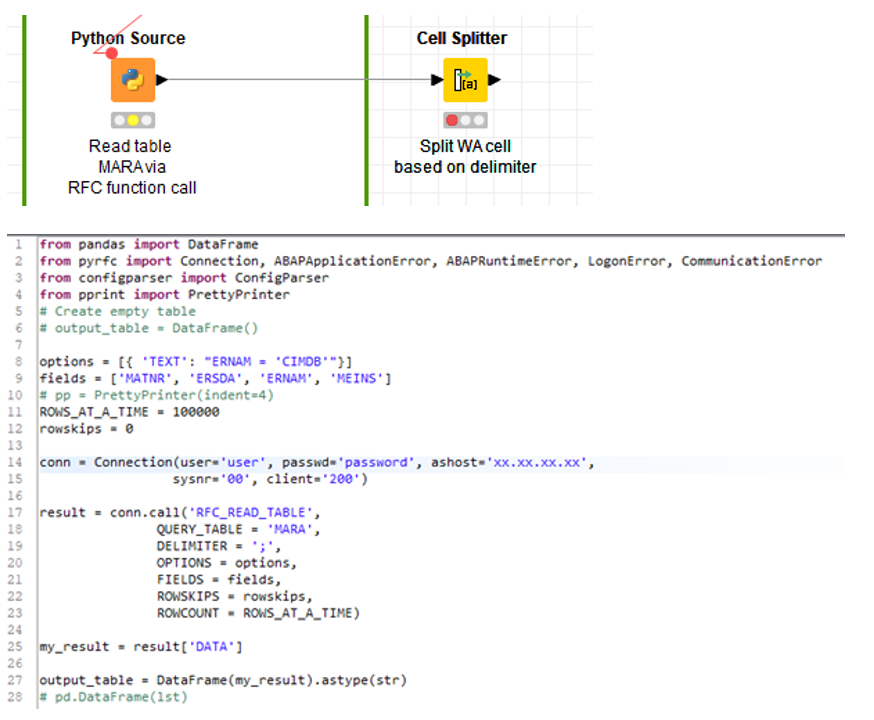

Option 3: Extract data from SAP using RFC functions called by Python Source node

This solution requires a local installation of the SAP NW SDK and SAP Logon, as well as the installation of the KNIME Python Integration extension. The effort required to install these tools really pays off. Using Python scripts, you can use any RFC function from SAP and use it to retrieve data from SAP for your automation workflows.

Machine Learning and Automated Data Processing for Greater Added Value

Machine learning and automation of data processing gives companies in the automotive industry not only a competitive advantage and significant cost savings but also allows people to focus on interesting tasks with greater added value.

Machine learning solutions as well as also repetitive tasks, such as downloading, merging, standardizing, classifying, evaluating, and correcting data, can be handled efficiently by automated tools such as KNIME Analytics Platform.

Download the Example workflows from the KNIME Hub

You can try out two of the workflows we created yourself. They are publicly available on the KNIME Hub for you to download, try out, and adapt to your own requirements. Note that sensitive information is replaced in the workflows with "xxx" and we provide explanation in the workflow of how to set up the prerequisites.