When adopting machine learning, after the model is trained and validated, businesses often need answers to several questions. How is the model making its predictions? Can we trust it? Is this model making the same decision a human would if given the same information? These questions are not just important for the data scientists who trained the model, but also for the decision makers who ultimately have responsibility for integrating the model’s predictions into their processes.

Additionally, new regulations such as the European Union’s Artificial Intelligence Act are being drafted. These standards aim to hold organizations accountable for AI applications that negatively affect society and individuals. AI and machine learning already affect many lives. Bank loan approvals and decisions driven by targeted social media campaigns are a few examples of where AI could improve the user experience, provided we properly test the models with explainable AI (XAI) and domain expertise.

When training simple models (for example, a logistic regression model), answering such questions can be trivial. But when a more performant model is necessary, such as a neural network, XAI techniques can give approximate answers for both the whole model and single predictions.
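To see why a simple model is easy to explain, here is a minimal Python sketch of a logistic regression read directly from its weights. The coefficient values and the feature names `income` and `debt_ratio` are made up for illustration and do not come from any real model or KNIME workflow.

```python
import math

# Hypothetical weights of an already-trained logistic regression
# (illustrative values only, not taken from a real model).
INTERCEPT = -1.5
COEFFICIENTS = {"income": 0.8, "debt_ratio": -1.2}


def predict_proba(row):
    """Return P(y = 1) for one row given as a feature -> value dict."""
    z = INTERCEPT + sum(COEFFICIENTS[name] * row[name] for name in COEFFICIENTS)
    return 1.0 / (1.0 + math.exp(-z))


def odds_ratios():
    """Global view: exp(weight) is the factor by which the odds change
    when the feature increases by one unit, all else being equal."""
    return {name: math.exp(weight) for name, weight in COEFFICIENTS.items()}


def contributions(row):
    """Local view: additive contribution of each feature to the log-odds."""
    return {name: COEFFICIENTS[name] * row[name] for name in COEFFICIENTS}


applicant = {"income": 2.0, "debt_ratio": 1.0}
print(predict_proba(applicant))
print(odds_ratios())
print(contributions(applicant))
```

The odds ratios describe the whole model, while the per-row contributions describe one prediction. A neural network offers no such direct reading of its weights, which is where XAI techniques come in.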

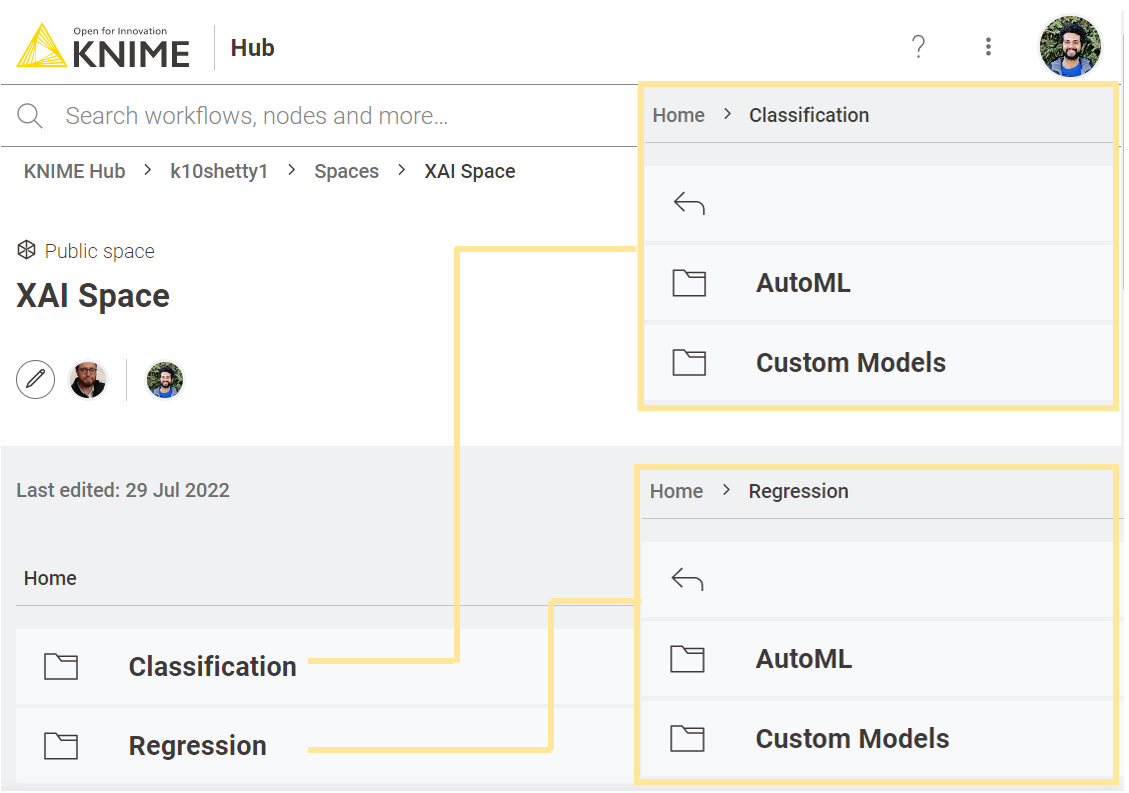

KNIME can provide you with no-code XAI techniques to explain your machine learning model. We have released an XAI space on the KNIME Hub dedicated to example workflows with all the available XAI techniques for both ML regression and classification tasks.

Explainable AI nodes and components

These XAI techniques are available via drag and drop in KNIME Analytics Platform through the following features:

- KNIME Machine Learning Interpretability Extension offers a set of nodes that work on their own or in combination with components to perform the XAI techniques you select. We cover here: LIME, Partial Dependence, SHAP, and Shapley Values.

- Model Interpretability Verified Components offers a set of components that can either work with the above extension or be applied individually. These components often accept any input model that’s trained in KNIME and captured with Integrated Deployment. They also offer built-in visualizations to explore the XAI results. Read an overview at “How Banks Can Use Explainable AI (XAI) For More Transparent Credit Scoring.”
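For readers curious about what Shapley values, one of the techniques listed above, actually compute, here is a minimal Python sketch. It is not the KNIME implementation. It assumes a hypothetical three-feature `model` function and treats a feature as "absent" by replacing it with a fixed baseline value, which is one common simplification. The exact computation below grows exponentially with the number of features, so practical tools typically approximate it by sampling.

```python
from itertools import combinations
from math import factorial


def model(x):
    """Hypothetical black-box model with an interaction between x[1] and x[2]."""
    return 2.0 * x[0] + x[1] * x[2]


def shapley_values(predict, instance, baseline):
    """Exact Shapley values of one prediction relative to a baseline row."""
    n = len(instance)
    features = range(n)

    def value(coalition):
        # Features in the coalition keep the instance value,
        # all other features are set to the baseline value.
        x = [instance[i] if i in coalition else baseline[i] for i in features]
        return predict(x)

    phi = []
    for i in features:
        others = [j for j in features if j != i]
        total = 0.0
        for size in range(n):
            # Shapley weight for coalitions of this size that exclude feature i.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                without_i = set(subset)
                total += weight * (value(without_i | {i}) - value(without_i))
        phi.append(total)
    return phi


instance = [1.0, 2.0, 3.0]   # the row to explain (illustrative values)
baseline = [0.0, 0.0, 0.0]   # reference row standing in for "feature absent"
phi = shapley_values(model, instance, baseline)
print(phi)
```

The contributions always add up to the difference between the prediction for the instance and the prediction for the baseline, and the interaction term is split equally between the two features involved.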

In the new space, we showcase how to use the various KNIME components and nodes designed for model interpretability. You can find examples in this XAI Space, divided into two primary groups based on the ML task:

- Classification - supervised ML algorithms with a categorical (string) target value

- Regression - supervised ML algorithms with a numerical or continuous target value

Then, based on the type of training, there are two further subcategories:

- Custom Models - An ML model is trained with a Learner node and applied with a Predictor node. In some examples, the Predictor node is captured with Integrated Deployment and transferred to the XAI component.

- AutoML - AutoML Classification and Regression components are used, which select the best model that fits the data.

By adopting these components and nodes together with their visualizations, you can easily see how your model generates predictions and which features drive them. Furthermore, you can examine how the prediction changes as a result of modifications to any of your input features. On top of that, we offer fairness metrics to audit the model for responsible AI principles.

To give you a taste of what topics are covered in this XAI space, let’s see three examples.

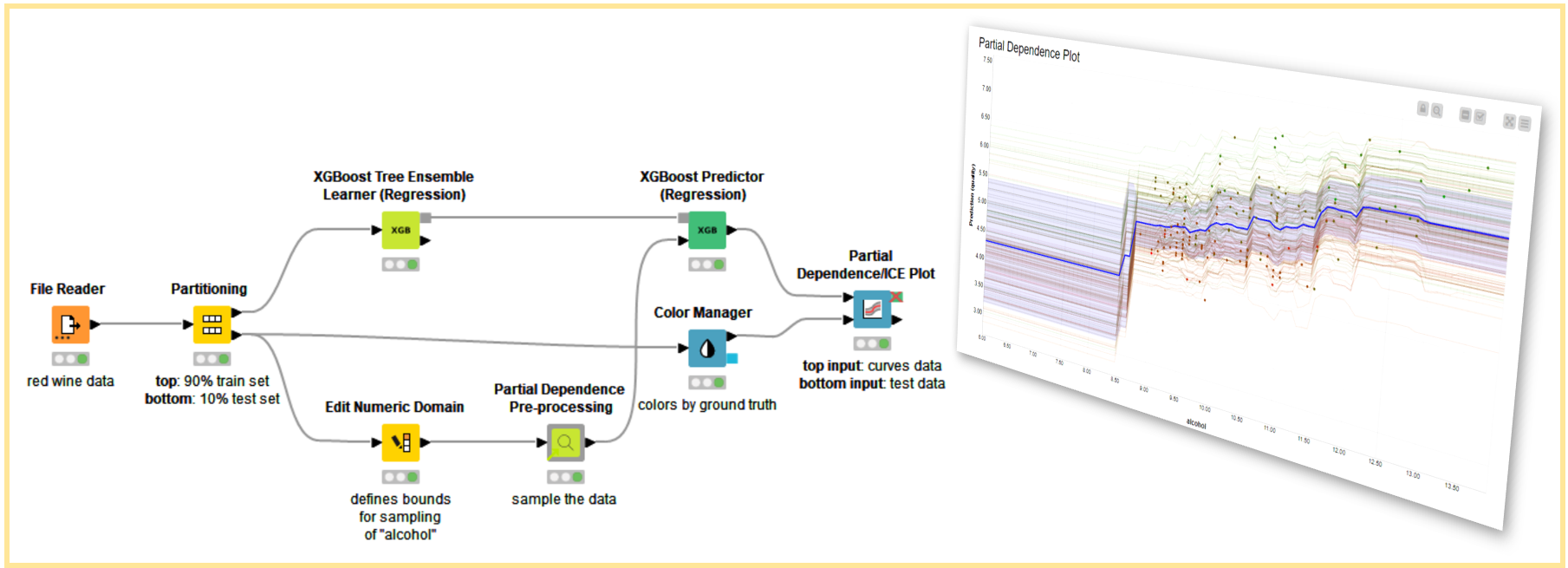

Global and local ML explanation techniques

We collected a few examples for both local and global XAI techniques. While local methods describe how an individual prediction is made, global methods reflect the average behavior of the whole model. For example, you can compute partial dependence (PDP) and individual conditional expectation (ICE) curves for a custom-trained ML regression model. PDP is global, while ICE is local. More examples are available.
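The relationship between ICE and PDP is easy to see in a few lines of Python. This is a minimal sketch, not the KNIME implementation: the `model` function, its two features (size and age), and the data rows are hypothetical stand-ins for any trained regression model.

```python
def model(row):
    """Hypothetical trained regression model: a price from size and age."""
    size, age = row
    return 3.0 * size - 0.5 * age + 0.1 * size * age


def ice_curves(predict, rows, feature_index, grid):
    """Local view: one curve per row, obtained by sweeping a single feature
    over the grid while keeping the row's other features fixed."""
    curves = []
    for row in rows:
        curve = []
        for value in grid:
            modified = list(row)
            modified[feature_index] = value
            curve.append(predict(modified))
        curves.append(curve)
    return curves


def partial_dependence(curves):
    """Global view: the PDP is the pointwise average of the ICE curves."""
    return [sum(points) / len(points) for points in zip(*curves)]


rows = [(50.0, 10.0), (80.0, 30.0)]   # illustrative (size, age) rows
grid = [40.0, 60.0]                   # values to try for the size feature
ice = ice_curves(model, rows, 0, grid)
pdp = partial_dependence(ice)
print(ice)
print(pdp)
```

Because this toy model contains an interaction between size and age, the two ICE curves have different slopes. The averaged PDP hides that difference, which is why the two views are often inspected together.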

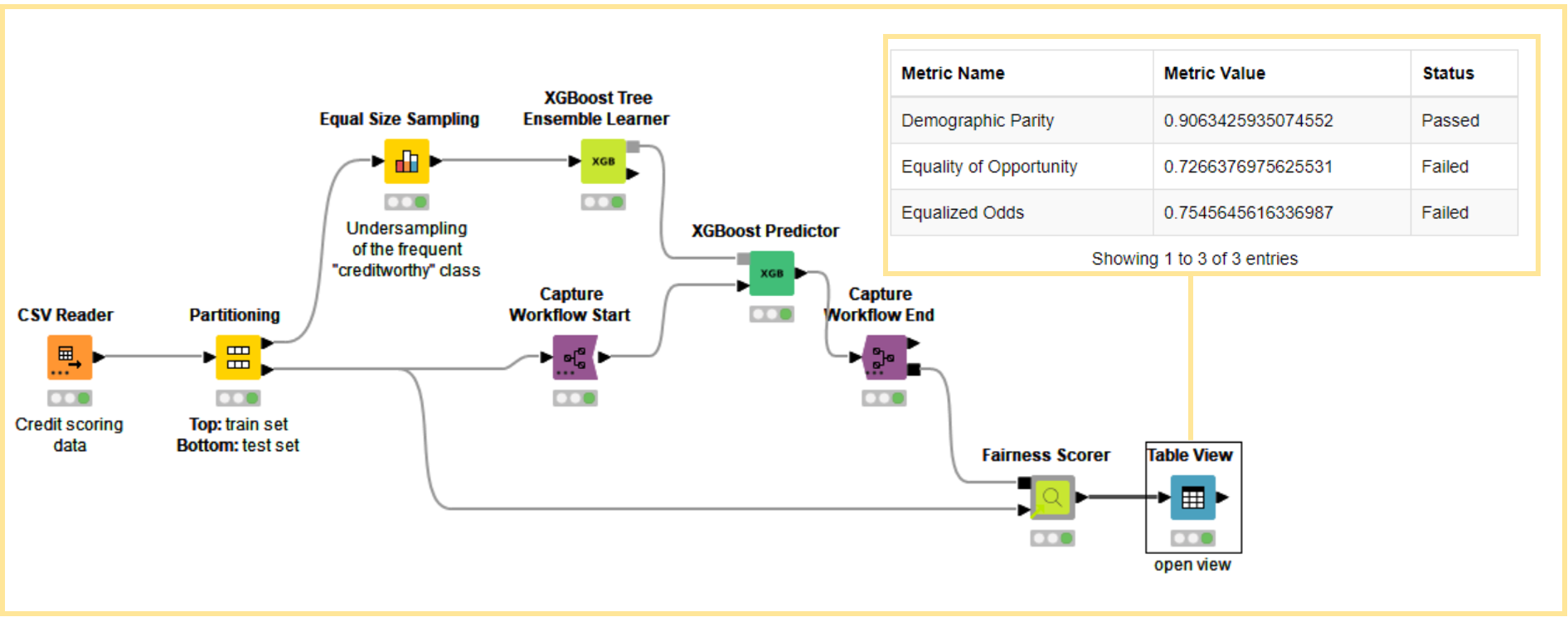

Responsible AI: Measuring fairness metrics

Training and deploying an ML model can take very few clicks, in theory. To make sure that the final model is fair to everyone affected by its predictions, you can adopt Responsible AI techniques. For example, we offer a component to audit any classification model and measure fairness via popular metrics from this field.
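To make the idea of a fairness metric concrete, here is a minimal Python sketch of two widely used ones: demographic parity difference (the gap in positive prediction rates between groups) and equal opportunity difference (the gap in true positive rates). Whether the KNIME component reports exactly these two metrics is an assumption on our part, and the labels, predictions, and group names below are invented for illustration.

```python
def selection_rate(predictions):
    """Share of rows that received a positive (1) prediction."""
    return sum(predictions) / len(predictions)


def true_positive_rate(labels, predictions):
    """Share of truly positive rows predicted as positive.
    Assumes the group contains at least one positive label."""
    hits = [p for y, p in zip(labels, predictions) if y == 1]
    return sum(hits) / len(hits)


def fairness_report(labels, predictions, groups):
    """Compare binary predictions across the values of a sensitive attribute."""
    by_group = {}
    for y, p, g in zip(labels, predictions, groups):
        ys, ps = by_group.setdefault(g, ([], []))
        ys.append(y)
        ps.append(p)
    rates = {g: selection_rate(ps) for g, (ys, ps) in by_group.items()}
    tprs = {g: true_positive_rate(ys, ps) for g, (ys, ps) in by_group.items()}
    return {
        "demographic_parity_difference": max(rates.values()) - min(rates.values()),
        "equal_opportunity_difference": max(tprs.values()) - min(tprs.values()),
    }


# Illustrative data: true labels, model predictions, and a sensitive attribute.
labels      = [1, 1, 0, 0, 1, 1, 0, 0]
predictions = [1, 1, 1, 0, 1, 0, 0, 0]
groups      = ["A", "A", "A", "A", "B", "B", "B", "B"]
report = fairness_report(labels, predictions, groups)
print(report)
```

A value of 0 for either metric means the groups are treated identically by that measure, and larger values indicate a larger gap worth investigating.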

Data Apps for business users ready for deployment

Not all experts need to adopt KNIME Analytics Platform to access these XAI techniques. Certain components in the XAI space can be deployed on the WebPortal of KNIME Server to offer data apps for business users. These XAI data apps can be shared via a link and accessed via login on any web browser. Business users can then interact via charts and buttons to understand the ML model in order to build trust. In the figure below, the animation shows how the Model Simulation View works both locally on KNIME Analytics Platform and online on KNIME WebPortal. Read more at “Deliver Data Apps with KNIME: Build a UX for Your Data Science.”

To learn more about the individual techniques, read these blog posts:

- “XAI – Explain Single Predictions with a Local Explanation View Component,” about the “Local Explanation View” component

- “Debug and Inspect your Black Box Model with XAI View,” about the “XAI View” component

- “Understand Your ML Model with Global Feature Importance,” about the “Global Feature Importance” component

Future posts will cover more techniques in detail!

If you trained a model in Python and you want to explain it in KNIME, we recommend “Codeless Counterfactual Explanations for Codeless Deep Learning” on the KNIME Blog.

If you're new to XAI, consider the LinkedIn Learning course “Machine learning and AI foundations: Producing Explainable AI (XAI) and Interpretable Machine Learning Solutions,” by Keith McCormick. More details at “Learn XAI based on Latest KNIME Verified XAI Components” on the KNIME Blog.