The main barrier to deploying GenAI applications in your company usually isn’t a lack of use cases. Most of us can come up with a list of GenAI use cases longer than we could execute on. In most cases, the main barrier is our inability to manage the risk of hallucination, bias, or inaccuracy with sufficient confidence. We need reliable AI, but how do we get there?

With KNIME’s new Giskard nodes, data workers have a simple way of checking any machine learning model, LLM, or RAG system for evidence of potential bias or hallucination.

Combined with KNIME’s visual step-by-step approach to data workflows, its AI gateway, and comprehensive governance features, it’s easier than ever to build and test LLMs.

Finally, your team and organization can have the confidence to scale GenAI use cases while managing the risk.

What is Giskard and how does it help?

Giskard is an open source Python library for testing, monitoring, and evaluating machine learning and AI models.

It offers a way to ensure machine learning solutions and GenAI applications relying on LLMs and RAG systems are reliable, fair, and robust.

Specifically, Giskard provides businesses with tools to automatically identify vulnerabilities, risks, and biases in analytics applications. Vulnerabilities can harm customer trust and brand reputation, and can lead to legal and regulatory disputes. Indeed, regulatory frameworks in the EU and USA now require stricter transparency and trustworthiness from AI providers, pushing businesses to enhance model risk management practices.

Giskard’s testing framework ensures scalable checks and deep reviews of LLM systems, while KNIME’s visual workflow builder allows you to make work transparent and auditable. Combined, these features support you in implementing strong AI governance practices before deployment.

How can you use Giskard with KNIME?

KNIME now has a collection of free-to-use Giskard nodes that each help you detect vulnerabilities in your models, such as potential hallucination and bias, without the need to write a single line of code.

You can include the Giskard ML, LLM, and RAGET nodes in your KNIME workflows to evaluate models and analyze the results as part of continuous deployment of data science processes.

When you use one of the Giskard nodes, the node monitor view shows a report highlighting potential vulnerabilities of your ML model or large language model, or gaps in the effectiveness of your RAG system. For traditional ML models, detectable vulnerabilities include spurious correlation, performance bias, and data leakage; for LLMs, they include hallucinations, harmful content, and low robustness. Both lists are partial.

The different Giskard nodes allow evaluation of different use-cases and applications. The Giskard ML Scanner can be used for classification and regression tasks, the Giskard LLM Scanner for text generation use-cases, and the RAGET Test Set Generator and RAGET Evaluator nodes for RAG systems.

After evaluating the results in Giskard you can then make a decision on whether your model, input features, prompt, or RAG components need fine-tuning to reach a set of results within a desired accuracy or bias range.

📌Traditional ML models: Take a look at these example workflows to see first-hand how the Giskard ML Scanner node can be used.

📌LLMs and RAG: Take a look at these example workflows to see first-hand the Giskard LLM Scanner and Giskard RAGET nodes in action.

What new Giskard nodes are available?

Let’s take a look at the different open source Giskard nodes offered in KNIME so you can get a feel for what they offer and which ones are right for your use case.

- Giskard ML Scanner: For traditional machine learning models

- Giskard LLM Scanner: For evaluating large language models

- Giskard RAGET (Test Set Generator and Evaluator): For evaluating RAG systems

Giskard ML Scanner

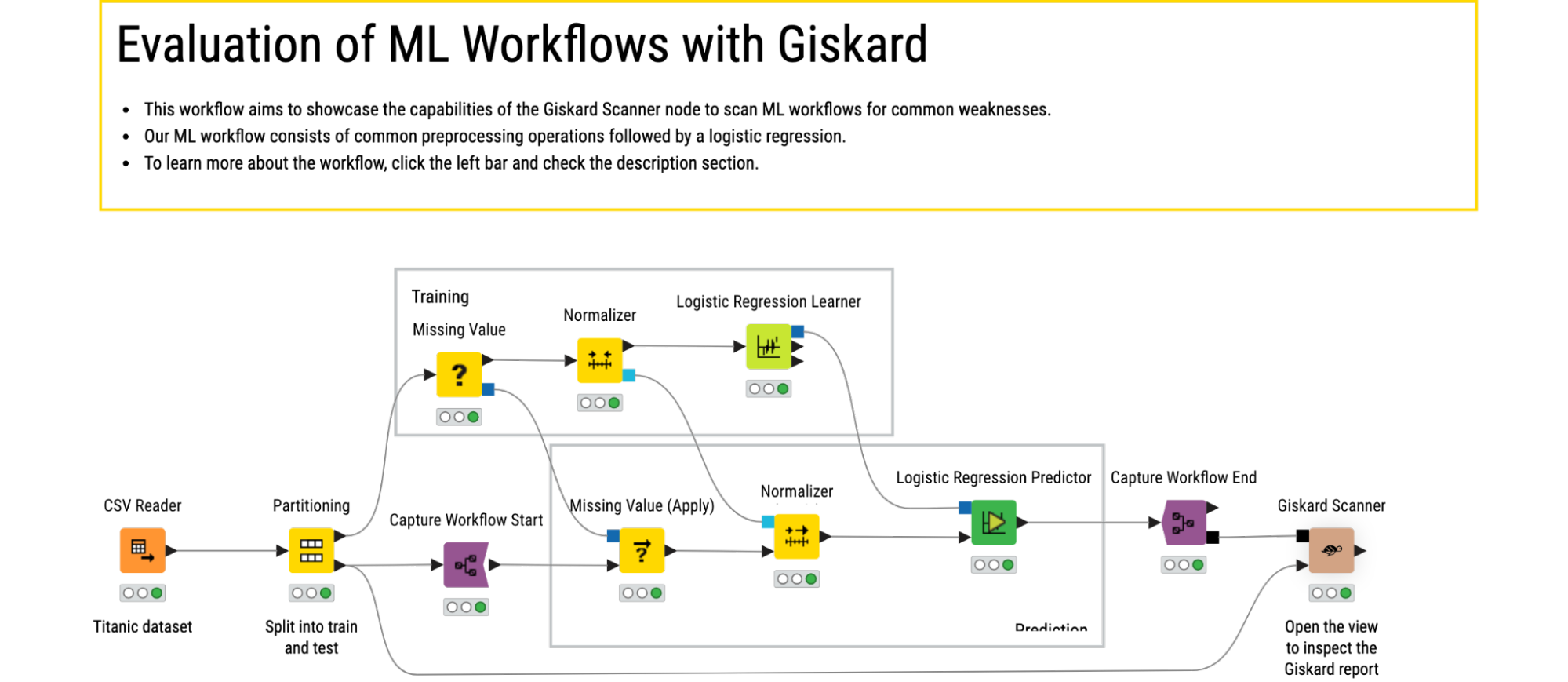

The Giskard ML Scanner node allows teams to evaluate ML pipelines and models for both classification and regression tasks.

The Giskard ML Scanner node checks for the following vulnerabilities:

| Vulnerability | Description |
| --- | --- |
| Performance bias | The workflow performs worse for subpopulations of the data. |
| Robustness | The workflow is sensitive to small perturbations in the input data. |
| Overconfidence | The workflow makes confident but incorrect predictions. |
| Underconfidence | The predictions of the workflow are not decisive even for correct predictions. |
| Unethical behaviour | The predictions are sensitive to perturbations of gender, religion or ethnicity. |
| Data leakage | Information from the test data leaks into the training data which makes the prediction workflow less generalizable. |
| Stochasticity | The workflow predictions vary across subsequent invocations on the same data. |
| Spurious correlation | Some of the features the workflow uses correlate highly with its predictions for subsets of the data. |
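
To give a feel for what a check like performance bias involves, here is a minimal Python sketch. It is an illustration only, not how the Giskard ML Scanner is implemented: the function names, the `region` column, the sample rows, and the 0.1 threshold are all assumptions made for this example. It compares accuracy on each subpopulation with overall accuracy and flags the slices that fall noticeably behind.

```python
from collections import defaultdict


def accuracy_by_slice(rows, slice_key):
    """Return {slice value: accuracy} for a list of row dicts."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for row in rows:
        group = row[slice_key]
        total[group] += 1
        if row["prediction"] == row["label"]:
            correct[group] += 1
    return {group: correct[group] / total[group] for group in total}


def flag_performance_bias(rows, slice_key, max_drop=0.1):
    """Flag slices whose accuracy is more than max_drop below overall accuracy."""
    overall = sum(r["prediction"] == r["label"] for r in rows) / len(rows)
    per_slice = accuracy_by_slice(rows, slice_key)
    return {g: acc for g, acc in per_slice.items() if overall - acc > max_drop}


# Hypothetical test table: predictions are perfect for "north", poor for "south".
rows = [
    {"region": "north", "label": 1, "prediction": 1},
    {"region": "north", "label": 0, "prediction": 0},
    {"region": "north", "label": 1, "prediction": 1},
    {"region": "north", "label": 0, "prediction": 0},
    {"region": "south", "label": 1, "prediction": 0},
    {"region": "south", "label": 0, "prediction": 0},
    {"region": "south", "label": 1, "prediction": 0},
    {"region": "south", "label": 0, "prediction": 1},
]

flagged = flag_performance_bias(rows, "region")
print(flagged)
```

In this toy data, the `south` slice is flagged because its accuracy sits well below the overall figure, which is the kind of finding the scan report surfaces for you.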

Input: The Giskard ML Scanner node takes as inputs the prediction workflow and a table of test data. The prediction workflow can be either read with the Workflow Reader node, or captured and exposed to down-stream operations via the Capture Workflow Start and Capture Workflow End nodes.

Output: The node outputs an evaluation report, available both as a nicely formatted view and as a table for further processing down-stream.

Giskard LLM Scanner

The Giskard LLM Scanner node allows you to automatically detect risks and vulnerabilities in LLMs and GenAI applications.

The Giskard LLM Scanner node checks for the following vulnerabilities:

| Vulnerability | Description |
| --- | --- |
| Hallucination and misinformation | The generation of fabricated information that does not reflect reality. |
| Harmful content | The generation of malicious text such as hate speech, instructions for illegal activities, or content that promotes violence. |
| Prompt injection | The manipulation of the input prompt to overcome content filters or alter the workflow's original instructions. |
| Robustness | High sensitivity to small perturbations in the input that lead to significant divergence in the responses. |
| Output formatting | The inability to follow the desired output format. |
| Information disclosure | The exposure of private or sensitive information in responses. |
| Stereotypes and discrimination | The perpetuation of common stereotypes that discriminate against parts of the demographic. |
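
As an illustration of the robustness idea for text generation, the following Python sketch perturbs a prompt in small, meaning-preserving ways and flags responses that diverge from the baseline. It is a simplified stand-in, not the Giskard LLM Scanner's actual method: the perturbations, the `difflib`-based similarity measure, the 0.8 threshold, and the two toy applications are assumptions chosen for this example.

```python
import difflib


def perturb(prompt):
    """Return small variants of a prompt that should not change its meaning."""
    return [prompt.upper(), prompt.lower(), prompt + "  ", prompt.replace(",", "")]


def similarity(a, b):
    """Character-level similarity between two strings, from 0.0 to 1.0."""
    return difflib.SequenceMatcher(None, a, b).ratio()


def robustness_issues(generate, prompt, min_similarity=0.8):
    """List (variant, similarity) pairs where the response drifts from the baseline."""
    baseline = generate(prompt)
    issues = []
    for variant in perturb(prompt):
        score = similarity(baseline, generate(variant))
        if score < min_similarity:
            issues.append((variant, round(score, 2)))
    return issues


# Hypothetical stand-ins for a generative workflow.
def stable_app(prompt):
    return "Our return window is 30 days."


def brittle_app(prompt):
    # Changes its answer when the prompt is written in upper case.
    if prompt.isupper():
        return "I cannot help with that."
    return "Our return window is 30 days."


prompt = "What is your return policy, please?"
print(robustness_issues(stable_app, prompt))
print(robustness_issues(brittle_app, prompt))
```

The stable application produces no issues, while the brittle one is flagged for the upper-case variant of the prompt.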

Input: The node takes as input the generative workflow and an LLM to analyze it. Similar to the Giskard ML Scanner, the generative workflow can be either read with the Workflow Reader node, or captured and exposed to down-stream operations via the Capture Workflow Start and Capture Workflow End nodes. The node also allows you to provide an optional table input port for adding extra information.

Output: The Giskard LLM Scanner outputs an evaluation report, available both as a nicely formatted view and as a table for further processing down-stream.

📌 Check this tutorial and learn how to detect LLM vulnerabilities in GenAI applications using KNIME.

Giskard RAGET

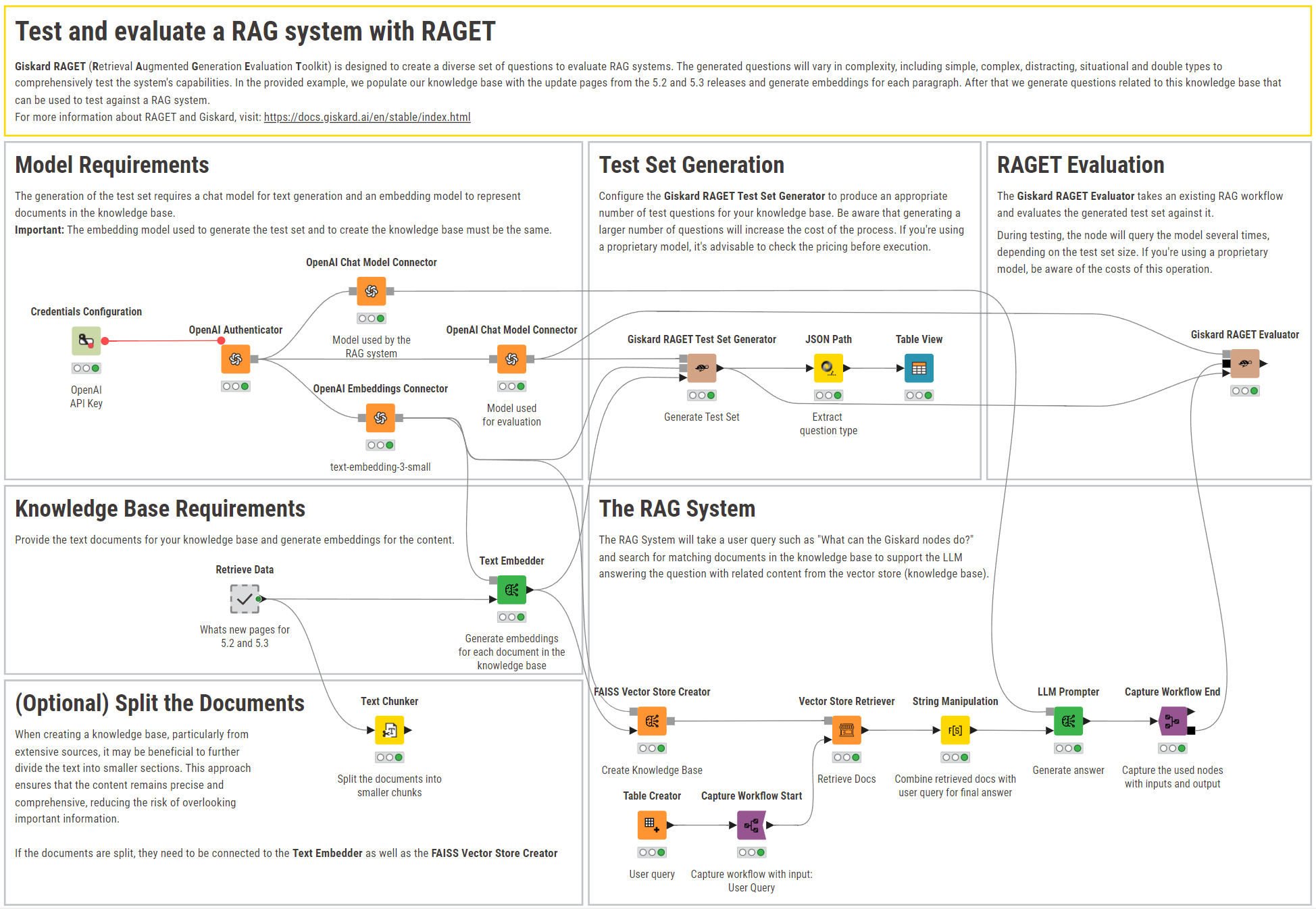

Giskard RAGET (short for Retrieval Augmented Generation Evaluation Toolkit) is a testing and evaluation framework designed to automatically assess RAG systems. RAG systems include five components: the knowledge base, the retriever, the generator, and optionally the rewriter and the router. The Giskard RAGET nodes evaluate all five of these components.

The Giskard RAGET strategy involves creating a test set of multiple types of questions (i.e., queries) and their corresponding reference answers, each designed to target and evaluate specific components of the RAG system.

In the evaluation phase, the correctness of the answer provided by the RAG system is compared to the reference answer using an LLM-as-a-judge approach.
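
To make the LLM-as-a-judge idea more concrete, here is a minimal Python sketch of the evaluation loop. It is not the prompt or code that Giskard RAGET uses: the prompt wording, the function names, and the `toy_rag` and `toy_judge` stand-ins are assumptions for illustration. In practice, `judge` would call a real LLM rather than compare strings.

```python
def build_judge_prompt(question, reference_answer, system_answer):
    """Assemble the grading prompt sent to the judge model."""
    return (
        "You are grading a question-answering system.\n"
        f"Question: {question}\n"
        f"Reference answer: {reference_answer}\n"
        f"System answer: {system_answer}\n"
        "Reply with CORRECT if the system answer agrees with the reference, "
        "otherwise reply with INCORRECT."
    )


def judge_test_set(test_set, rag_system, judge):
    """Ask the RAG system each question and let the judge grade the answer."""
    results = []
    for item in test_set:
        answer = rag_system(item["question"])
        verdict = judge(
            build_judge_prompt(item["question"], item["reference_answer"], answer)
        )
        is_correct = verdict.strip().upper().startswith("CORRECT")
        results.append({**item, "answer": answer, "correct": is_correct})
    return results


# Hypothetical stand-in for the RAG workflow under test.
def toy_rag(question):
    answers = {
        "What does RAGET stand for?": "retrieval augmented generation evaluation toolkit"
    }
    return answers.get(question, "I don't know.")


# Hypothetical stand-in for the judge LLM: compares the two answers literally.
def toy_judge(prompt):
    fields = dict(line.split(": ", 1) for line in prompt.splitlines() if ": " in line)
    same = fields["Reference answer"].lower() == fields["System answer"].lower()
    return "CORRECT" if same else "INCORRECT"


test_set = [
    {
        "question": "What does RAGET stand for?",
        "reference_answer": "Retrieval Augmented Generation Evaluation Toolkit",
    },
    {
        "question": "Which node builds the test set?",
        "reference_answer": "The Giskard RAGET Test Set Generator",
    },
]

results = judge_test_set(test_set, toy_rag, toy_judge)
print([r["correct"] for r in results])
```

Keeping the judge as a plain function argument makes it easy to swap the toy comparison for a call to whichever LLM you choose as the judge.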

Giskard RAGET Test Set Generator

The Giskard RAGET Test Set Generator node saves you the legwork of creating your own test set of multiple question types for evaluating the quality of your RAG system. The node uses an LLM to generate questions based on the provided knowledge base.

The different question types and their target include:

| Question type | Description | Target components |
| --- | --- | --- |
| Simple questions | Straightforward questions about parts of the knowledge base. | Generator, retriever, router |
| Complex questions | Questions with higher complexity that require more cognitive power to answer. | Generator |
| Distracting questions | Questions that contain misleading pieces of information. | Generator, retriever, rewriter |
| Situational questions | Questions that include context information that the system has to take into account in order to produce a good answer. | Generator |
| Double questions | Questions that ask for different pieces of information within one sentence. | Generator, rewriter |

Input: The node takes as inputs a chat model to generate questions from the provided knowledge base, an embedding model to embed queries and find related documents, and a table with the knowledge base containing documents and their embedding representations.

Output: The Giskard RAGET Test Set Generator node outputs a table containing the generated question, its reference answer (obtained with GPT-4), the reference context to answer the question, and some metadata.

Giskard RAGET Evaluator

The Giskard RAGET Evaluator node allows you to diagnose and identify the weakest component(s) in your RAG system and make any amendments before you put it into production. It evaluates the correctness of responses produced by the RAG system relative to the reference answers generated with the Giskard RAGET Test Set Generator.

Input: The node takes as inputs an LLM to act as a judge, the RAG workflow segment to analyze and a table with the test set of questions.

Output: The Giskard RAGET Evaluator node outputs a report that grades each RAG component. Correctness is used as the evaluation metric, and assigned scores fall between 0 and 100, with 100 being a perfect score. It also gives recommendations on how to improve the overall correctness score, and breaks down correctness by topic for easier debugging.
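
One plausible way to turn per-question verdicts into per-component grades is sketched below in Python. It is an assumption for illustration, not a description of how the node computes its report: each component's score is taken as the percentage of correct answers among the question types that target it, using the question-type targets listed earlier. The sample results are made up, and the knowledge base is left out because the question types above do not list it as a target.

```python
# Question types and the components they target, as listed earlier in this article.
QUESTION_TARGETS = {
    "simple": ["generator", "retriever", "router"],
    "complex": ["generator"],
    "distracting": ["generator", "retriever", "rewriter"],
    "situational": ["generator"],
    "double": ["generator", "rewriter"],
}


def component_scores(results):
    """Score each component from 0 to 100 as the share of correct answers
    among the questions that target it."""
    hits = {}
    totals = {}
    for result in results:
        for component in QUESTION_TARGETS[result["question_type"]]:
            totals[component] = totals.get(component, 0) + 1
            hits[component] = hits.get(component, 0) + (1 if result["correct"] else 0)
    return {component: 100 * hits[component] / totals[component] for component in totals}


# Hypothetical judged results, one entry per test question.
results = [
    {"question_type": "simple", "correct": True},
    {"question_type": "simple", "correct": True},
    {"question_type": "complex", "correct": True},
    {"question_type": "distracting", "correct": False},
    {"question_type": "distracting", "correct": False},
    {"question_type": "situational", "correct": True},
    {"question_type": "double", "correct": True},
    {"question_type": "double", "correct": False},
]

scores = component_scores(results)
weakest = min(scores, key=scores.get)
print(scores)
print(weakest)
```

With these made-up results the rewriter comes out weakest, which is where you would start debugging.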

Put AI into production with confidence

The Giskard nodes can be run in the context of KNIME’s Continuous Deployment of Data Science on KNIME Business Hub, to help organizations scale artificial intelligence and GenAI applications with confidence. They are also available in KNIME Analytics Platform workflows and when you automate workflows with a KNIME Community Hub Team plan.

The addition of Giskard nodes to KNIME broadens the KNIME toolset even further for businesses that care about deploying AI solutions securely and responsibly.

Discover more of what KNIME has to offer around GenAI governance and risk management.