Bias in artificial intelligence (AI) is hugely controversial these days. From image classifiers that label people’s faces improperly to hiring bots that discriminate against women when screening candidates for a job, AI seems to inherit the worst of human practices when trying to automatically replicate them.

The risk is that we will use AI to create an army of racist, sexist, foul-mouthed bots that will then come back to haunt us. This is an ethical dilemma. If AI is inherently biased, isn’t it dangerous to rely on it? Will we end up shaping our worst future?

Machines will be Machines

Let me clarify one thing first: AI is just a machine. We might anthropomorphize it, but it remains a machine. The process is not dissimilar from when we play with stones at the lake with our kids, and suddenly, a dull run-of-the-mill stone becomes a cute pet stone.

Even when playing with your kids, we generally do not forget that a pet stone, however cute, is still just a stone. We should do the same with AI: However humanlike its conversation or its look, we should not forget that it still is just a machine.

Some time ago, for example, I worked on a bot project: a teacher bot. The idea was to generate automatic informative answers to inquiries about documentation and features of the open source data science software KNIME Analytics Platform. As in all bot projects, one important issue was the speaking style.

There are many possible speaking or writing styles. In the case of a bot, you might want it to be friendly, but not excessively so — polite and yet sometimes assertive depending on the situation. The blog post “60 Words to Describe Writing or Speaking Styles” lists 60 nuances of different bot speaking styles: from chatty and conversational to lyric and literary, from funny and eloquent to formal and, my favorite of all, incoherent. Which speaking style should my bot adopt?

I went for two possible styles: polite and assertive. Polite to the limit of poetic. Assertive to bordering on impolite. Both are a free text generation problem.

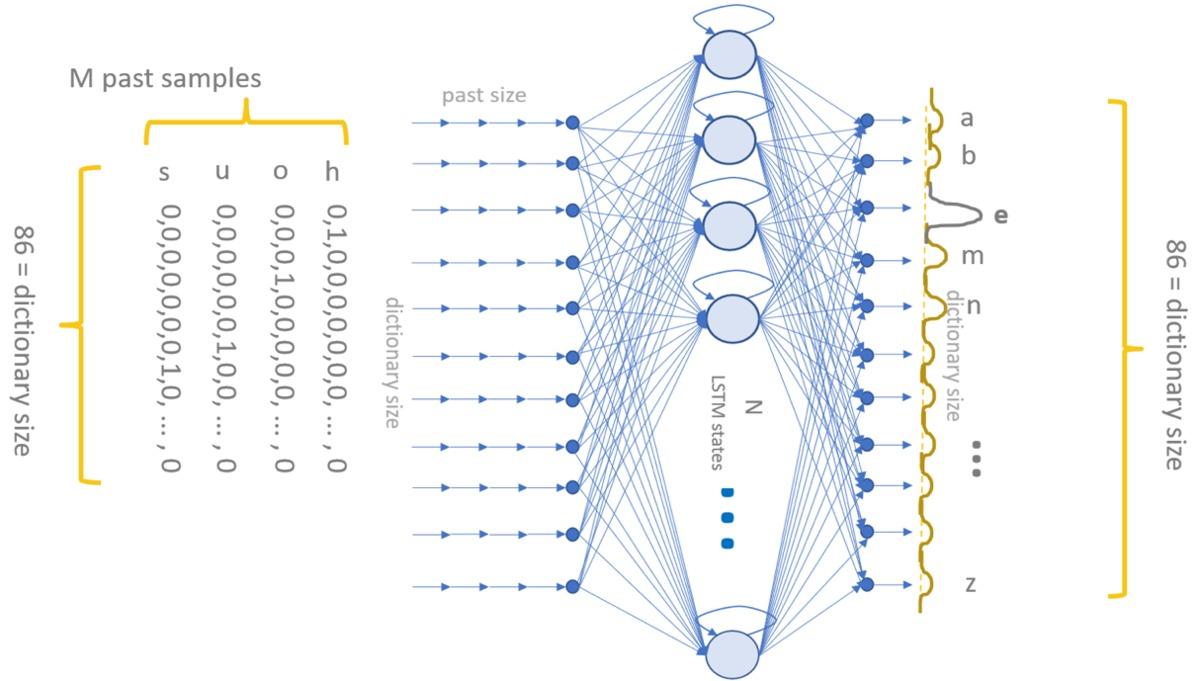

As part of this teacher bot project, a few months ago I implemented a simple deep learning neural network with a hidden layer of long short-term memory (LSTM) units to generate free text.

The network would take a sequence of M characters as input and predict the next most likely character at the output layer. So given the sequence of characters “h-o-u-s” at the input layer, the network would predict “e” as the next most likely character. Trained on a corpus of free sentences, the network learns to produce words and even sentences one character at a time.

I did not build the deep learning network from scratch, but instead (following the current trend of finding existing examples on the internet) searched the KNIME Hub for similar solutions for free text generation. I found one, where a similar network was trained on existing real mountain names to generate fictitious copyright-free mountain-reminiscent candidate names for a line of new products for outdoor clothing. I downloaded the network and customized it for my needs, for example, by transforming the many-to-many into a many-to-one architecture.

The network would be trained on an appropriate set of free texts. During deployment, a trigger sentence of M=100 initial characters would be provided, and the network would then continue by itself to assemble its own free text.

An Example of AI Bias

Just imagine a customer or user has unreasonable yet firmly rooted expectations and demands the impossible. How should I answer? How should the bot answer? The first task was to train the network to be assertive — very assertive to the limit of impolite. Where can I find a set of firm and assertive language to train my network?

I ended up training my deep learning LSTM-based network on a set of rap song texts. I figured that rap songs might contain all the sufficiently assertive texts needed for the task.

What I got was a very foul-mouthed network; so much so that every time I present this case study to an audience, I have to invite all minors to leave the room. You might think that I had created a sexist, racist, disrespectful — i.e., an openly biased — AI system. It seems I did.

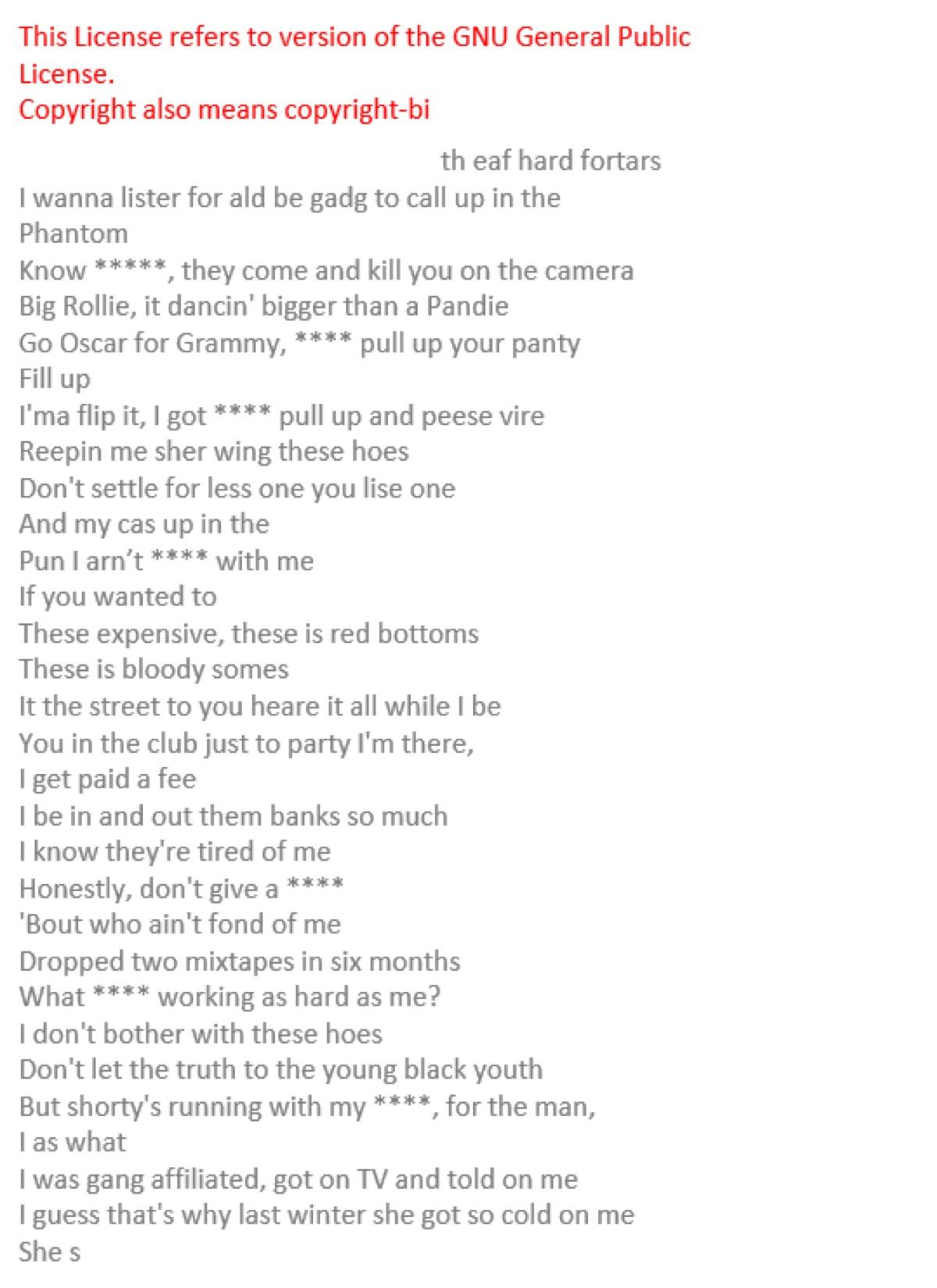

Below is one of the rap songs the network generated. The first 100 trigger characters were manually inserted; these are in red. The network-generated text is in gray. The trigger sentence is, of course, important to set the proper tone for the rest of the text. For this particular case, I started with the most boring sentence you could find in the English language: a software license description.

It is interesting that, among all possible words and phrases, the neural network chose to include “paying a fee,” “expensive,” “banks,” and “honestly” in this song. The tone might not be the same, but the content tries to comply with the trigger sentence.

More details about the construction, training, and deployment of this network can be found in the article “AI-Generated Rap Songs.”

The language might not be the most elegant and formal, but it has a pleasant rhythm to it, mainly due to the rhyming. Notice that for the network to generate rhyming text, the length M of the sequence of past input samples must be sufficient. Rhyming works for M=100 but never for M=50 past characters.

Removing Bias from AI

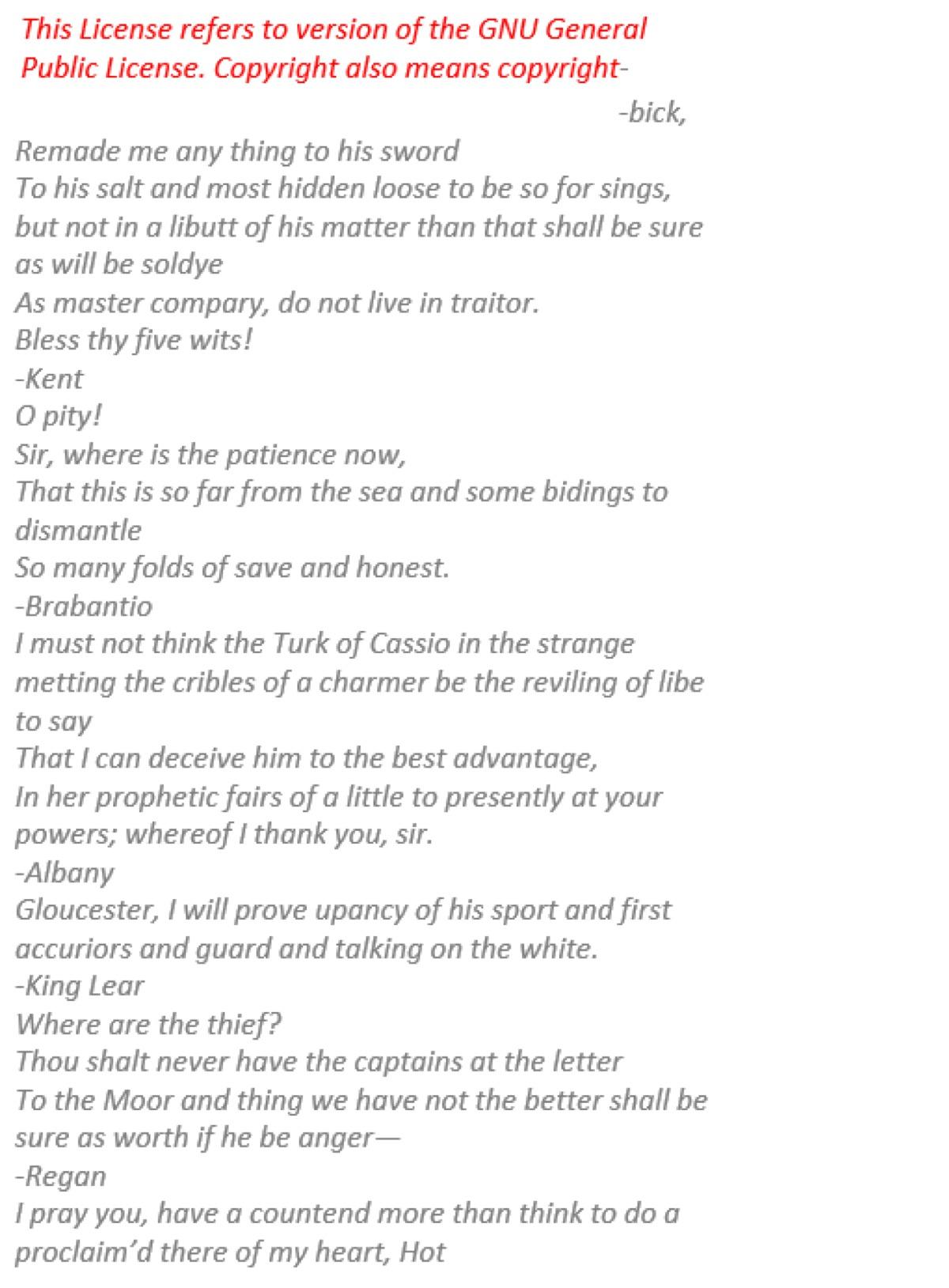

In an attempt to reeducate my misbehaving network, I created a new training set that included three theater pieces by Shakespeare: two tragedies (“King Lear” and “Othello”) and one comedy (“Much Ado About Nothing”). I then retrained the network on this new training set.

After deployment, the network now produces Shakespearean-like text rather than a rap song — a definite improvement in terms of speech cleanliness and politeness. No more profanities! No more foul language!

Again, let’s trigger the free text generation with the start of the software license text and see how Shakespeare would proceed according to our network. Below is the Shakespearean text that the network generated: in red, the first 100 trigger characters that were manually inserted; in gray, the network-generated text.

Even in this case, the trigger sentence sets the tone for the next words: “thief,” “save and honest,” and the memorable “Sir, where is the patience now” all correspond to the reading of a software license. However, the speaking style is very different this time.

More details about the construction, training, and deployment of this network can be found in “How to use deep learning to write Shakespeare.”

Now, keep in mind that the neural network that generated the Shakespearean-like text was the same neural network that generated the rap songs. Exactly the same. It just trained on a different set of data: rap songs on the one hand, Shakespeare’s theater pieces on the other. As a consequence, the free text produced is very different — as is the bias of the texts generated in production.

Summarizing, I created a very foul-mouthed, aggressive, and biased AI system and a very elegant, formal, almost poetic AI system too — at least as far as speaking style goes. The beauty of it is that both are based on the same AI model — the only difference between the two neural networks is the training data. The bias was really in the data and not in the AI models.

Bias In, Bias Out

Indeed, an AI model is just a machine, like a pet stone is ultimately just a stone. It is a machine that adjusts its parameters (learns) on the basis of the data in the training set. Sexist data in the training set produce a sexist AI model. Racist data in the training set produce a racist AI model. Since data are created by humans, they are also often biased. Thus, the resulting AI systems will also be biased. If the goal is to have a clean, honest, unbiased model, then the training data should be cleaned and stripped of all biases before training.

As first published in InfoWorld.