Scrolling through large galleries of product images to review details, categorize products, or update records is time-consuming, error-prone, and labour-intensive.

This challenge isn’t limited to e-commerce or inventory management. Retail teams need to update product catalogs with accurate descriptions and pricing. Fashion brands track seasonal collections and organize lookbooks. Manufacturers document product variations and quality control images. Even real estate agencies and auction houses rely on structured image data for property listings and historical archives. Whether managing an online store, maintaining an internal database, or organizing visual assets for reporting, automating this process can save time and effort.

By using KNIME’s visual workflows and GenAI, you can quickly generate structured descriptions of product images, transforming image galleries into actionable data tables ready for storage in a database.

This blog series on Summarize with GenAI showcases a collection of KNIME workflows designed to access data from various sources (e.g., Box, Zendesk, Jira, Google Drive, etc.) and deliver concise, actionable summaries.

Here’s a 1-minute video that gives you a quick overview of the workflow. You can download the example workflow here to follow along as we go through the tutorial.

Let’s get started.

Automate product image summarization

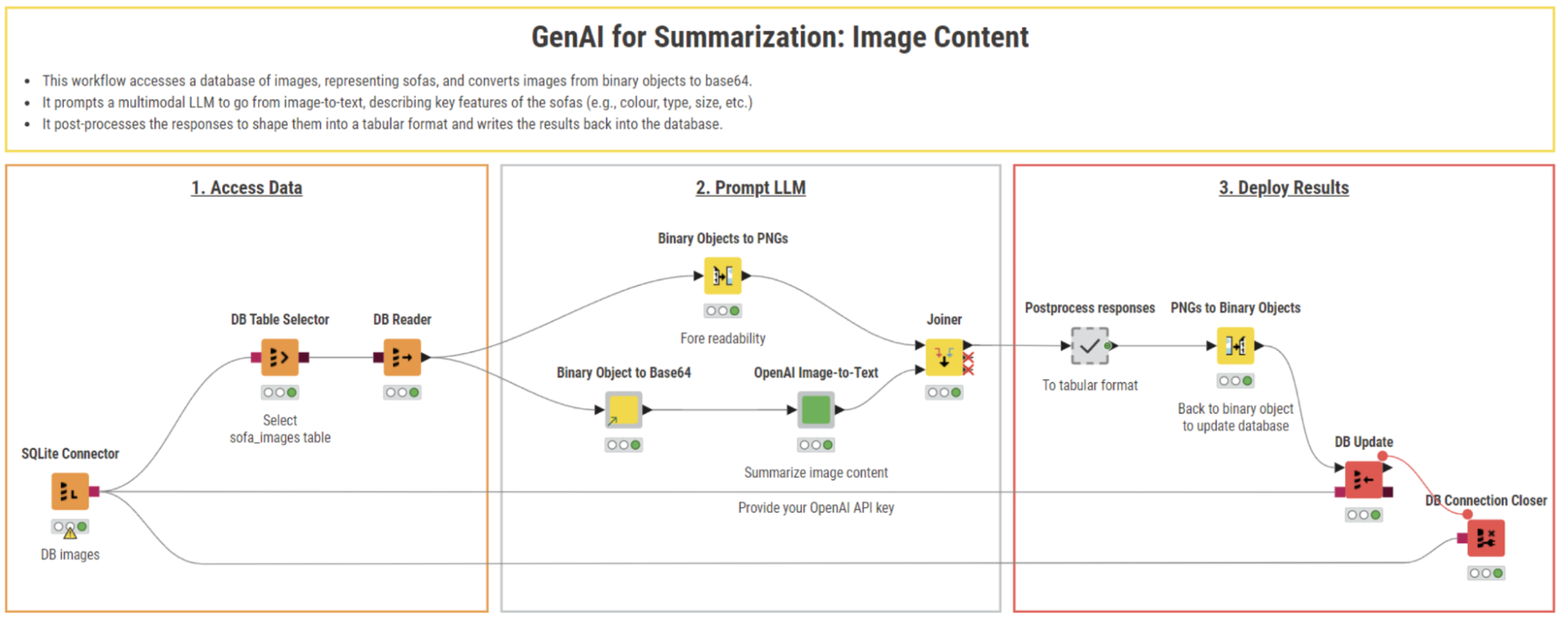

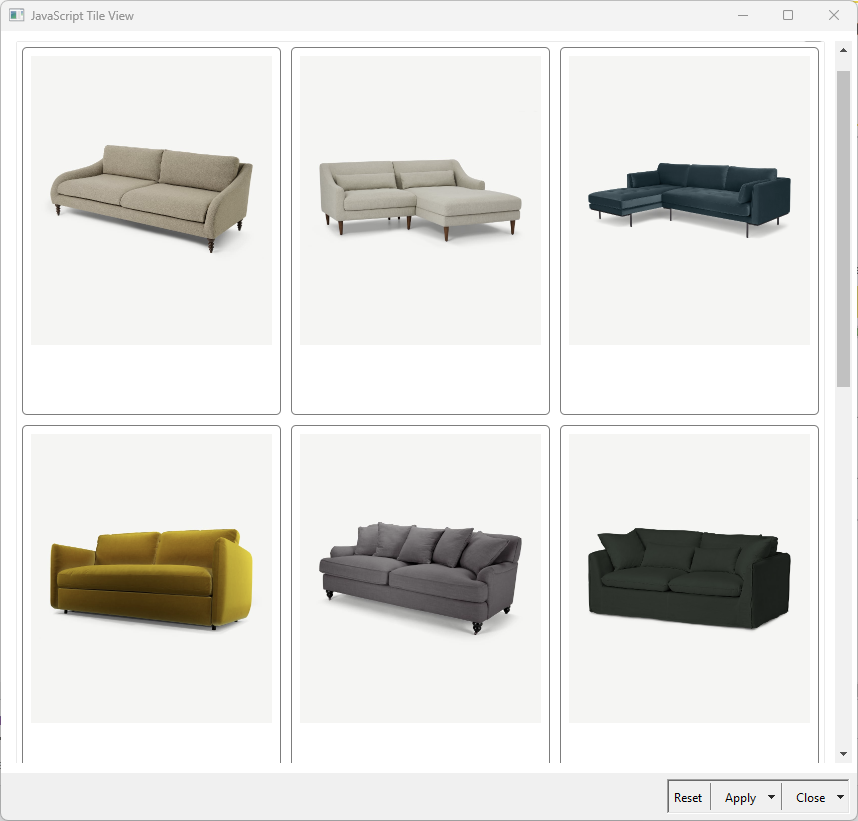

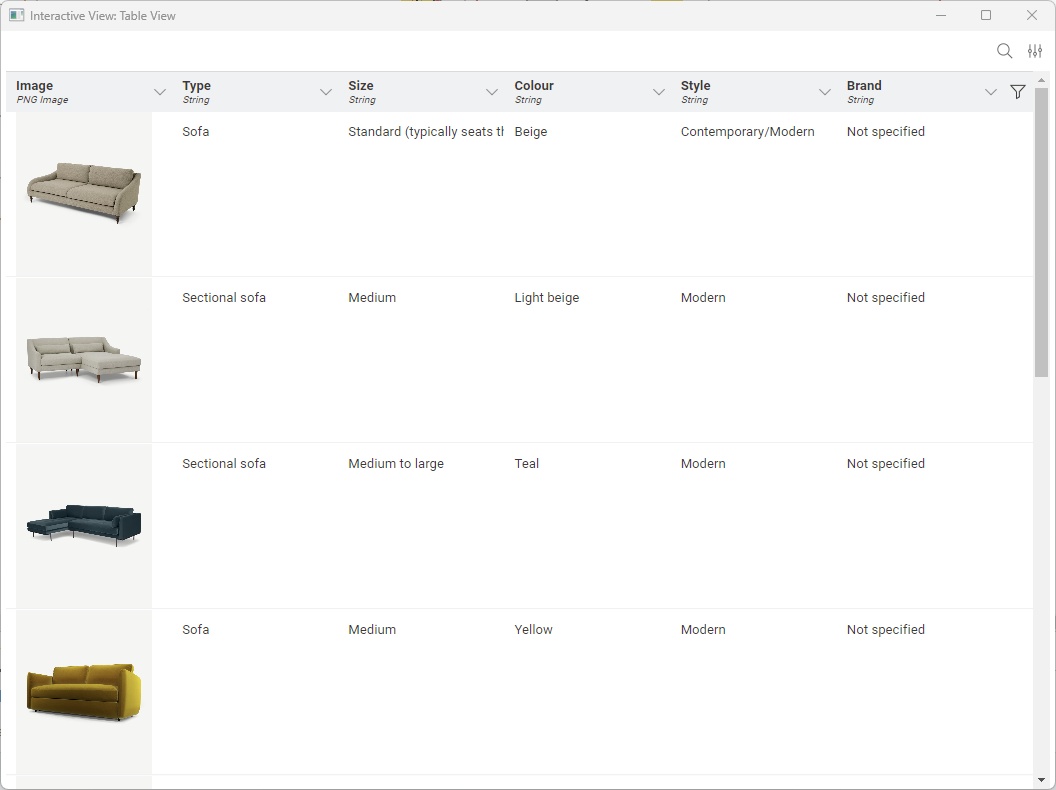

The goal of this workflow is to automatically process large galleries of product images, such as sofas, to generate concise summaries of key attributes, including type, size, color, style, and brand. The summaries are then structured into a table and stored in a database.

We can do that in three steps:

- Fetch and process product images from an SQLite database

- Use a multimodal LLM to summarize product features into a structured data table

- Store the structured data back into the database

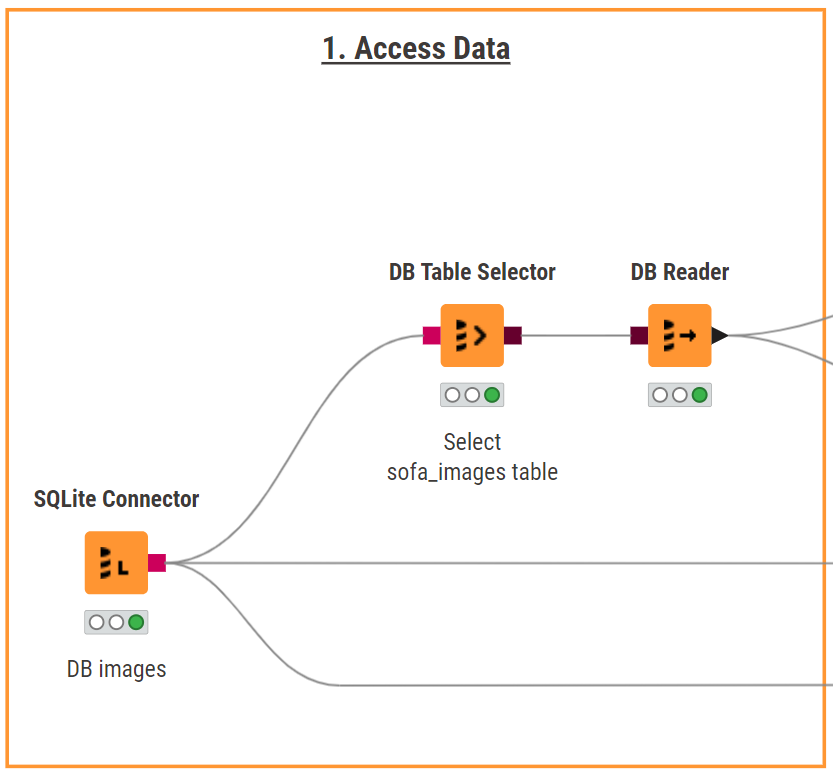

Step 1. Data access: Fetch product images from a database

We start off by fetching product images from an SQLite database. The SQLite Connector node establishes a connection to the database, while the DB Table Selector node specifies the target table. The DB Reader node then reads the images into the workflow.
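For readers who want to see what this read step amounts to outside KNIME, here is a minimal Python sketch using the standard `sqlite3` module. It is not the workflow's implementation: the table name `products` and the column names `id` and `image` are assumptions made for illustration, since the article does not name the actual schema.

```python
import sqlite3


def fetch_images(db_path, table="products", id_col="id", image_col="image"):
    """Return a list of (id, image_bytes) tuples read from an SQLite table.

    The table and column names are hypothetical placeholders. Identifiers
    cannot be bound as SQL parameters, so only pass trusted names here.
    """
    query = (
        f'SELECT "{id_col}", "{image_col}" FROM "{table}" '
        f'WHERE "{image_col}" IS NOT NULL ORDER BY "{id_col}"'
    )
    conn = sqlite3.connect(db_path)
    try:
        rows = conn.execute(query).fetchall()
    finally:
        # Always release the connection, even if the query fails.
        conn.close()
    # SQLite returns BLOB columns as bytes objects.
    return [(row_id, bytes(blob)) for row_id, blob in rows]
```

Rows without an image are skipped so that later steps only receive data they can encode.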

In the SQLite database, product images are stored as binary objects. To convert them into image files and view the gallery, we can use the Binary Objects to PNG node followed by the Table View or the Tile View (JavaScript) node.

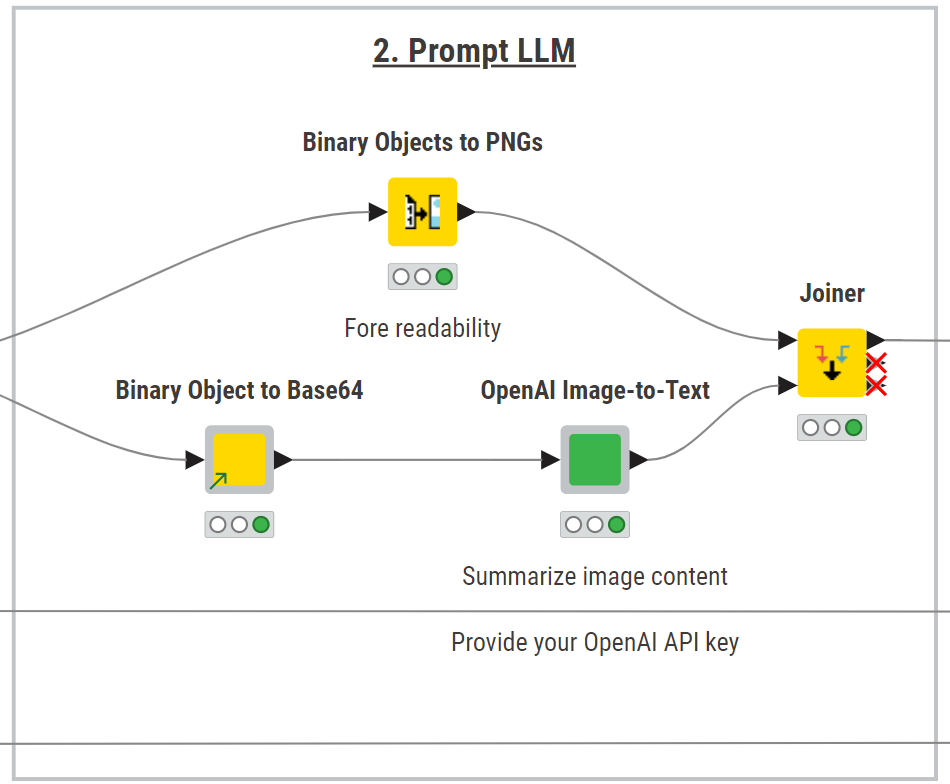

Step 2. Prompt LLM: Summarize product images with OpenAI’s GPT-4o-mini

To process and summarize image data, we need to pick the best-fit LLM for the task, balancing model capabilities, costs, and performance. In our example, we connect to OpenAI’s GPT-4o-mini, a multimodal model that accepts both text and image inputs and returns text, which makes it suitable for image-to-text tasks.

In KNIME, GPT-4o-mini can be used for image-to-text processing via the KNIME REST Client Extension, which enables queries to OpenAI’s API through POST requests. Since the model requires images in Base64 format, the Binary Object to Base64 component converts the image data accordingly.
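To make the Base64 step concrete, here is a small Python sketch of the conversion. It only illustrates the encoding itself and is not the component's internals. The helper names `to_base64` and `to_data_url` are hypothetical, and wrapping the encoded string in a `data:` URL is an assumption about how the image is passed along in the request.

```python
import base64


def to_base64(image_bytes: bytes) -> str:
    """Encode raw image bytes as a Base64 ASCII string."""
    return base64.b64encode(image_bytes).decode("ascii")


def to_data_url(image_bytes: bytes, image_format: str = "png") -> str:
    """Wrap the Base64 string in a data URL that states the image format."""
    return f"data:image/{image_format};base64,{to_base64(image_bytes)}"
```

Base64 turns binary data into plain text, which is what allows an image to travel inside a JSON request body.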

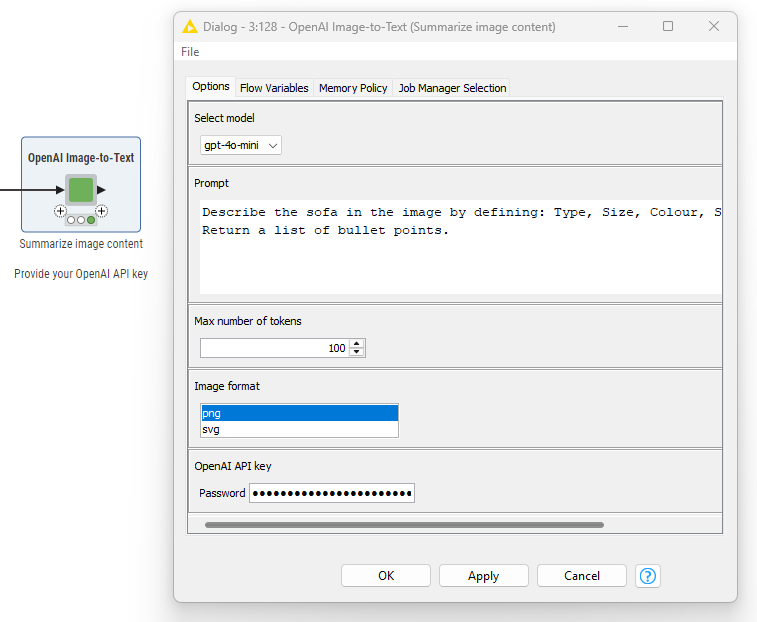

To make it easy to use and share, the workflow encapsulates the interaction with the model in a configurable “OpenAI Image-to-Text” component. This component allows you to select one of OpenAI’s multimodal models, define the maximum number of tokens, select the image format, input a prompt, and provide an OpenAI API key.

Here is the prompt we used:

Describe the sofa in the image by defining: Type, Size, Colour, Style, and Brand. Return a list of bullet points.

Inside the component, we use the Container Input (JSON) node to provide a request body template that we populate with a series of String Manipulation nodes. These nodes dynamically add the Base64-encoded image, the formatted prompt, and the token limit.
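The article does not show the request body template itself, so the following Python sketch is an assumption about its shape. It follows the message format that OpenAI's Chat Completions API accepts for image inputs as we understand it. The function name `build_request_body` and the default of 300 tokens are illustrative choices, not values taken from the workflow.

```python
import json

PROMPT = (
    "Describe the sofa in the image by defining: Type, Size, Colour, "
    "Style, and Brand. Return a list of bullet points."
)


def build_request_body(image_b64, prompt=PROMPT, model="gpt-4o-mini",
                       max_tokens=300, image_format="png"):
    """Fill a request template with the image, the prompt and the token limit."""
    return {
        "model": model,
        "max_tokens": max_tokens,  # assumed default, adjust as needed
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": prompt},
                    {
                        "type": "image_url",
                        "image_url": {
                            # The image travels inline as a Base64 data URL.
                            "url": f"data:image/{image_format};base64,{image_b64}"
                        },
                    },
                ],
            }
        ],
    }


if __name__ == "__main__":
    # "QUJD" is the Base64 encoding of b"ABC", used here as a stand-in image.
    print(json.dumps(build_request_body("QUJD"), indent=2))
```

Building the body as a dictionary and serializing it at the end avoids the escaping problems that can arise when a JSON string is assembled piece by piece.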

The request body is then converted to JSON using the String to JSON node and sent to the model via the POST Request node. The model processes the image and returns a JSON response, which is parsed with the JSON Path node to extract the relevant fields, such as the generated summary text.
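As a rough Python equivalent of the POST Request and JSON Path steps, the sketch below sends a request body and pulls the generated text out of the reply. It rests on assumptions: the endpoint URL and the `choices[0].message.content` path reflect OpenAI's Chat Completions API as we understand it, the article does not state which JSON path the workflow queries, and the function names are hypothetical.

```python
import json
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"


def post_request(body, api_key, url=API_URL, timeout=60):
    """Send the request body as JSON and return the decoded JSON response."""
    request = urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


def extract_summary(response_json):
    """Return the generated text, i.e. JSONPath $.choices[0].message.content."""
    return response_json["choices"][0]["message"]["content"]
```

Keeping the network call and the parsing in separate functions makes it possible to test the parsing against a saved response without spending API credits.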

The output of the component is joined with the PNG images using the Joiner node, enabling a quick inspection of the visual references alongside the AI-generated summaries.

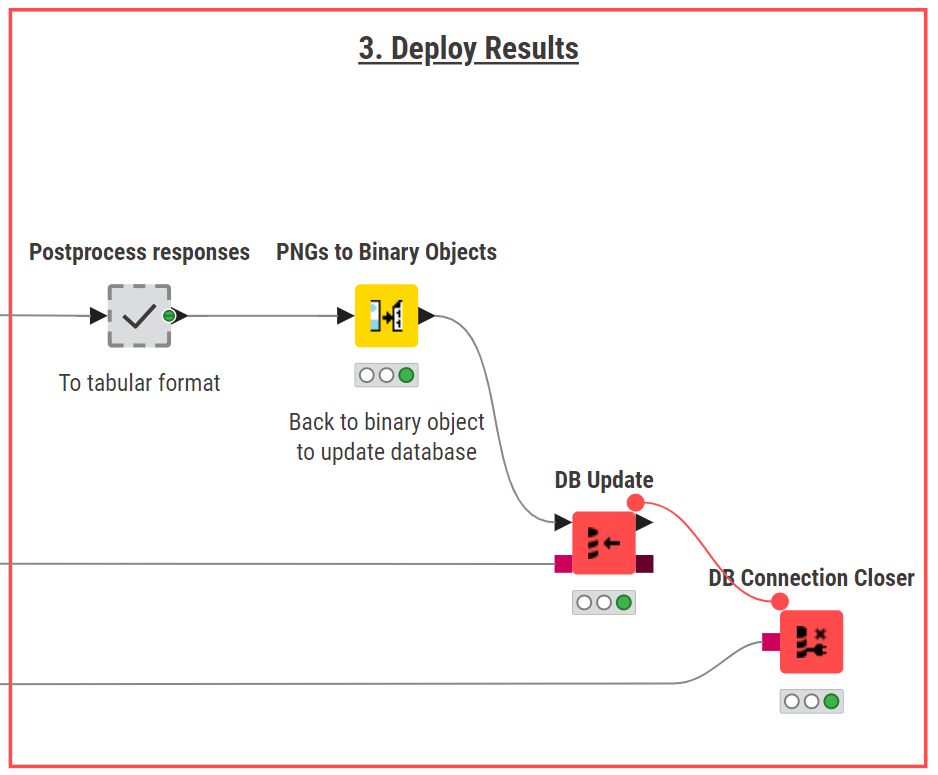

Step 3. Deploy results: Clean summaries and store them in a database

The final step involves cleaning and postprocessing the AI-generated summaries to structure them into a well-defined table.

Using a series of data transformation nodes, the workflow splits the summaries into a structured table with clearly defined columns for each attribute.
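The article does not list the individual transformation nodes, so the Python sketch below only illustrates the idea of turning a bullet-point summary into one row with a column per attribute. The parsing rules (stripping bullet markers and Markdown bold, splitting on the first colon, accepting both "Color" and "Colour") are assumptions about what the model output might look like.

```python
import re

ATTRIBUTES = ("Type", "Size", "Colour", "Style", "Brand")


def parse_summary(summary):
    """Split a bullet-point summary into a dict with one key per attribute."""
    row = {name: None for name in ATTRIBUTES}
    for line in summary.splitlines():
        # Drop leading bullet markers and whitespace, then Markdown bold.
        cleaned = re.sub(r"^[\s\-\*\u2022]+", "", line).replace("**", "").strip()
        key, sep, value = cleaned.partition(":")
        key = key.strip().capitalize()
        if key == "Color":
            key = "Colour"  # normalize the US spelling to the prompt's spelling
        if sep and key in row:
            row[key] = value.strip() or None
    return row
```

Attributes the model did not mention stay `None`, which keeps every row the same shape and makes gaps easy to spot in the final table.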

The product images are then converted back into binary objects, and the updated table is written into the SQLite database using the DB Update node. Finally, the DB Connection Closer closes the database connection.
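For comparison, this is roughly what the write-back step looks like in plain Python with `sqlite3`. It is a sketch under assumptions: the table `products` and the columns `id`, `type`, `size`, `colour`, `style` and `brand` are hypothetical names, and only the attribute columns are updated here, whereas the workflow also writes the images back as binary objects.

```python
import sqlite3


def write_summaries(db_path, rows, table="products"):
    """Update one database row per summary.

    Each item in `rows` is a dict with the keys id, Type, Size, Colour,
    Style and Brand. Table and column names are hypothetical placeholders.
    """
    sql = (
        f'UPDATE "{table}" SET type = :Type, size = :Size, colour = :Colour, '
        "style = :Style, brand = :Brand WHERE id = :id"
    )
    conn = sqlite3.connect(db_path)
    try:
        with conn:  # commits on success, rolls back if an error occurs
            conn.executemany(sql, rows)
    finally:
        # Mirrors the DB Connection Closer step.
        conn.close()
```

Named placeholders keep the values out of the SQL string, so attribute text returned by the model cannot alter the statement.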

The Result: Actionable product insights

This workflow produces a structured table containing concise summaries of product images, including key attributes such as type, size, color, style, and brand. The data is stored in the SQLite database, making it easily accessible for inventory management, e-commerce, or further analysis.

GenAI for summarization in KNIME

In this article from the Summarize with GenAI series, we showed how KNIME and GenAI can automate the summarization of product image galleries. By leveraging AI to extract and structure key product details, this workflow saves time and enhances productivity.

You learned how to:

- Fetch and process product images from an SQLite database

- Use a multimodal LLM, queried through the KNIME REST Client Extension, to summarize product features into a structured data table

- Store the cleaned and structured data back into the database

Download KNIME Analytics Platform and try out the workflow yourself!