Professionals in legal, compliance, and regulatory fields often deal with lengthy and complex EU regulations. Reviewing these documents manually is time-consuming and difficult to scale. In this article, we show how to build a KNIME workflow that uses large language models (LLMs) to summarize EU regulations and automatically distribute the insights in a well-formatted PDF report via email.

KNIME Analytics Platform is an open-source data science tool that allows you to build solutions using visual workflows, eliminating the need for coding expertise.

This article is part of the Summarize with GenAI blog series, which showcases a collection of KNIME workflows designed to access data from various sources (e.g., Box, Zendesk, Jira, Google Drive) and deliver concise, actionable summaries.

Here is a 1-minute video that gives you a quick overview of the workflow. You can download the example workflow here to follow along as we go through the tutorial.

Let’s get started.

Automate EU regulation summarization and distribution

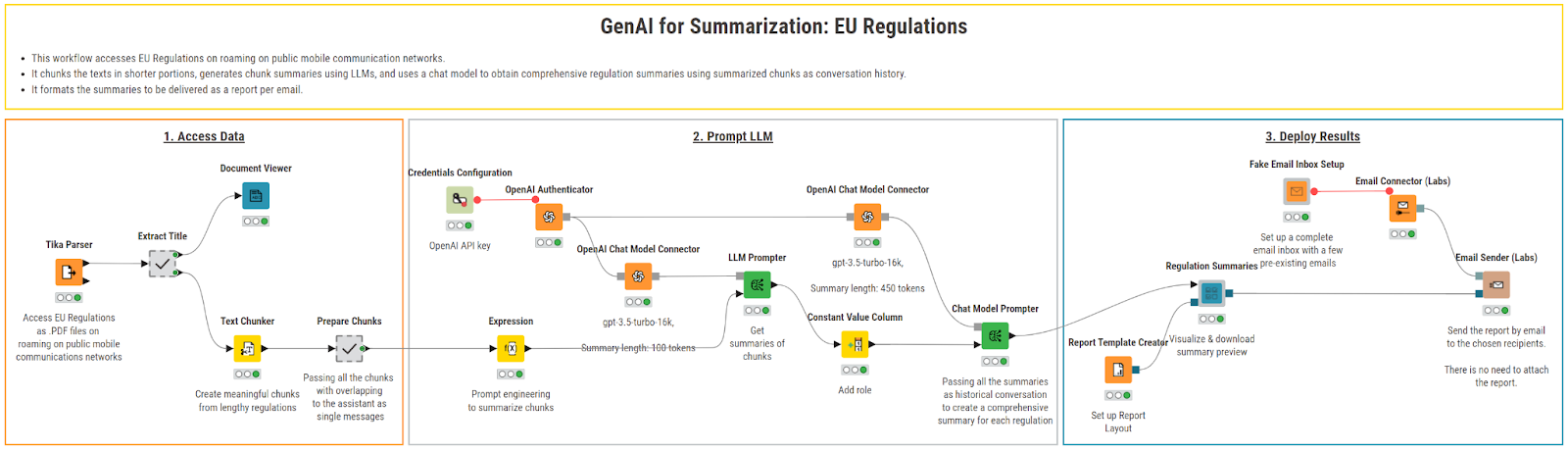

Our goal is to simplify the analysis of EU regulations by automating content extraction, summarization, and reporting. This enables professionals to quickly access structured summaries of complex regulations without manually sifting through lengthy texts.

We can do this in three steps:

- Access and parse EU regulations as PDF files

- Check texts and summarize them using an LLM

- Compile summaries into a PDF report and send it via email

This provides professionals with an efficient way to stay informed about relevant regulatory changes, without manually reviewing long documents.

Step 1. Access data: Import and chunk lengthy regulation texts

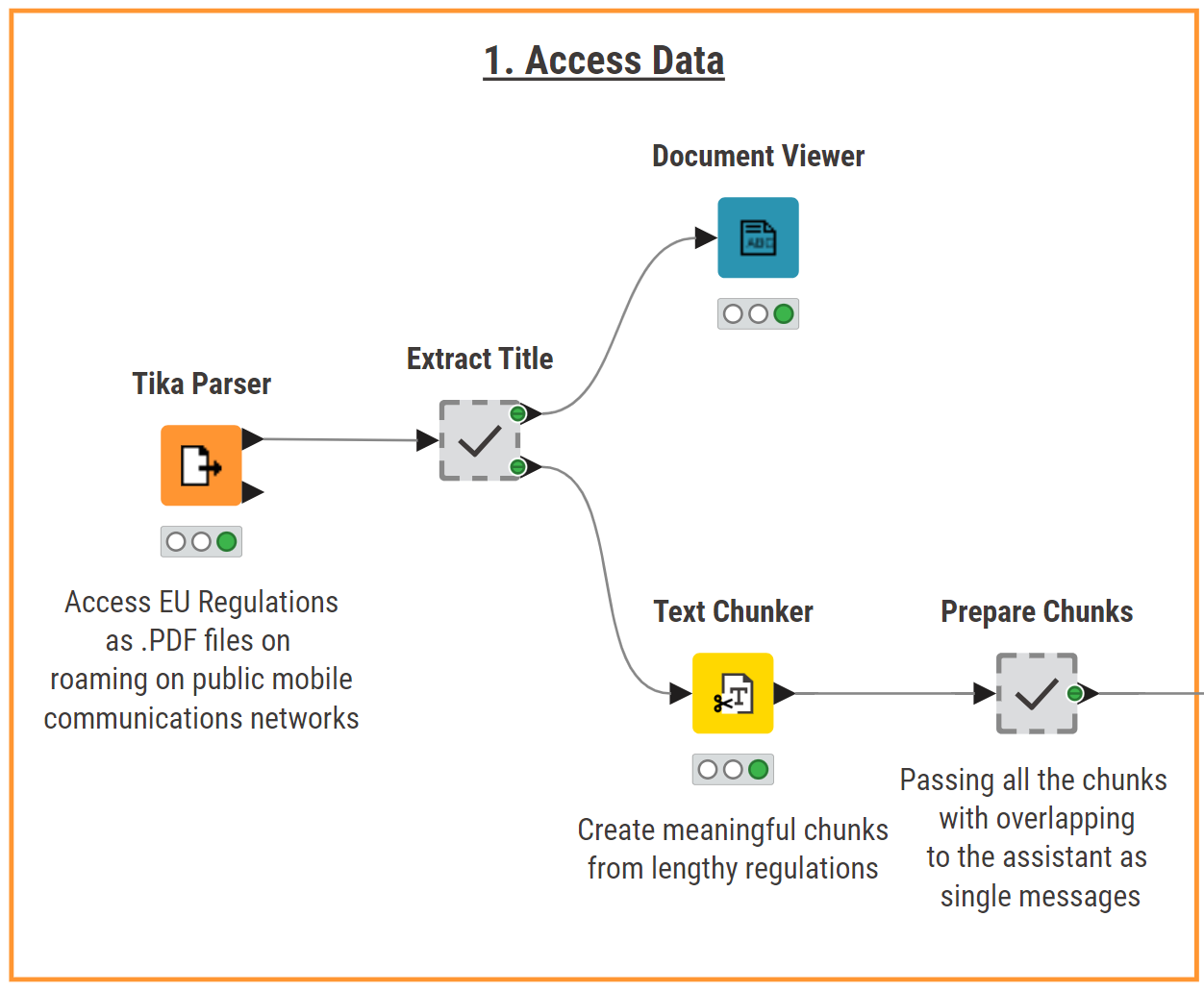

We begin by accessing and parsing two EU regulations on roaming on public mobile communication networks (2015/2120 and 2022/612), which are stored as PDF files. The Tika Parser node reads the PDF files and converts them into machine-readable text, extracting both the document content and its metadata.

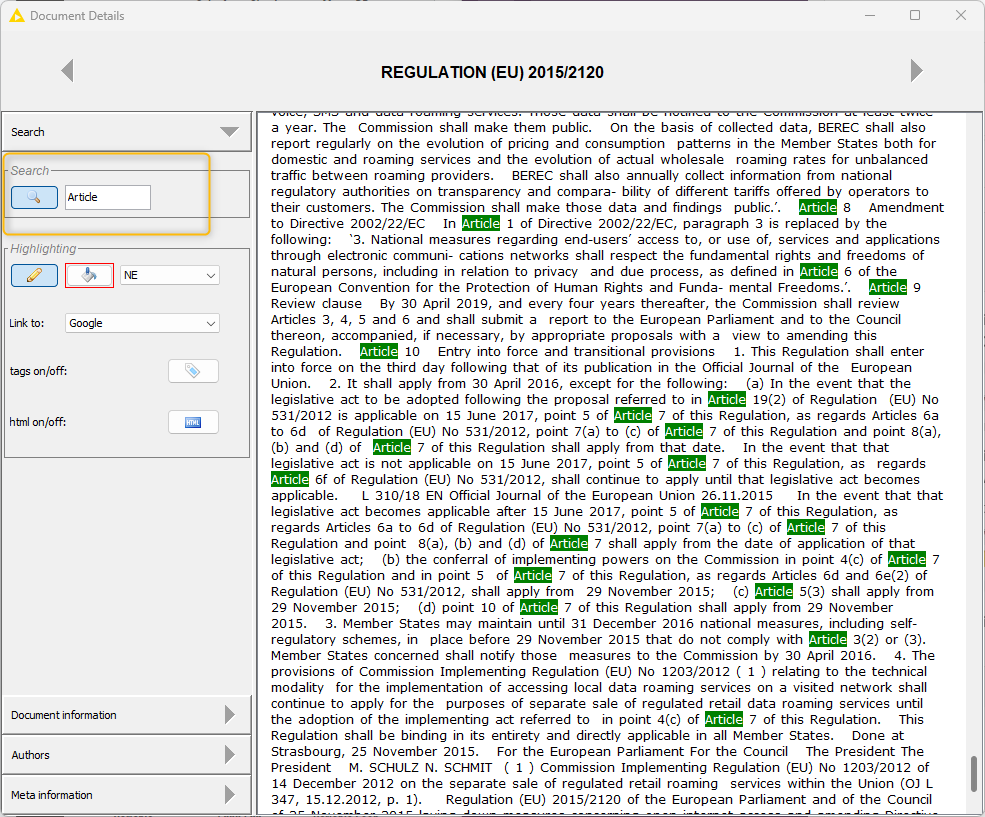

The “Extract Title” metanode isolates regulation names from the file paths and converts the text into a Document-type object. In this way, we can inspect the full text of each regulation using the Document Viewer node and also easily search for specific terms (e.g., the word “Article”).
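Outside KNIME, the two operations described above (deriving a regulation name from its file path and locating a term in the full text) can be sketched in a few lines of Python. This is a minimal sketch, assuming a hypothetical naming scheme in which each PDF is named after its regulation, such as `Regulation_EU_2015-2120.pdf`; the function names `extract_title` and `find_term` are illustrative, and the sketch does not reproduce KNIME's Document type.

```python
import re
from pathlib import PurePath


def extract_title(file_path: str) -> str:
    """Derive a regulation name from a file path (hypothetical naming scheme).

    Drops the directories and the .pdf extension, then turns underscores
    into spaces.
    """
    stem = PurePath(file_path).stem
    return stem.replace("_", " ")


def find_term(text: str, term: str) -> list[int]:
    """Return the start offset of every whole-word occurrence of `term`."""
    pattern = re.compile(r"\b" + re.escape(term) + r"\b")
    return [match.start() for match in pattern.finditer(text)]


if __name__ == "__main__":
    print(extract_title("/data/Regulation_EU_2015-2120.pdf"))  # Regulation EU 2015-2120
    print(find_term("Article 1 Subject matter. Article 2 Definitions.", "Article"))  # [0, 26]
```

Matching whole words means that a search for "Article" does not also hit "Articles"; drop the `\b` anchors if you want substring matches instead.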

Since the documents are long, we split them into smaller chunks to avoid exhausting the LLM’s context window.

The Text Chunker node divides the full text into overlapping segments, for example, 3,000 characters per chunk with 300 characters of overlap. Overlapping is important for maintaining context across boundaries and generating coherent summaries. Without overlaps, key details at chunk boundaries could be missed, leading to fragmented or misleading summaries.
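The overlap logic is easy to see in code. Below is a minimal character-based sketch in Python using the example values above (3,000 characters per chunk, 300 characters of overlap). It illustrates the idea only and is not the Text Chunker node's implementation, whose splitting rules may differ.

```python
def chunk_text(text: str, chunk_size: int = 3000, overlap: int = 300) -> list[str]:
    """Split `text` into fixed-size character chunks that overlap.

    Each chunk starts `chunk_size - overlap` characters after the previous one,
    so the last `overlap` characters of a chunk are repeated at the start of
    the next chunk.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this chunk already reaches the end of the text
    return chunks
```

With these defaults, a 7,000-character text yields three chunks of 3,000, 3,000, and 1,600 characters, and each consecutive pair shares 300 characters.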

Next, in the “Prepare Chunks” metanode, we format the text chunks into numbered inputs for each document. Each chunk is labeled (e.g., “Chunk 1: 26.11.2015 EN Official Journal of the European Union L 310/1 I (Legislative acts) REGULATIONS…”), so that each chunk is a self-contained message, ready for summarization in the next step.
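A minimal Python sketch of this labeling step, assuming the chunks of each regulation are held in a list keyed by regulation name (a hypothetical data layout; the metanode itself works on KNIME table rows):

```python
def prepare_chunks(documents: dict[str, list[str]]) -> dict[str, list[str]]:
    """Prefix every chunk with 'Chunk <n>: ', numbering from 1 within each document."""
    return {
        title: [f"Chunk {n}: {chunk}" for n, chunk in enumerate(chunks, start=1)]
        for title, chunks in documents.items()
    }
```

For example, `{"Regulation A": ["first", "second"]}` becomes `{"Regulation A": ["Chunk 1: first", "Chunk 2: second"]}`, and numbering restarts at 1 for the next regulation.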

Step 2. Prompt LLM: Summarize regulations with OpenAI’s GPT-3.5-turbo-16k

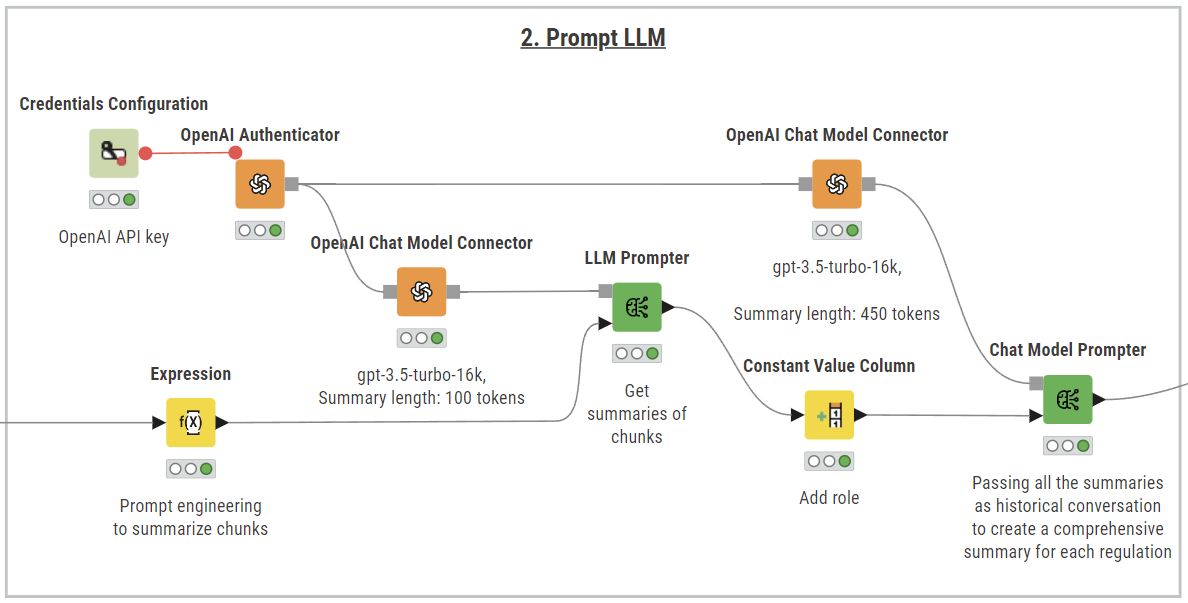

To summarize the regulations, we use the KNIME AI extension and select the LLM best suited to the task, balancing cost and performance. For example, OpenAI’s GPT-3.5-turbo-16k is a good option: its large context window lets us process longer texts, and it is inexpensive to use.
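To get a feel for what a 16k-token context window means for the chunk sizes used in Step 1, here is a back-of-the-envelope sketch in Python. It assumes the common rule of thumb of about four characters per token for English text, a context budget of 16,000 tokens, and 4,000 tokens reserved for instructions and the model's reply. All three numbers are assumptions; exact counts require the model's own tokenizer.

```python
CHARS_PER_TOKEN = 4  # rough rule of thumb for English text; real counts need a tokenizer


def estimate_tokens(num_chars: int) -> int:
    """Rough token estimate from a character count, rounded up."""
    return -(-num_chars // CHARS_PER_TOKEN)  # ceiling division


def max_chunks_per_request(context_tokens: int, chunk_chars: int, reserved_tokens: int) -> int:
    """How many chunks fit in one request after reserving room for the prompt and the answer."""
    available = context_tokens - reserved_tokens
    return max(available // estimate_tokens(chunk_chars), 0)
```

Under these assumptions, `estimate_tokens(3000)` gives 750 and `max_chunks_per_request(16_000, 3000, 4_000)` gives 16.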

To set up the connection, follow these steps:

- Input the OpenAI API key in the Credentials Configuration node

- Authenticate to the service with the OpenAI Authenticator node

- Use the OpenAI Chat Model Connector node to connect to the GPT-3.5-turbo-16k model
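For readers curious about what these three nodes take care of, the sketch below builds the equivalent raw HTTP request to OpenAI's Chat Completions endpoint with Python's standard library. It illustrates the idea under assumptions and is not what the KNIME nodes do internally: the key is read from a hypothetical `OPENAI_API_KEY` environment variable, the request is only constructed and never sent, and you may need to substitute whichever model your account can access.

```python
import json
import os
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(messages: list[dict], model: str = "gpt-3.5-turbo-16k") -> urllib.request.Request:
    """Build (but do not send) a Chat Completions request.

    The API key comes from the OPENAI_API_KEY environment variable (an
    assumption for this sketch) and is passed as a Bearer token.
    """
    api_key = os.environ.get("OPENAI_API_KEY", "")
    body = json.dumps({"model": model, "messages": messages}).encode("utf-8")
    return urllib.request.Request(
        API_URL,
        data=body,
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

Passing the returned object to `urllib.request.urlopen` would send it; in the workflow, the connector nodes handle this for you.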

Regardless of the model choice, prompting an LLM with lengthy texts requires careful workflow design to avoid exhausting the model’s context window. In particular, we use a two-stage summarization approach:

- Stage 1: Generate summaries of the individual text chunks.

- Stage 2: Use these summaries as context to generate a final, comprehensive summary of each regulation.

This method uses tokens efficiently and preserves context across the full document.
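The two-stage pattern (often called map-reduce summarization) can be sketched in Python independently of any particular LLM client. The `llm` callable is a hypothetical placeholder for whatever sends a prompt to the model, and the prompt wording is illustrative rather than the prompts used in this workflow. For simplicity, the sketch concatenates the chunk summaries into one prompt, whereas the workflow passes them to the model as conversation history in Stage 2.

```python
from typing import Callable

# `llm` stands in for any function that sends a prompt to a model and returns its reply.
LLM = Callable[[str], str]


def two_stage_summary(title: str, chunks: list[str], llm: LLM) -> str:
    """Summarize each chunk separately, then summarize the summaries."""
    # Stage 1: one call per chunk keeps every request well inside the context window.
    chunk_summaries = [
        llm(f"Summarize this excerpt of {title}:\n\n{chunk}") for chunk in chunks
    ]
    # Stage 2: the much shorter chunk summaries fit together in a single request.
    combined = "\n\n".join(chunk_summaries)
    return llm(f"Write a comprehensive summary of {title} from these partial summaries:\n\n{combined}")
```

Because `llm` is just a function, you can test the control flow with a stub before connecting a real model.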

Stage 1: Summarize individual text chunks

We create a parameterized prompt containing the regulation name and the overlapping text chunks, and use the LLM Prompter node to query the model. Here’s the prompt we used:

```
join("\n\n", "You're a legal expert in EU law. Summarize the following chunks for each regulation.

There is some overlap between the chunks to not lose the context:", $["Title"], $["Chunks"])
```
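To prototype the same prompt outside KNIME, the expression maps directly onto Python's `str.join`. A minimal sketch, assuming the title and the already-labeled chunks of one regulation are available as plain strings (`build_chunk_prompt` is a hypothetical helper name):

```python
INSTRUCTION = (
    "You're a legal expert in EU law. Summarize the following chunks for each regulation.\n\n"
    "There is some overlap between the chunks to not lose the context:"
)


def build_chunk_prompt(title: str, chunks: str) -> str:
    """Join instruction, regulation title, and labeled chunks with a blank line between each part."""
    return "\n\n".join([INSTRUCTION, title, chunks])
```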

By summarizing at the chunk level first, we ensure that each output is considerably shorter and can be processed more easily in Stage 2.

Stage 2: Generate a comprehensive summary of each regulation

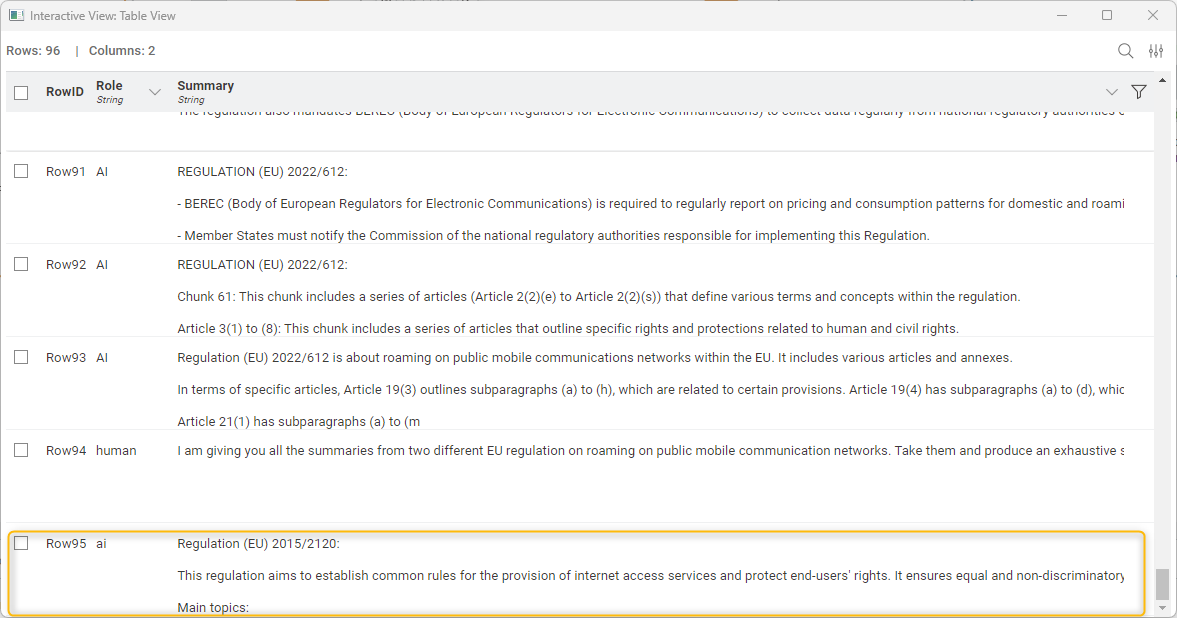

The second query uses the chunk-level summaries to create a final summary for each regulation. To do so, we must ensure that the chunk summaries are not processed independently of one another; instead, all chunk summaries should be fed to the model at once, preserving the context.

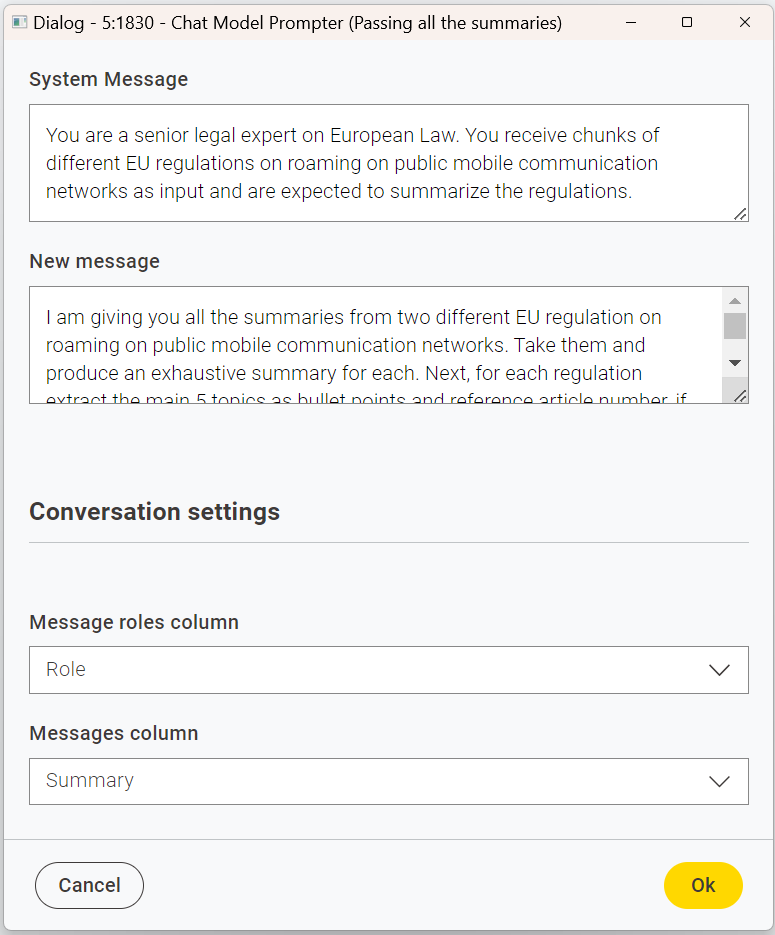

To do that, we use the Chat Model Prompter node, not to simulate a real chat, but to feed the chunk summaries to the model as prior “conversation history.” In practice, this means:

1. In the “Conversation settings”, select chunk summaries in the Message column.

2. In the “Configuration settings”, specify the roles in the interaction (human or AI). To do that, drag and drop the Constant Value Column node and add a column named “Role” with the value “AI”, identifying the AI as the source of the chunk summaries.

3. Add your final summarization prompts in the “System Message” and “New message” fields.

- New message: it provides direct instructions for the final summarization:

I am giving you all the summaries from two different EU regulations on roaming public mobile communication networks. Take them and produce an exhaustive summary for each. Next, for each regulation, extract the main five topics as bullet points and reference article numbers, if pertinent.

- System Message: it is similar to a prompt, but it is typically the first message given to the model. It describes how the model should behave, assigns it a persona, and clarifies the task (see best practices for prompt engineering):

You are a senior legal expert on European Law. You receive chunks of different EU regulations on roaming on public mobile communication networks as input and are expected to summarize the regulations.
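Conceptually, these settings add up to a single chat history. The sketch below shows that structure in the role/content message format used by OpenAI-style chat APIs. Mapping the “AI” role to `assistant` and the new message to `user` is our assumption about how the roles translate, not a description of the node's internals.

```python
def build_messages(system_message: str, chunk_summaries: list[str], new_message: str) -> list[dict]:
    """Assemble a chat history in the common role/content format.

    The chunk summaries are replayed as earlier assistant ("AI") turns, so the
    model sees all of them at once before the final instruction.
    """
    messages = [{"role": "system", "content": system_message}]
    messages += [{"role": "assistant", "content": s} for s in chunk_summaries]
    messages.append({"role": "user", "content": new_message})
    return messages
```

Replaying the summaries as prior turns keeps them in one request, which is what lets the model produce a summary that draws on the whole regulation.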

The output of the Chat Model Prompter is then post-processed to retain only the AI-generated summaries of the regulations.
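One way to express this filter, sketched in Python under the assumption that the output conversation is a list of (role, content) rows labeled “Human” and “AI” (hypothetical labels; check the actual values in your output table): everything up to the last human message is input, and the AI rows after it are the new summaries.

```python
def final_ai_replies(conversation: list[tuple[str, str]]) -> list[str]:
    """Keep only the AI messages that come after the last human message.

    Earlier AI rows are the chunk summaries that were fed in as history,
    so they are dropped.
    """
    last_human = max(
        (i for i, (role, _) in enumerate(conversation) if role == "Human"),
        default=-1,
    )
    return [content for role, content in conversation[last_human + 1:] if role == "AI"]
```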

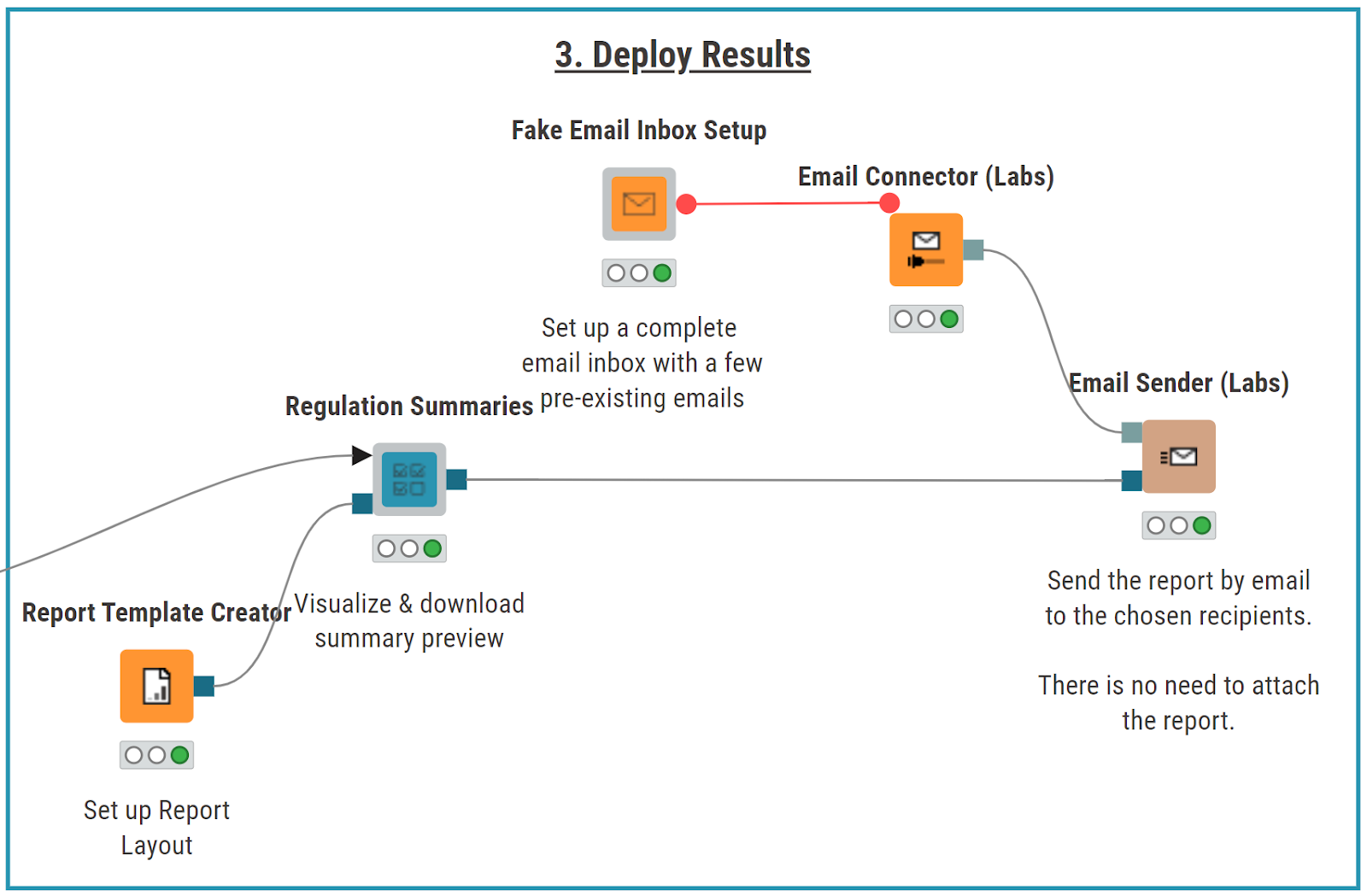

Step 3. Deploy results: Create a PDF report and distribute via e-mail

The final stage compiles the summaries into a report and distributes it automatically via email.

To create a static and properly formatted PDF report, we use the KNIME Reporting Extension:

- Use the Report Template Creator node to define page size and orientation

- In the “Regulation Summaries” component, display summaries using Table View and add hyperlinks to the full regulations for deeper reading

- Include headings and design a friendly layout to improve readability.
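The KNIME Reporting Extension handles layout and PDF export for you. If you need the same summary table in another tool, a minimal Python sketch of the content side (a heading plus one row per regulation, with a link to the full text) could look like this. It produces HTML only, so converting it to PDF would require a separate tool, and `render_summary_html` and the example URL are placeholders.

```python
from html import escape


def render_summary_html(rows: list[tuple[str, str, str]]) -> str:
    """Render (title, summary, url) rows as a simple HTML table with links."""
    body = "\n".join(
        '<tr><td><a href="{url}">{title}</a></td><td>{summary}</td></tr>'.format(
            url=escape(url, quote=True),
            title=escape(title),
            summary=escape(summary),
        )
        for title, summary, url in rows
    )
    return (
        "<h1>EU Regulation Summaries</h1>\n"
        "<table>\n<tr><th>Regulation</th><th>Summary</th></tr>\n"
        f"{body}\n</table>"
    )
```

Escaping every value keeps characters such as `<` or `&` in LLM output from breaking the markup.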

To distribute the report automatically via email:

- Use the Email Connector and Email Sender nodes

- Enable the blue port of the Email Sender node to attach the generated PDF report and send it to the desired recipients.
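Outside KNIME, the equivalent step with Python's standard library is to build a multipart message and attach the PDF bytes. A minimal sketch, with placeholder addresses, subject, and file name (all hypothetical):

```python
from email.message import EmailMessage


def build_report_email(sender: str, recipients: list[str], pdf_bytes: bytes,
                       filename: str = "eu_regulation_summaries.pdf") -> EmailMessage:
    """Create an email with the PDF report attached (the message is not sent)."""
    msg = EmailMessage()
    msg["Subject"] = "EU regulation summaries"
    msg["From"] = sender
    msg["To"] = ", ".join(recipients)
    msg.set_content("Please find attached the latest summaries of the EU regulations.")
    # Attaching raw bytes requires an explicit MIME type.
    msg.add_attachment(pdf_bytes, maintype="application", subtype="pdf", filename=filename)
    return msg
```

To actually send it, you would connect and authenticate to your mail server with `smtplib.SMTP` and call `send_message(msg)`; the server details depend on your environment.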

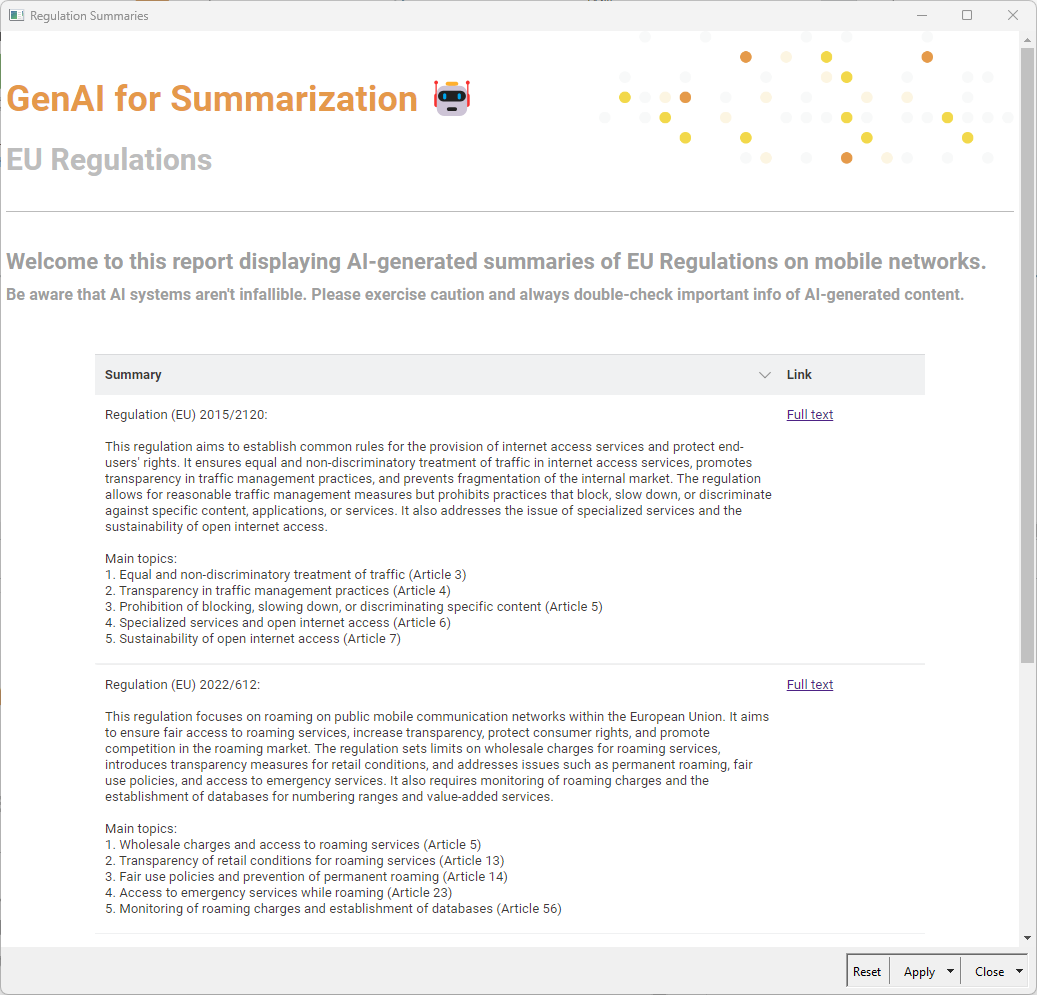

The result: Key insights from EU regulations delivered in your inbox

The final output is a professional and clearly structured PDF report containing concise summaries of the EU regulations, as well as numbered lists of the main topics.

This process provides fast document overviews, automates regulatory analysis, and reduces the time spent on manual review.

GenAI for Summarization in KNIME

In this article from the Summarize with GenAI series, we explored how KNIME and Generative AI can automate the summarization and distribution of complex and lengthy EU regulations. With KNIME, you can integrate LLMs into visual workflows without coding, streamline processes, reduce manual review, and improve efficiency across industries.

You learned how to:

- Access and parse EU regulation PDFs

- Summarize them using an LLM

- Deploy results into a static report and distribute it via email

Download KNIME Analytics Platform and try out the workflow yourself to see how KNIME can streamline your document summarization tasks.