Meet two text mining experts in today’s interview, which explores some of the common issues faced by data scientists in text analytics. Prof. Dursun Delen and Scott Fincher are the teachers of the [L4-TP] Introduction to Text Processing course regularly run by KNIME. This course is based on the KNIME Text Processing Extension.

Prof. Dursun Delen is the holder of the William S. Spears Endowed Chair in Business Administration and the Patterson Family Chair in Business Analytics, Director of Research for the Center for Health Systems Innovation, and Regents Professor of Management Science and Information Systems in the Spears School of Business at Oklahoma State University (OSU).

Scott Fincher is a data scientist on the Evangelism team at KNIME and one of the biggest contributors to the KNIME Forum.

The questions in this interview were all devised by Rosaria Silipo, who has been a researcher in applications of data science and machine learning for over a decade. She is Head of Evangelism at KNIME.

Text mining is experiencing a surge in popularity, mainly due to the development of more advanced chatbots, advances in deep learning architectures applied to free text generation, and the abundance of text data generated every day by web applications, e-commerce, and social media.

In this interview, we would like to dig deeper into some common problems that data scientists face when analyzing text documents, e.g., blending data sources, language-specific processing, minimum amounts of data, and other commonly posed questions around text mining techniques.

Collect Data for a Text Mining Project

Rosaria: I would like to start from the beginning of all data science projects. Where can I get the data for a text mining project? In particular, how would you proceed if the information is not contained in one location only, but distributed across many websites and blog posts?

Scott: There are many repositories for text data, often organized around specific topics. One great data repository for beginners is probably Kaggle. There are a number of datasets in there that can be used to take the first steps in text mining.

Now for the second question. Depending on where your data is located, you have several options for bringing them into a KNIME workflow.

For flat files, you can use the File Reader node as a start for individual files, and the Tika Parser node for reading large groups of documents - for example if you have folders full of Word or PDF files. The Tika Parser node, especially, is very flexible and can read a large variety of data files and formats.

If your data is stored in a database, KNIME Analytics Platform has nodes to access most of them via the Database Extension.

You can also access data stored on websites using the Webpage Retriever node, or via a REST API with the GET Request node. There are even nodes for accessing tweets directly from the Twitter API. One of the strengths of KNIME Analytics Platform is its ability to pull data in and blend them from a wide variety of sources.

Rosaria: Do you have examples for grabbing data, for example from PDF and DOCX files or from web scraping?

Scott: Sure! We have several workflows available to help you get started. As an example, we have a workflow that demonstrates accessing data from both local MS Word files and remote web pages, and how you might combine the two. It’s available on the KNIME Hub as “Will they blend? MS Words meets Web Crawling”, along with lots of other examples. The KNIME Hub is a place to access workflows, components, and extensions built by both KNIME and the wider user community. You can search on keywords for particular use cases, algorithms, or whatever is of interest to you. Maybe the best part is that, if you come up with a really great solution that you think would benefit everyone, you can upload it yourself and share it with the community!

Rosaria: And what about connecting to repositories on the cloud, like S3?

Scott: We also have several different nodes available to help you easily access cloud resources, whether you prefer Amazon, Azure, or Google Cloud. Because accessing different sources is such a common need, we recently updated and published a collection of blog posts in the Will They Blend? booklet on our KNIME Press site. This book is freely available, and focuses on interesting ways to combine all kinds of datasets from a variety of sources, with plenty of example workflows for you to download and explore.

Text Processing and Dictionaries

Rosaria: Let’s move on to the next step in a text mining project: text processing. What does “tokenization” refer to?

Dursun: Tokenization is the process of separating a textual document into its low-level units, such as words, numbers, characters, etc.


Rosaria: What tokenizer should I use then?

Dursun: The basic tokenizer algorithms are available in the Strings to Document node (OpenNLP English Word Tokenizer, OpenNLP WhiteSpace Tokenizer, Stanford NLP Tokenizer, etc.). Some of these simple tokenizers are specific to English, while others are language-agnostic and split on white space. More sophisticated tokenization algorithms are available only for some languages. My recommendation is to use the basic ones if nothing else is available.
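To make the difference between a white-space tokenizer and a word tokenizer concrete, here is a minimal Python sketch. It is only an illustration, not the OpenNLP or Stanford implementation; the regular expression `WORD_PATTERN` is our own assumption, chosen to keep words with internal hyphens or apostrophes together and to emit punctuation as separate tokens.

```python
import re
from typing import List

def whitespace_tokenize(text: str) -> List[str]:
    # Language-agnostic: split only on runs of white space,
    # so punctuation stays attached to the neighboring word
    return text.split()

# Assumed pattern: a word (optionally joined by - or ') or a single punctuation mark
WORD_PATTERN = re.compile(r"\w+(?:[-']\w+)*|[^\w\s]")

def word_tokenize(text: str) -> List[str]:
    # Words and punctuation marks become separate tokens
    return WORD_PATTERN.findall(text)

sentence = "Text mining isn't hard, is it?"
print(whitespace_tokenize(sentence))
# prints ['Text', 'mining', "isn't", 'hard,', 'is', 'it?']
print(word_tokenize(sentence))
# prints ['Text', 'mining', "isn't", 'hard', ',', 'is', 'it', '?']
```

Note that this word tokenizer keeps hyphenated compounds such as `state-of-the-art` as a single token; whether that is desirable depends on the task, which is one reason the tokenizer choice matters downstream.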

Rosaria: Is there a node that can remove punctuation characters?

Scott: Yes - it’s the Punctuation Erasure node. This is one of the easiest nodes to use in the KNIME Textprocessing extension because there’s almost no configuration for it - you just apply it to your documents and continue with your processing. You may find sometimes that you still have hyphens, for example, in some of your tokenized words even after punctuation removal - if that’s the case, you may need to revisit your tokenizer algorithm selection.
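For readers who want to see what punctuation erasure amounts to, here is a rough Python sketch. It assumes that "punctuation" means any character in a Unicode punctuation category (`P*`); the KNIME node's exact character set may differ, and `erase_punctuation` and `erase_punctuation_all` are hypothetical helper names.

```python
import unicodedata
from typing import List

def erase_punctuation(token: str) -> str:
    # Keep only characters whose Unicode category does not start with "P"
    # (Pc, Pd, Ps, Pe, Pi, Pf, Po are the punctuation categories)
    return "".join(ch for ch in token if not unicodedata.category(ch).startswith("P"))

def erase_punctuation_all(tokens: List[str]) -> List[str]:
    # Tokens that consisted only of punctuation become empty and are dropped
    cleaned = (erase_punctuation(t) for t in tokens)
    return [t for t in cleaned if t]

print(erase_punctuation_all(["hard", ",", "isn't", "state-of-the-art", "$5"]))
# prints ['hard', 'isnt', 'stateoftheart', '$5']
```

Currency signs such as `$` belong to a symbol category (`Sc`), not a punctuation category, so this sketch keeps them.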

Rosaria: How do you create a dictionary, like for example a stop word dictionary?

Scott: The Stop Word Filter node comes with its own internal dictionary for several languages, but if you want to provide your own, that’s no problem. Just create a table consisting of a single column, with one stop word per row, and feed that into the optional input port of the node. You can use this same approach to create custom dictionaries for other nodes that might need them - for example, the Dictionary Tagger node.
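The same idea as a minimal Python sketch: a single-column table with one stop word per row (here a CSV string) becomes a lookup set used to filter tokens. The table contents and the function names `load_stop_words` and `filter_stop_words` are illustrative assumptions, not part of the KNIME node.

```python
import csv
import io
from typing import List, Set

def load_stop_words(csv_text: str) -> Set[str]:
    # One stop word per row in a single column; blank rows are ignored
    reader = csv.reader(io.StringIO(csv_text))
    return {row[0].strip().lower() for row in reader if row and row[0].strip()}

def filter_stop_words(tokens: List[str], stop_words: Set[str]) -> List[str]:
    # Case-insensitive match against the custom dictionary
    return [t for t in tokens if t.lower() not in stop_words]

custom = load_stop_words("the\na\nof\n")
print(filter_stop_words(["The", "state", "of", "the", "art"], custom))
# prints ['state', 'art']
```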

Text Mining Examples

Rosaria: This is a classic question: What are the most common use cases requiring text mining?

Dursun: Probably the most popular use cases for text mining nowadays are Sentiment Analysis and Topic Detection. Using text mining to extract knowledge from social media (tweets) or from customer reviews of products and services is very popular. Also of interest is the classification of documents - this is similar to the supervised version of sentiment analysis, but the classes are not limited to degrees of positive and negative feeling. One area where text mining is showing up in academic circles is Literature Mining. Here, the idea is to discover latent topics in a collection of papers and then look at their dominance over time and at their relationship to journals and disciplines.

Rosaria: Can you recommend a few use cases for beginners?

Scott: Sure! Sentiment analysis is a good starting point. Conceptually it’s fairly easy to grasp, but at the same time it can be treated with different degrees of complexity: running a lexicon-based analysis, implementing a classic supervised machine learning approach, or, for more experienced users, training a deep learning network. Examples of all three approaches are available on the KNIME Hub; they all analyze IMDB movie reviews and try to classify them as positive or negative.

Another use case that could be approached by a beginner is topic extraction, maybe with the help of the LDA algorithm. Here is an example from the KNIME Hub titled “Topic Extraction”, using the Parallel LDA node to mine topics from Tripadvisor restaurant reviews.

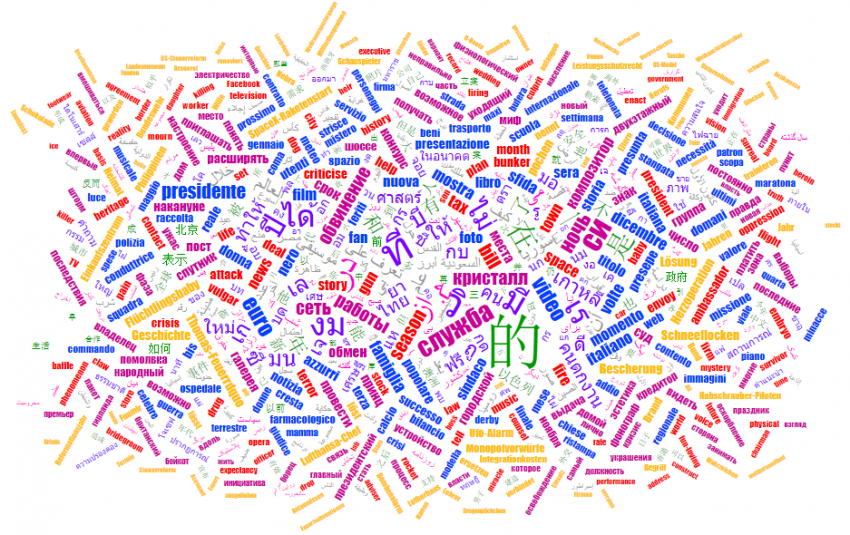

If you’re interested in importing data from Twitter and doing some exploratory visualization using a word cloud, this other simple workflow, titled “Interactive Tag Cloud from Twitter Search”, can show you how that might be done.


Rosaria: Let’s take topic detection, then. What is the most common algorithm used to extract topics (unsupervised) from a text?

Dursun: This is probably LDA, where LDA stands for Latent Dirichlet Allocation. LDA produces a pre-determined number of latent (or hidden) topics from a collection of documents. The output is two-fold: first, a distribution of topics that defines a document, and second, a distribution of words/terms that defines a topic. The Topic Extractor (Parallel LDA) node produces both of these outputs as separate tables.
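For readers curious about what happens inside, below is a compact Python sketch of LDA trained with collapsed Gibbs sampling, one common inference method for this model. It is not necessarily how the Topic Extractor (Parallel LDA) node is implemented, and the values of `alpha`, `beta`, and `iterations` are illustrative assumptions. It returns the two outputs described above: a topic distribution per document (`theta`) and a word distribution per topic (`phi`).

```python
import random
from typing import Dict, List, Tuple

def lda_gibbs(docs: List[List[str]], num_topics: int, alpha: float = 0.1,
              beta: float = 0.01, iterations: int = 200,
              seed: int = 0) -> Tuple[List[List[float]], List[Dict[str, float]]]:
    rng = random.Random(seed)
    vocab = sorted({w for doc in docs for w in doc})
    word_id = {w: i for i, w in enumerate(vocab)}
    V, K = len(vocab), num_topics
    # Count tables: document-topic, topic-word, and total tokens per topic
    n_dk = [[0] * K for _ in docs]
    n_kw = [[0] * V for _ in range(K)]
    n_k = [0] * K
    z = []  # current topic assignment of every token
    for d, doc in enumerate(docs):
        z_d = []
        for w in doc:
            k = rng.randrange(K)  # random initial topic
            z_d.append(k)
            n_dk[d][k] += 1
            n_kw[k][word_id[w]] += 1
            n_k[k] += 1
        z.append(z_d)
    for _ in range(iterations):
        for d, doc in enumerate(docs):
            for i, w in enumerate(doc):
                v, k = word_id[w], z[d][i]
                # Remove the token's current assignment from the counts
                n_dk[d][k] -= 1
                n_kw[k][v] -= 1
                n_k[k] -= 1
                # Full conditional p(z = t | all other assignments), up to a constant
                weights = [(n_dk[d][t] + alpha) * (n_kw[t][v] + beta) / (n_k[t] + V * beta)
                           for t in range(K)]
                k = rng.choices(range(K), weights=weights)[0]
                z[d][i] = k
                n_dk[d][k] += 1
                n_kw[k][v] += 1
                n_k[k] += 1
    # Smoothed estimates of the two LDA outputs
    theta = [[(n_dk[d][t] + alpha) / (len(doc) + K * alpha) for t in range(K)]
             for d, doc in enumerate(docs)]
    phi = [{vocab[v]: (n_kw[t][v] + beta) / (n_k[t] + V * beta) for v in range(V)}
           for t in range(K)]
    return theta, phi

docs = [
    "pasta pizza sauce".split(),
    "pizza pasta wine".split(),
    "film actor plot".split(),
    "actor film director".split(),
]
theta, phi = lda_gibbs(docs, num_topics=2, iterations=50)
```

Each row of `theta` and each dictionary in `phi` sums to 1; on a real corpus you would use far more documents and tune the number of topics.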

Rosaria: Talking about LDA, here’s a question that comes up often. How large does the document collection need to be to apply LDA?

Dursun: The short answer is “large enough but not too large”. If computational power is not an issue, and the documents come from a representative application domain, then larger is better. As a rule of thumb, in text mining and in topic detection, large quantities of smaller documents tend to produce more reliable and consistent results.

Rosaria: Are there alternatives to LDA, in case LDA has limited success?

Dursun: Until recently, LDA was the latest and greatest of all topic detection methods. Nowadays, newer methods such as Word2Vec and other word embeddings, as well as deep learning (using RNNs/LSTMs), take text mining and topic modeling to a new dimension by including the contextual/positional information that comes from the sequential nature of language. About 15 years ago, I used simple clustering on raw term-document matrices (TDM) and then repeated it on SVD (Singular Value Decomposition) values for literature mining. It produced pretty good results for that time, and the study was published in a high-impact academic journal.
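A minimal Python sketch of the first part of that approach, clustering documents directly on their term vectors, might look like the following. The cosine-similarity k-means and the deterministic farthest-first initialization are our own assumptions for a compact, reproducible example; the SVD step is omitted to keep it dependency-free.

```python
import math
from collections import Counter
from typing import List

def normalize(v: List[float]) -> List[float]:
    # Scale to unit length so that a dot product equals cosine similarity
    norm = math.sqrt(sum(x * x for x in v)) or 1.0
    return [x / norm for x in v]

def unit_tf_vectors(docs: List[List[str]]) -> List[List[float]]:
    # Term-frequency vectors over a shared vocabulary (rows of a document-term matrix)
    vocab = sorted({w for d in docs for w in d})
    return [normalize([float(Counter(d)[w]) for w in vocab]) for d in docs]

def dot(a: List[float], b: List[float]) -> float:
    return sum(x * y for x, y in zip(a, b))

def cosine_kmeans(vecs: List[List[float]], k: int, iterations: int = 20) -> List[int]:
    # Farthest-first init: start from document 0, then repeatedly add the
    # document least similar to every centroid chosen so far
    centroids = [list(vecs[0])]
    while len(centroids) < k:
        centroids.append(list(min(vecs, key=lambda v: max(dot(v, c) for c in centroids))))
    labels = [0] * len(vecs)
    for _ in range(iterations):
        # Assign each document to its most similar centroid
        labels = [max(range(k), key=lambda c: dot(v, centroids[c])) for v in vecs]
        # Move each centroid to the normalized mean of its members
        for c in range(k):
            members = [v for v, lab in zip(vecs, labels) if lab == c]
            if members:
                centroids[c] = normalize([sum(col) / len(members) for col in zip(*members)])
    return labels

docs = ["pizza pasta food".split(), "pasta food".split(),
        "movie actor film".split(), "film actor".split()]
print(cosine_kmeans(unit_tf_vectors(docs), k=2))
# prints [0, 0, 1, 1]
```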

Rosaria: Let’s move to other use cases. For example, what would be an approach to mining contract language to understand terms and conditions?

Dursun: I have not done anything in the domain of contracting. I have heard of interesting applications in patent mining, text mining of law/case records, literature mining in genomics/biomedicine, and in COVID-19 research. Within 3 months, almost 200K articles on COVID-19 were published. You cannot manually process such a large collection of articles. With a few colleagues of mine, we are text mining this large corpus to extract/discover meta-information.

Rosaria: Any advice for classification of an email corpus?

Dursun: Email was the first practical application for text mining. Spam filtering, subject and priority detection, topic based classification and labeling of email have been the main applications. There were also more sophisticated attempts, where text-based deception detection is used to discern truthful emails from fraudulent ones.

Rosaria: What is the best approach to interpret the extracted topics? For example, by checking the movie topics, can I extract their genre?

Scott: This is the art part of text mining and topic modeling. The common practice is to look at the most dominant (or highest weighted) words in the word distribution of a topic and label the topic with a meaningful description. Here, the knowledge of domain experts is key to deriving the high-level concept from a few dominant keywords.
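The mechanical half of this step, surfacing the highest weighted words per topic, can be sketched in a few lines of Python; the labeling itself stays a human judgment. The topic-word weights below are hypothetical, standing in for the word distribution output of an LDA run on movie reviews.

```python
from typing import Dict, List

def top_words(topic_word: List[Dict[str, float]], n: int = 3) -> List[List[str]]:
    # Sort each topic's words by descending weight (ties broken alphabetically)
    return [
        [w for w, _ in sorted(dist.items(), key=lambda kv: (-kv[1], kv[0]))[:n]]
        for dist in topic_word
    ]

# Hypothetical topic-word weights
topics = [
    {"explosion": 0.30, "chase": 0.25, "hero": 0.20, "love": 0.05},
    {"love": 0.35, "wedding": 0.25, "romance": 0.20, "chase": 0.02},
]
print(top_words(topics))
# prints [['explosion', 'chase', 'hero'], ['love', 'wedding', 'romance']]
```

A domain expert might then label the first topic "action" and the second "romance", which is how a genre could emerge from the topics.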

Rosaria: Let’s move onto sentiment analysis. What are the classic approaches for sentiment analysis? And what are the advantages/disadvantages for each one of them?

Scott: The simplest method is one we might call the lexicon-based approach, where we take lists of positive and negative words and use them to tag the words in our documents. Then we can count the tagged words to calculate an index score that indicates how negative or positive the documents are in a relative sense. The advantage of this approach is that it does not require labeled (or supervised) data ahead of time - we can simply apply our lists as tags and calculate. The downside is that, because of its simplicity, it doesn’t always produce the most accurate results.
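A minimal Python sketch of the lexicon-based approach follows. The two word lists are tiny illustrative placeholders (real projects use curated sentiment lexicons), and the index formula `(pos - neg) / (pos + neg)` is one common choice we assume here, not necessarily the one used in the KNIME example.

```python
from typing import Iterable

# Illustrative placeholder lexicons
POSITIVE = {"great", "good", "excellent", "enjoyable", "brilliant"}
NEGATIVE = {"bad", "boring", "awful", "poor", "dull"}

def sentiment_score(tokens: Iterable[str]) -> float:
    """Return (pos - neg) / (pos + neg) in [-1, 1]; 0.0 if no lexicon word occurs."""
    pos = neg = 0
    for t in tokens:
        t = t.lower()
        if t in POSITIVE:
            pos += 1
        elif t in NEGATIVE:
            neg += 1
    return 0.0 if pos + neg == 0 else (pos - neg) / (pos + neg)

review = "A great movie with a boring ending but brilliant acting"
print(round(sentiment_score(review.split()), 2))
# prints 0.33
```

The simplicity shows here too: a phrase like "not great" would still count as positive, which is one reason this approach can be less accurate.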

If we do have labeled data, we can use a machine learning approach, where we preprocess our documents and transform them into a numerical representation - a term-document matrix. Once we have this, any of the standard Machine Learning (ML) algorithms can be used for classification, like decision trees or XGBoost. This method often performs relatively well, so long as labeled data is available.
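Here is a minimal Python sketch of that numerical representation. It builds one row per document and one column per term (raw counts), i.e. the transpose of a term-document matrix, because most classifiers expect documents as rows. Using raw counts rather than binary or TF-IDF weights is an assumption for brevity; each row, together with its class label, is what a decision tree or XGBoost model would be trained on.

```python
from collections import Counter
from typing import List, Tuple

def document_term_matrix(docs: List[List[str]]) -> Tuple[List[str], List[List[int]]]:
    # Shared, sorted vocabulary so every document maps to the same columns
    vocab = sorted({t for doc in docs for t in doc})
    rows = []
    for doc in docs:
        counts = Counter(doc)
        # One row per document, one column per term (raw counts)
        rows.append([counts[t] for t in vocab])
    return vocab, rows

vocab, X = document_term_matrix([["great", "movie"], ["boring", "movie", "movie"]])
print(vocab)  # prints ['boring', 'great', 'movie']
print(X)      # prints [[0, 1, 1], [1, 0, 2]]
```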

Given enough computing resources, we could even take a deep learning approach to classification, by building out a multi-layer neural network. This requires some dedicated processing of the data and fairly large datasets to perform well. In some cases, provided your project meets the criteria, this can perform exceptionally well, but it will definitely take longer to set up and execute than a standard ML approach.

Examples of all three of these methods for text classification are available for you to explore on the KNIME Hub.

- Lexicon Based Approach for Sentiment Analysis

- Machine Learning Approaches: Decision Trees

- Deep Learning Approaches: multi-layer neural network

Rosaria: The evergreen question. What to choose: statistical traditional methods or deep learning methods? How can we choose the best method?

Scott: Tough one! You never know until you try - each dataset and corpus is different. In practice, you often find that the extra data pre-processing and computational expense required for deep learning don’t justify the marginal improvement in model performance. But if you really do need those few extra percentage points of accuracy and like a challenge, give it a shot!

Languages and Specific Domains

Rosaria: Some frequently asked questions concern applications in specific domains or in specific languages. Here is one such question. Most work in text mining is for the English language. If the text is in Spanish, would LDA still work? Or do I have to translate it first?

Dursun: I have never used text mining on languages other than English. That said, text mining is mainly syntactic, that is, it does not capture the semantic meaning of words or sentences. So if the right enrichment and preprocessing nodes are applied to identify the value-added words and to exclude language-specific stop words and punctuation, LDA should work fine.

Rosaria: How can you best apply text mining practices in healthcare/electronic health records for better patient-care?

Dursun: I have been conducting analytics research in the fields of healthcare/medicine for over 15 years. My first highly cited paper in this domain was published in 2005 (Predicting breast cancer survivability: a comparison of three data mining methods). Although I have used all kinds of healthcare/EHR data (publicly available and proprietary, including over 70M unique patient records obtained from Cerner), I have never used textual data, such as doctor or nurse notes. The main reason is that this type of data is very hard to sanitize of private patient information (as required by HIPAA and other government regulations). One exception is a new project that I am working on with a medical doctor from NY. We are mining the doctors’ and nurses’ clinical notes from emergency room visits to identify patterns that accurately predict which patients are likely to come back to the ER within 72 hours (similar to readmission, but commonly called “bounceback”).

Rosaria: Is there an easy way to do domain specific named entity recognition?

Dursun: Ultimately, you will need a domain-specific dictionary.
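To illustrate how a domain dictionary drives entity recognition, in the spirit of the Dictionary Tagger node mentioned earlier, here is a minimal Python sketch using greedy longest-match lookup. The clinical dictionary, the entity type names, and `max_len` are hypothetical; this is not the node's implementation.

```python
from typing import Dict, List, Tuple

def tag_entities(tokens: List[str], dictionary: Dict[str, str],
                 max_len: int = 3) -> List[Tuple[str, str, int]]:
    """Greedy longest-match lookup of (possibly multi-word) dictionary entries.

    Returns (matched phrase, entity type, start token index) triples.
    """
    tags = []
    i = 0
    while i < len(tokens):
        # Try the longest candidate phrase first, then shorter ones
        for n in range(min(max_len, len(tokens) - i), 0, -1):
            phrase = " ".join(tokens[i:i + n]).lower()
            if phrase in dictionary:
                tags.append((phrase, dictionary[phrase], i))
                i += n
                break
        else:
            i += 1  # no entry starts here; move to the next token
    return tags

# Hypothetical clinical dictionary; a real one would come from domain experts
clinical = {"myocardial infarction": "DISEASE", "aspirin": "DRUG", "troponin": "LAB_TEST"}
note = "Patient with suspected myocardial infarction was given aspirin".split()
print(tag_entities(note, clinical))
# prints [('myocardial infarction', 'DISEASE', 3), ('aspirin', 'DRUG', 7)]
```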

Rosaria: How do you address NLP when your dataset contains many languages?

Dursun: You could perhaps translate the texts first, so that the whole collection of textual content is in the same language (most likely English), and then mine it using NLP functions. Or, you can just use it as is, since text mining and most NLP tasks are syntactic in nature. This means that they are agnostic to the semantics of the language. However, interpreting the output may then be harder, because it will include terms and concepts from multiple languages. In our COVID-19 literature mining project, we have been facing this exact problem. Some literature was published in languages other than English. We tried both approaches and finally settled on converting all of the textual content to English.

Deployment of Text Mining Workflows

Rosaria: Is there a way in KNIME for quick and safe deployment of the text mining workflows?

Scott: KNIME Integrated Deployment allows you to automatically create production workflows safely from the prototype workflow. Essentially, Integrated Deployment captures those portions of the prototype workflow needed for deployment, and these captured portions are automatically replicated and sewn back together to create the deployment workflow. In this way, the prototype workflow and the deployment workflow are always in sync, and you don’t have to manually copy-and-paste nodes from one workflow to the other. For more information, you can check out our collection of articles about Integrated Deployment, along with the corresponding examples on the KNIME Hub.

Rosaria: Is it possible to export workflows as REST services or web-applications?

Scott: Once your deployment workflow is ready, you can run it standalone on KNIME Server either manually, or via scheduling or triggering options. You can even call KNIME workflows from other external applications using the KNIME Server REST API.

Rosaria: How stable is a text mining model over time?

Dursun: Well, the world changes, so at some point the model will not fit anymore. Some models may work fine for a few months or even years, and then they start to falter. Take the case of analyzing movie-related data and reviews to predict the financial success of a movie project as an investment: the average movie costs $200M to make, and the percentage of failures is high (65% of movies lose money). Here model accuracy is important, hence the models should be built with the latest data and ML techniques.

Rosaria: How can I adapt the model then to the new world?

Scott: With KNIME software you have options for model monitoring, so that, for example if you see a model dropping below an accuracy threshold you’ve set, you could trigger automated retraining to try to improve performance. You can also set up a champion/challenger paradigm, using different algorithms or hyperparameters, to test a variety of models against your current best performer to see if it can be beaten. Sometimes, no amount of automated retraining will improve performance sufficiently, so you can also set up alerts to let your data scientist know when manual intervention is needed. All of this is done the usual KNIME way - with workflows and components!
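The decision logic behind such monitoring can be sketched in plain Python. This is not how KNIME implements it (there it is built from workflows and components); the `Model` class, the `review_models` function, the threshold, and the rule of alerting when no candidate clears the threshold are all hypothetical choices for illustration. The `challengers` list could include, for example, a retrained copy of the champion.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence, Tuple

@dataclass
class Model:
    name: str
    predict: Callable[[str], str]

def accuracy(model: Model, texts: Sequence[str], labels: Sequence[str]) -> float:
    hits = sum(1 for t, y in zip(texts, labels) if model.predict(t) == y)
    return hits / len(labels)

def review_models(champion: Model, challengers: List[Model],
                  texts: Sequence[str], labels: Sequence[str],
                  threshold: float) -> Tuple[Model, str]:
    """Return the model to keep in production and the action taken."""
    best, best_acc = champion, accuracy(champion, texts, labels)
    # Champion/challenger: a challenger must strictly beat the current best
    for challenger in challengers:
        acc = accuracy(challenger, texts, labels)
        if acc > best_acc:
            best, best_acc = challenger, acc
    if best_acc < threshold:
        # No candidate clears the bar: keep the champion and alert a data scientist
        return champion, "alert"
    if best is champion:
        return champion, "keep"
    return best, "promote"

# Fresh labeled data and two toy models
texts = ["great film", "awful plot", "great cast", "boring and awful"]
labels = ["pos", "neg", "pos", "neg"]
always_pos = Model("always_pos", lambda t: "pos")
keyword = Model("keyword", lambda t: "neg" if ("awful" in t or "boring" in t) else "pos")
model, action = review_models(always_pos, [keyword], texts, labels, threshold=0.8)
print(model.name, action)
# prints keyword promote
```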

Conclusions

Rosaria: A few more questions to conclude. Do you have any advice for beginners who are now starting to learn about text mining?

Scott: To start, try to get a good grasp on the terminology. Even within the wider world of data science, text mining has its own specific idiosyncrasies. Also, spend some time getting as familiar as you can with the common pre-processing steps in a text mining process, since you will implement them over and over again. Perhaps begin with a simple sentiment analysis use case before moving on to other, more complex projects.

Dursun: Text mining has its own set of terms that may sound like a foreign language to a beginner, and hence, reading up on the foundational concepts and theories is needed. Then, since as the saying goes “nothing can replace hands-on experience,” one should start investigating existing models and then build one's own models. KNIME Analytics Platform is a great tool to quickly learn the process, test out workflow concepts, and apply them to your own data.

Rosaria: Any forecasted updates for the KNIME Textprocessing Extension?

Scott: We have a few things in the works, but we’ll keep the specifics of those under wraps until they’re ready for release - or at least ready for the next KNIME Labs extension update! But in the meantime we are very interested to hear from users of KNIME about what’s working for you and what isn’t in the Textprocessing extension - what are your pain points? What features do you find yourself wishing are available? Direct feedback from our users helps us prioritize future development, so come tell us your thoughts in the KNIME Forum. And who knows - you may find your username in a set of upcoming patch notes if we incorporate your suggestion!

Rosaria: Last question. What's the process to go about getting KNIME beginner certification?

Rosaria: This is the perfect question to end this panel discussion. The next certification exams take place on May 18. To prepare for the certification exams, you can either take an instructor-led course or one of our self-paced e-learning courses. The content is the same. The only difference is whether an instructor is looking over your shoulder.

And with this we conclude this panel discussion. Thanks to Dursun and Scott for their time and their answers.

This is a revised version of the original article that appeared on Medium.

-------------------

Some of the questions and answers reported in this interview originate from the webinar “Text Mining - Panel Discussion” run by KNIME on Sep 14, 2020.