AI providers are racing to develop the most advanced reasoning models, with companies like OpenAI and DeepSeek in competition to release models that excel in complex problem-solving, logical inference, and multi-step planning tasks.

OpenAI’s o1 and DeepSeek’s R1 are currently two of the most advanced examples of reasoning LLMs, and there’s a heated debate among data practitioners about which one is better.

We built a KNIME workflow to help answer that question!

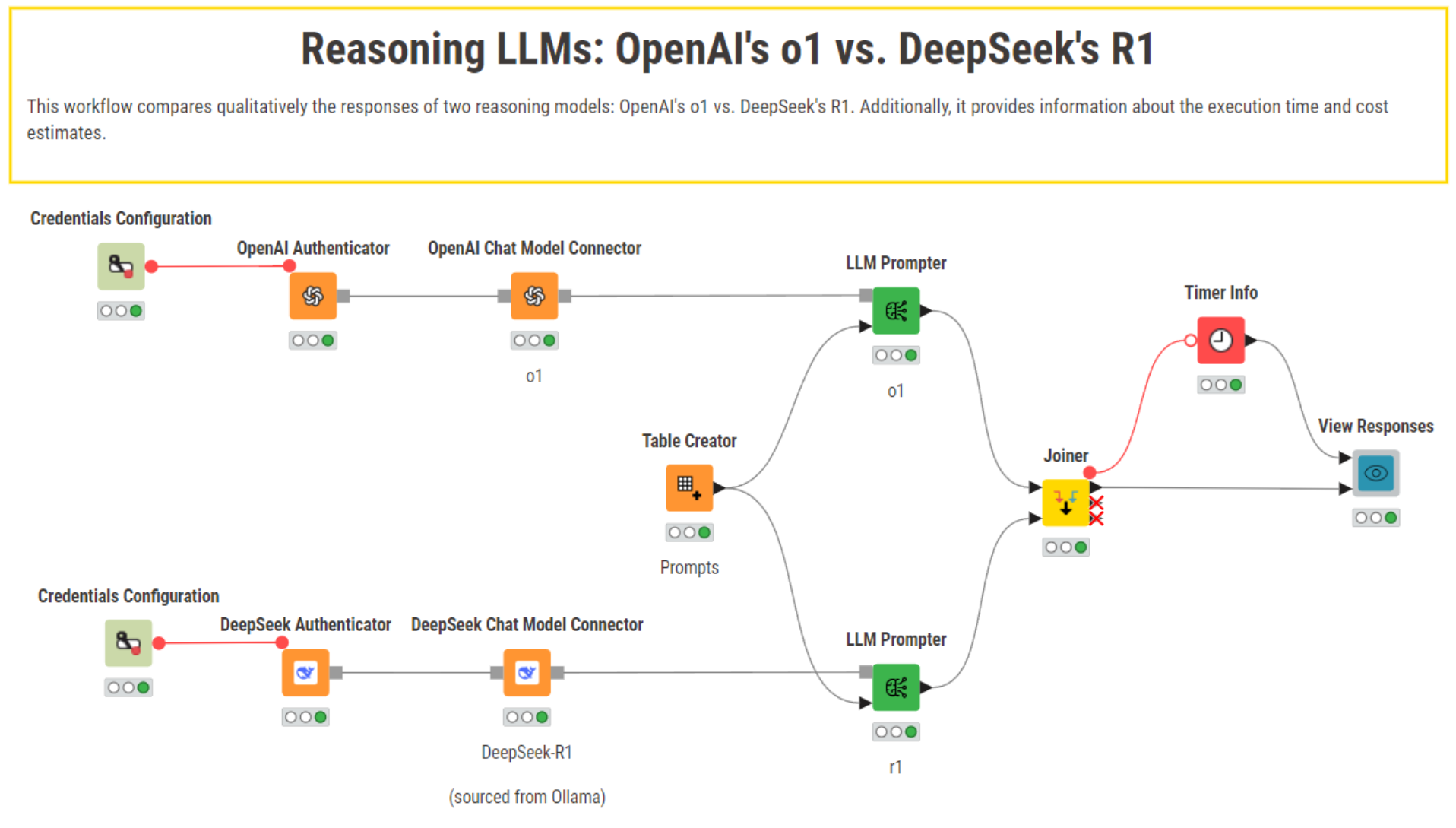

The workflow compares the responses of OpenAI’s o1 and DeepSeek-R1 to the same reasoning tasks against three criteria: response quality, cost and execution time, and transparency.

The comparison is mostly qualitative, with an eye on costs and execution time.

To connect to OpenAI’s o1 and DeepSeek’s R1 in KNIME, we follow the usual authenticate, connect, prompt approach, selecting the models from the OpenAI Chat Model Connector and the DeepSeek Chat Model Connector nodes, respectively.

Let’s set the stage for the experiment:

- Model contestants: OpenAI’s o1 vs. DeepSeek’s R1.

- Consumption mode: o1 via OpenAI’s API vs. DeepSeek’s R1 executed locally (7B-parameter model sourced from Ollama)**.

- The task: Three different prompts to test the models’ planning and logic skills:

  - “Explain step-by-by how you would organize a relocation from Hamburg to Berlin.”

  - “You are a financial analyst. Create a step-by-step plan to determine if a company is financially stable based on revenue, expenses, and debt.”

  - “A train travels 60 km in 1 hour, 120 km in 2 hours. How far does it travel in 3 hours? The speed remains constant.”

- Model hyperparameters: Temperature = 0, and the max tokens = 4000.

** At the time of writing, DeepSeek has temporarily suspended API service recharges, effectively hindering the usage of the API service.

You can download the KNIME workflow that compares OpenAI’s o1 with DeepSeek-R1 for free from the KNIME Community Hub.

OpenAI vs. DeepSeek: A comparison based on quality, cost, and transparency

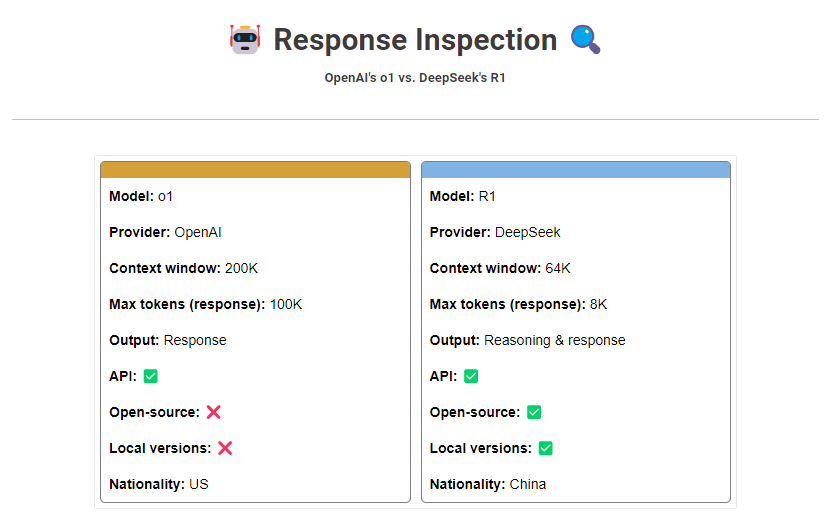

To make it easier to compare the models’ responses, our KNIME workflow features an interactive dashboard.

The dashboard shows each model’s technical characteristics, the responses, and a breakdown of costs and average execution time. Keep in mind that “open-source” in the view below specifically refers to the fitted model and its use – and not its training implementation or data.

Let’s take a look at how the models compare with each other on the specific tasks.

Quality of the responses

Both models deliver comprehensive, meaningful, and informative responses, presenting the output as a series of steps when explicitly requested in the prompt.

However, we can also observe relevant differences in the level of accuracy and detail, reflecting the underlying differences in the models’ parameter count and acquired knowledge.

OpenAI has not publicly disclosed the exact number of parameters in the o1 model; for context, previous models like GPT-3 had 175 billion parameters.

In our experiment, to streamline local execution, we picked a version of DeepSeek’s R1 that has only 7 billion parameters.

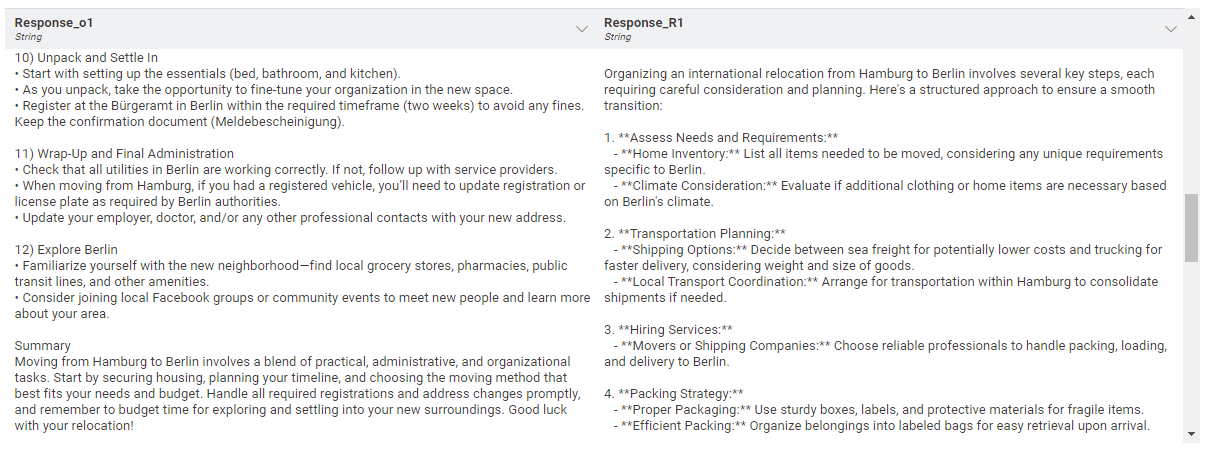

1st Task: Moving from Hamburg to Berlin

We asked both models to plan and organize how to relocate from Hamburg to Berlin:

“Explain step-by-by how you would organize a relocation from Hamburg to Berlin.”

This task tests the models’ ability to plan and organize an event that requires general understanding of the world, with the option to include country-specific directions.

OpenAI

OpenAI’s o1 excels in providing accurate and region-specific insights, particularly for Germany.

For example, it:

- Recommends checking the local Bürgeramt (city administration) guidelines, reminds us to complete the Anmeldung (registration), and suggests contingency plans for unexpected scenarios.

- Returns very practical tips to ensure a smooth relocation process, advising, for example, to pack an “essential box” and conduct final checks of the old home in Hamburg before handing over the keys.

OpenAI’s o1 structures responses clearly, using numbered steps and bullet points for action items. It concludes the response with a short summary.

DeepSeek

DeepSeek’s R1 produces short and meaningful responses but lacks country-specific details, making its answers feel more generic.

Interestingly, the model’s response focuses on aspects that are not covered in o1’s response, such as visa application, mental and emotional preparation, and career goals in the new location.

The output lacks a final summary but is well structured, with formatting similar to o1’s.

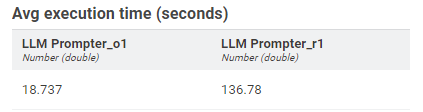

2nd Task: Financial auditing plan

We asked each model to draw up a financial auditing plan:

“You are a financial analyst. Create a step-by-step plan to determine if a company is financially stable based on revenue, expenses, and debt.”

This task requires domain-specific knowledge and understanding of accounting indicators that are relevant to evaluate a company’s financial stability.

OpenAI

OpenAI’s o1 once again provides a very rich and detailed response, listing not only the steps to a successful analysis but also mentioning specific accounting indicators (e.g., EBIT, EBITDA), liquidity ratio formulas, and measures to monitor working capital management (e.g., DSO, DIO).

The response also includes a final summary and is structured in a clear way.

DeepSeek

DeepSeek’s R1 provides clear and precise instructions, but they are notably less detailed.

The response lacks explicit references to accounting and financial indicators, suggesting it is tailored for users who already have expertise in the subject and merely need a checklist.

The output’s structure clearly indicates each step. The model does not deliver a final summary.

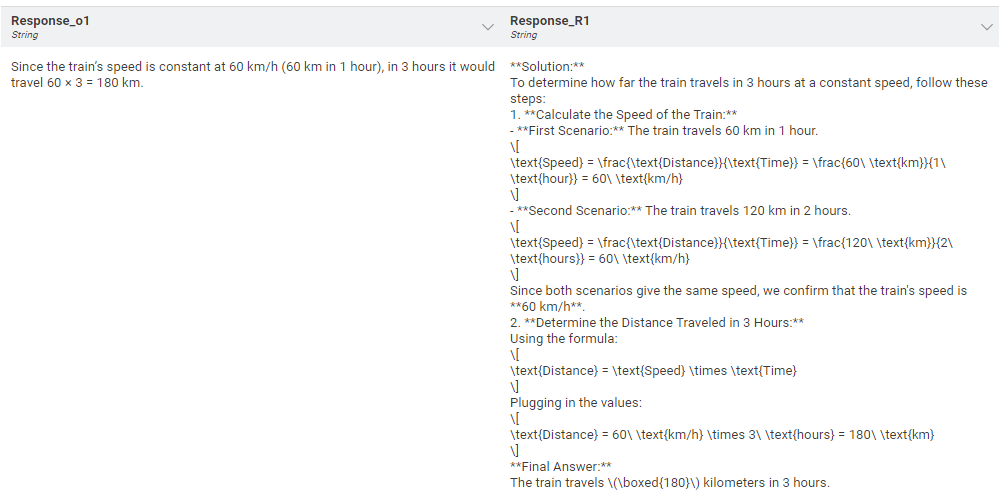

3rd Task: Simple mathematical problem

We asked both models to solve a simple math problem, which targets the distinctive ability of reasoning models compared to chat models:

“A train travels 60 km in 1 hour, 120 km in 2 hours. How far does it travel in 3 hours? The speed remains constant.”
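
For reference, the expected answer follows directly from the constant speed implied by the two data points in the prompt:

$$
v = \frac{60\ \text{km}}{1\ \text{h}} = \frac{120\ \text{km}}{2\ \text{h}} = 60\ \text{km/h}, \qquad d = v \cdot t = 60\ \text{km/h} \times 3\ \text{h} = 180\ \text{km}
$$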

OpenAI

o1 outputs a correct and straightforward response, highlighting the importance of the train’s constant speed to determine the result. The model also shows the computation clearly.

DeepSeek

DeepSeek’s R1 provides a correct but rather verbose answer. It first verifies the assumption of constant train speed provided in the prompt. While this can be valuable for more complex logical problems, it feels unnecessary for a simple computation. The model then presents the answer in LaTeX mathematical notation, which may hinder understanding for users with limited technical background.

Cost & execution time

OpenAI

OpenAI’s o1 is a proprietary model consumable via the dedicated API and priced at $60.00 per 1M output tokens, making it a relatively expensive option, especially for high-volume or continuous usage.

DeepSeek

DeepSeek’s R1, on the other hand, can be leveraged for free in its local and open-source versions.

For large-volume queries, the model can be consumed programmatically via the API for $2.19 per 1M output tokens, offering a significantly more cost-effective alternative and potentially lowering the barrier to AI adoption for SMEs and emerging economies.

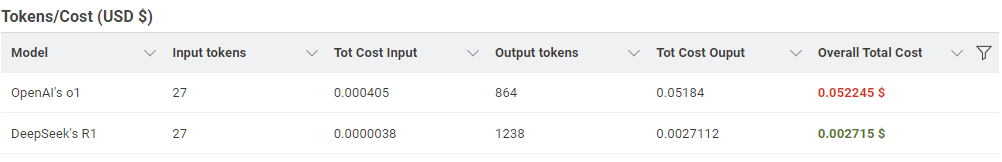

Approximate cost of querying both models

For our experiment, we estimated the approximate cost of querying both models via their corresponding APIs. To do that, we:

- Tokenized each prompt-response pair using Stanford’s PTBTokenizer in the Strings to Document node, and extracted the number of input/output terms with the Document Data Extractor node.

- Multiplied the token counts by the per-token input and output prices from OpenAI’s and DeepSeek’s API pricing, and summed the results.

For simplicity, we didn’t account for cached inputs, which may reduce the actual cost.

Taking as an example the prompt-response pair used in the second task to put together a financial auditing plan, we can see that for a prompt of roughly 27 tokens, OpenAI’s o1 returned a response of 864 tokens vs. 1238 for DeepSeek’s R1.

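Despite the larger total number of required tokens, using DeepSeek’s API is far cheaper than OpenAI’s. At the output prices quoted above, the response costs roughly $0.003 with R1 vs. $0.05 with o1, which is about 95% less, or a difference of approximately 180% relative to the average of the two costs.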
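
As a rough sketch of this estimate outside KNIME, the calculation could look like the following in Python. The token counts and output prices come from the text above; the input prices ($15.00 and $0.55 per 1M tokens) are assumptions not stated in this article, and the function name `query_cost` is purely illustrative.

```python
def query_cost(input_tokens, output_tokens, input_price_per_m, output_price_per_m):
    """Return the estimated USD cost of one prompt-response pair.

    Prices are expressed in USD per 1M tokens. Cached inputs are ignored.
    """
    total = input_tokens * input_price_per_m + output_tokens * output_price_per_m
    return total / 1_000_000


# Token counts reported for the second task (financial auditing plan).
PROMPT_TOKENS = 27
O1_OUTPUT_TOKENS = 864
R1_OUTPUT_TOKENS = 1238

# Output prices (60.00, 2.19) are quoted in the article.
# Input prices (15.00, 0.55) are ASSUMED for illustration.
o1_cost = query_cost(PROMPT_TOKENS, O1_OUTPUT_TOKENS, 15.00, 60.00)
r1_cost = query_cost(PROMPT_TOKENS, R1_OUTPUT_TOKENS, 0.55, 2.19)

# Two ways to express the gap between the two costs.
savings = 1 - r1_cost / o1_cost
symmetric_diff = (o1_cost - r1_cost) / ((o1_cost + r1_cost) / 2)

print(f"o1: ${o1_cost:.4f}, R1: ${r1_cost:.4f}")
print(f"R1 saves {savings:.0%}; symmetric difference: {symmetric_diff:.0%}")
```

Under these assumptions, the R1 query costs about 5% of the o1 query. The output tokens dominate the total, so the assumed input prices barely affect the result.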

Approximate execution time in both models

In terms of execution time, leveraging a model via the API is expected to be significantly faster than executing the model locally on a personal computer**, since local processing speed depends heavily on the available hardware.

In our experiment, o1’s average execution time via the OpenAI API was almost 152% faster than executing DeepSeek’s R1 locally.

It will be interesting to repeat the same experiment using the DeepSeek API once it’s fully operational again.

** We ran the experiment on a Lenovo ThinkPad P14s Gen 4 with an AMD Ryzen 7 PRO 7840U w/ Radeon 780M processor.
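
An average execution time of this kind can be measured with a simple wall-clock timer. The sketch below is a minimal Python illustration, not the KNIME workflow’s implementation: `average_execution_time` and `fake_model` are hypothetical names, and `fake_model` merely stands in for a real API or local model call.

```python
import time
from statistics import mean


def average_execution_time(call_model, prompts):
    """Return the mean wall-clock seconds `call_model` takes per prompt."""
    durations = []
    for prompt in prompts:
        start = time.perf_counter()
        call_model(prompt)
        durations.append(time.perf_counter() - start)
    return mean(durations)


def fake_model(prompt):
    # Hypothetical stand-in for a real model call; sleeps briefly instead.
    time.sleep(0.01)
    return f"response to: {prompt}"


avg = average_execution_time(fake_model, ["task 1", "task 2", "task 3"])
print(f"average execution time: {avg:.3f} s")
```

Running the same prompts through both models with a helper like this gives two averages that can be compared directly.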

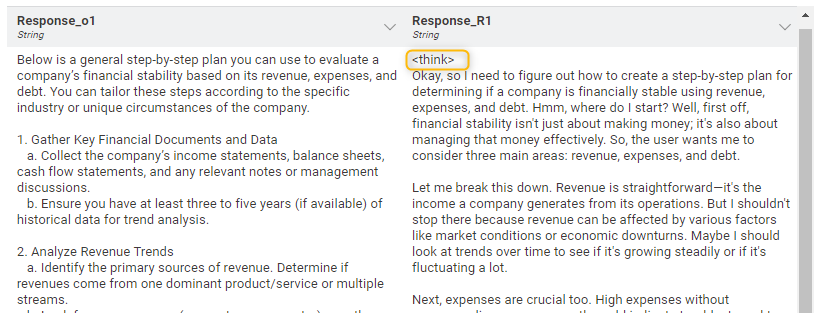

Transparency

As LLMs with billions of parameters and a complex architecture, both models effectively behave as black boxes, making it very difficult to interpret or explain their decision-making processes.

Yet, the nature of o1 (closed-source) vs. R1 (open-source) implies that their providers could opt for different approaches when it comes to disclosing the models’ reasoning process, parameter number, architecture, training and fine-tuning procedures.

OpenAI

OpenAI’s o1 currently does not provide any information about its underlying reasoning process, limiting transparency and making it difficult to decipher how conclusions are reached.

At the same time, there are no official publications released by researchers at OpenAI detailing the work behind o1. The sole exception is the publication of the model performance on different evaluation benchmarks.

DeepSeek

Researchers at DeepSeek have shared the details of their work in an open paper.

R1 articulates its reasoning, providing insight into the thought process behind its responses. In the model output, the reasoning process is typically delimited by opening and closing <think> tags. This capability currently sets DeepSeek-R1 apart from other advanced AI models, offering greater transparency, which can be valuable for driving trust in AI decision-making.
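
To work with the reasoning and the final answer separately, the delimited block can be split off from the rest of the output. The following Python sketch assumes the reasoning is wrapped in `<think>` and `</think>`; the function name `split_reasoning` is hypothetical, and the sample string is made up for illustration rather than taken from an actual R1 response.

```python
import re

# Non-greedy match across newlines for the first <think>...</think> block.
THINK_PATTERN = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_reasoning(output):
    """Split raw model output into a (reasoning, answer) tuple.

    If no <think> block is found, reasoning is an empty string.
    """
    match = THINK_PATTERN.search(output)
    if match is None:
        return "", output.strip()
    reasoning = match.group(1).strip()
    answer = (output[:match.start()] + output[match.end():]).strip()
    return reasoning, answer


# Made-up sample output, for illustration only.
sample = (
    "<think>Speed is 60 km/h, so 3 hours gives 180 km.</think>\n"
    "The train travels 180 km."
)
reasoning, answer = split_reasoning(sample)
print("Reasoning:", reasoning)
print("Answer:", answer)
```

Keeping the two parts separate makes it possible to show only the answer to end users while still logging the reasoning for review.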

More considerations: LLM vulnerabilities, capabilities & reputational concerns

In addition to the responses shown above, a more thorough comparison should also take into account other aspects, ranging from the technical capabilities offered by the AI providers to model vulnerabilities and data privacy risks.

For example, we could use a framework such as Giskard to automatically scan the LLMs for key risks and vulnerabilities, such as hallucinations, biases, harmful content, the perpetuation of stereotypes, the disclosure of sensitive information, or prompt injection.

From a tech and capability standpoint, the comparison could be expanded to encompass DeepSeek and OpenAI’s ability to address different users’ needs with their model offering and services. For example:

- Do OpenAI and DeepSeek support models capable of generating embeddings, or models with multimodal capabilities for the generation of images, audio, or video?

- Do both providers allow the creation of and interaction with customized GPTs on the browser?

- Are their API services equally stable and reliable for deployment? Do they offer pricing tiers for different business needs? Or a fine-tuning API for easy customization?

Lastly, when comparing OpenAI vs. DeepSeek, we should also consider legal and reputational concerns. Both providers raise important questions about data sourcing transparency, content moderation and suppression, bias, and alignment with government censorship policies.

Your use case, your model choice—with KNIME at the helm

Our simple experiment showed that OpenAI’s o1 provides more comprehensive and compelling responses than DeepSeek’s R1, in a fraction of the execution time. For large volumes of queries and continuous usage, though, the costs of using OpenAI’s o1 can rise quickly.

At the same time, due to DeepSeek’s current API restrictions, we could only test R1 locally, in a smaller and less proficient version. R1’s responses, while more verbose and less detailed, offer a glimpse into the model’s reasoning process. Additionally, R1 consumption via the API holds the promise of a very affordable alternative (or entirely free for local execution) for businesses that wish to leverage reasoning models in their data pipelines.

KNIME provides you with the ability to adapt to evolving technologies and connect to the AI of your choice, whether that’s OpenAI, DeepSeek, Hugging Face, or local open-source models.

Download KNIME to unlock new opportunities with the latest advancements in AI.