Welcome to the final episode of our series of articles about Guided Labeling! Today: Can active learning and weak supervision be combined?

During this series we have discussed and compared different labeling techniques - active learning and weak supervision. We have also examined various sampling strategies, for example via exploration/exploitation approaches and random strategies.

The question we wanted to answer was:

- Which technique should we use - active learning or weak supervision - to train a supervised machine learning model from unlabeled data?

Considerations:

- It depends on the availability of domain experts and weak label sources. The harder it is to learn a decent decision boundary for your classification problem, the more labels you're likely to need - particularly when your model is also complex (e.g. deep learning). This makes manual labeling less and less feasible, even when using active learning. So unless you have years of patience and a large budget, weak supervision could be the only way.

- Setting up labeling functions or weak label sources can also be quite tricky, especially if your data does not offer an easy way to quickly label large subsets of samples with simple rules.

- In most cases it makes sense to keep the domain expert in control after the labeling functions have been provided. After training, issues can be located, the labeling functions improved, and the weak supervision model retrained in an iterative process.

So: Checking feasibility before applying active learning or weak supervision is great, but why do we need to select one over the other?

- Why not use both techniques?

Guided Labeling: Mixing and Matching the Two Techniques

Active learning and weak supervision are not mutually exclusive. Active learning is all about the human-in-the-loop approach and the sampling within it that selects more training rows to label. Weak supervision, on the other hand, is a linear (non-iterative) model training process that takes multiple inputs of varying quality. There are actually a number of ways to combine the two techniques efficiently. Ultimately, all of these combinations can be implemented in Guided Analytics applications and made available to domain experts via a simple web browser. For this reason we called the overall approach Guided Labeling.

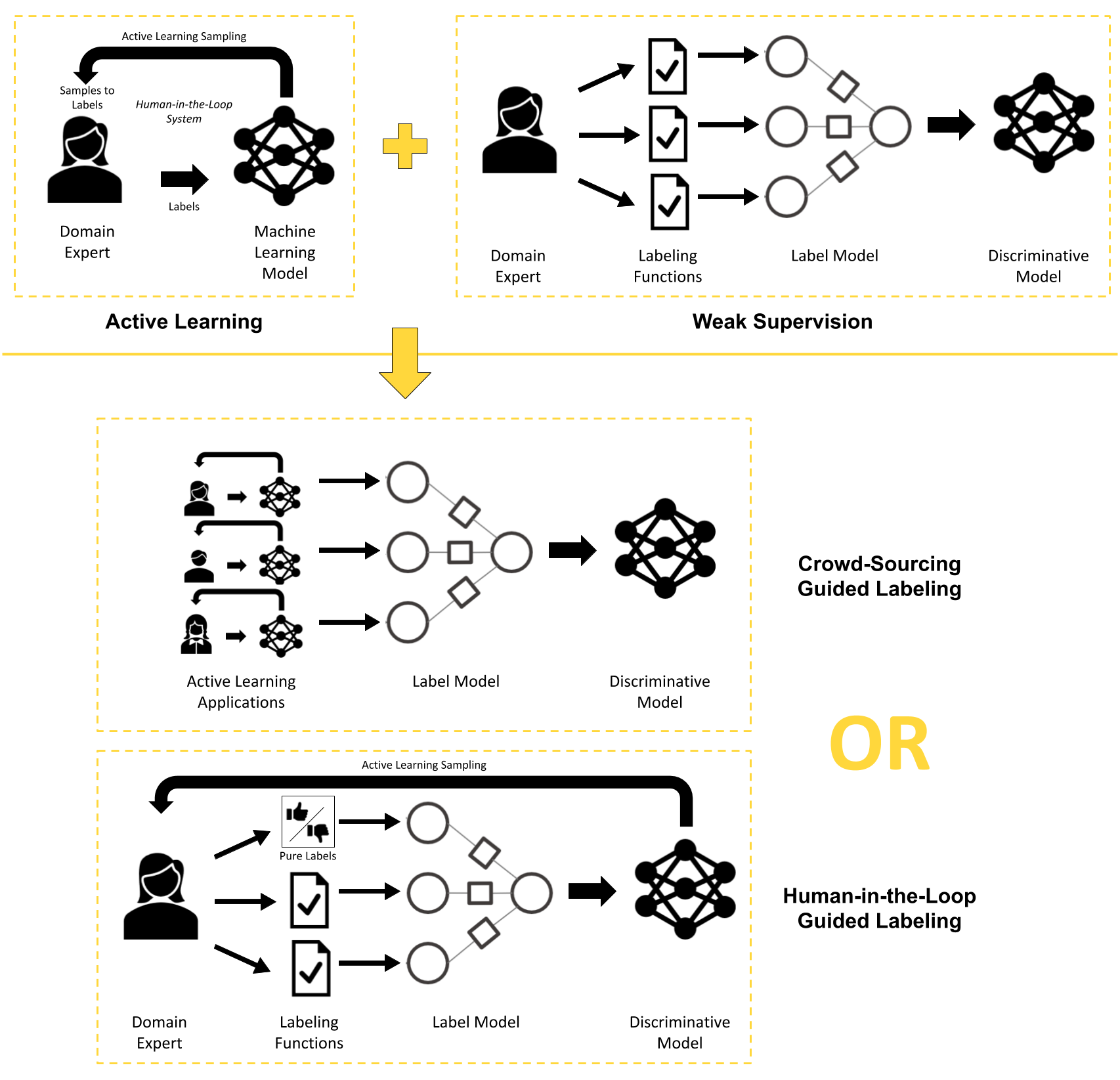

We will explore two Guided Labeling examples (Fig. 1) which cover the most obvious scenarios where active learning and weak supervision can be combined.

- The first example: “Crowd-Sourcing Guided Labeling”.

- The second example: “Human-in-the-Loop Guided Labeling”.

Figure 1: The two Guided Labeling examples that result from combining active learning with weak supervision: “Crowd-Sourcing Guided Labeling” and “Human-in-the-Loop Guided Labeling”.

Crowd-sourcing guided labeling: humans as weak label sources

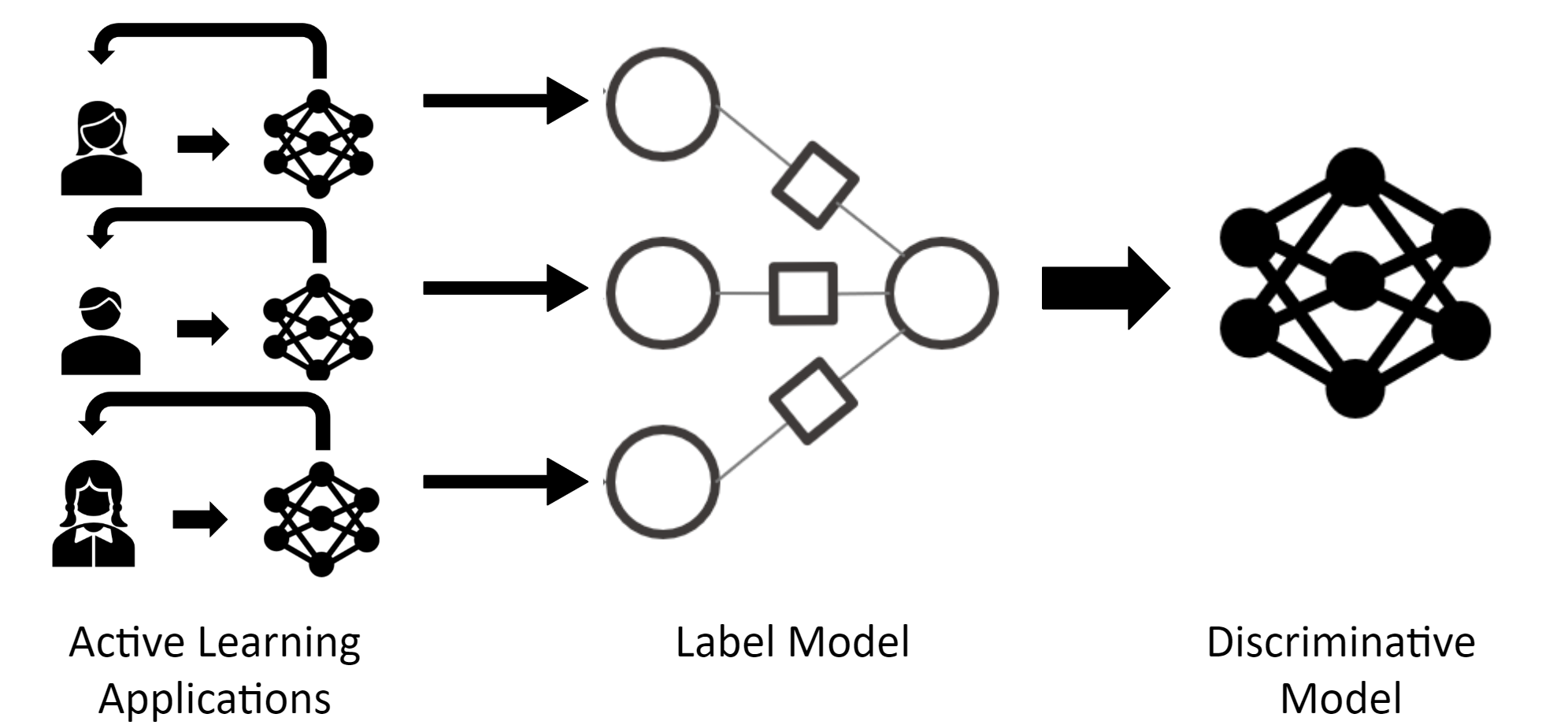

Sometimes, manual labeling by a human is the only feasible label source. In these cases it’s also likely that the labeling task will be quite difficult and that humans will make mistakes. This is especially true when labels are crowd-sourced, that is, when generic users are asked to apply labels rather than a trusted in-house domain expert. In these cases you could consider human labeling a weak label source. Several active learning applications could be deployed simultaneously to a number of users, each acting as a domain expert. These users would label more and more samples using active learning sampling. All provided labels are inserted into the same database, which is queried by another system that aggregates them in a weak label sources matrix. This other system can then use the matrix to train the final model with weak supervision (Fig. 2).

Figure 2: Crowd-Sourcing Guided Labeling example showing how all labels from many different active learning applications can be aggregated in a single weak supervision model. This is extremely useful when the domain expert labeling work could be inaccurate and should be automatically compared to the work of another domain expert before providing it to the model.
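To make the aggregation step more concrete, here is a minimal Python sketch of how crowd-sourced labels could be pivoted into a weak label sources matrix with one column per annotator. The record layout, the annotator and document names, and the `majority_vote` helper are all assumptions made for illustration. In particular, majority voting is only a simple stand-in for the weak supervision training described above, not the method used in the workflow.

```python
from collections import Counter

ABSTAIN = None  # marks "this annotator did not label this sample"

def build_weak_label_matrix(records, sample_ids, annotators):
    """Pivot (annotator, sample_id, label) records into a samples x annotators matrix."""
    lookup = {(a, s): label for a, s, label in records}
    return [[lookup.get((a, s), ABSTAIN) for a in annotators] for s in sample_ids]

def majority_vote(row):
    """Return the most frequent non-abstain label in a row, or ABSTAIN if nobody voted.

    Ties go to the label that appears first in the row.
    """
    votes = [v for v in row if v is not ABSTAIN]
    if not votes:
        return ABSTAIN
    return Counter(votes).most_common(1)[0][0]

# Hypothetical rows read from the shared label database.
records = [
    ("ann_1", "doc_a", "good"),
    ("ann_2", "doc_a", "good"),
    ("ann_3", "doc_a", "bad"),
    ("ann_1", "doc_b", "bad"),
    ("ann_3", "doc_b", "bad"),
    ("ann_2", "doc_c", "good"),
]
sample_ids = ["doc_a", "doc_b", "doc_c", "doc_d"]
annotators = ["ann_1", "ann_2", "ann_3"]

matrix = build_weak_label_matrix(records, sample_ids, annotators)
aggregated = {s: majority_vote(row) for s, row in zip(sample_ids, matrix)}

for sample_id, row in zip(sample_ids, matrix):
    print(sample_id, row, "->", aggregated[sample_id])
```

Each annotator only fills in the rows that active learning sampling showed them, so most cells stay empty. A sample nobody labeled (here `doc_d`) simply receives no aggregated label.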

Human-in-the-loop guided labeling: single interactive web-based application

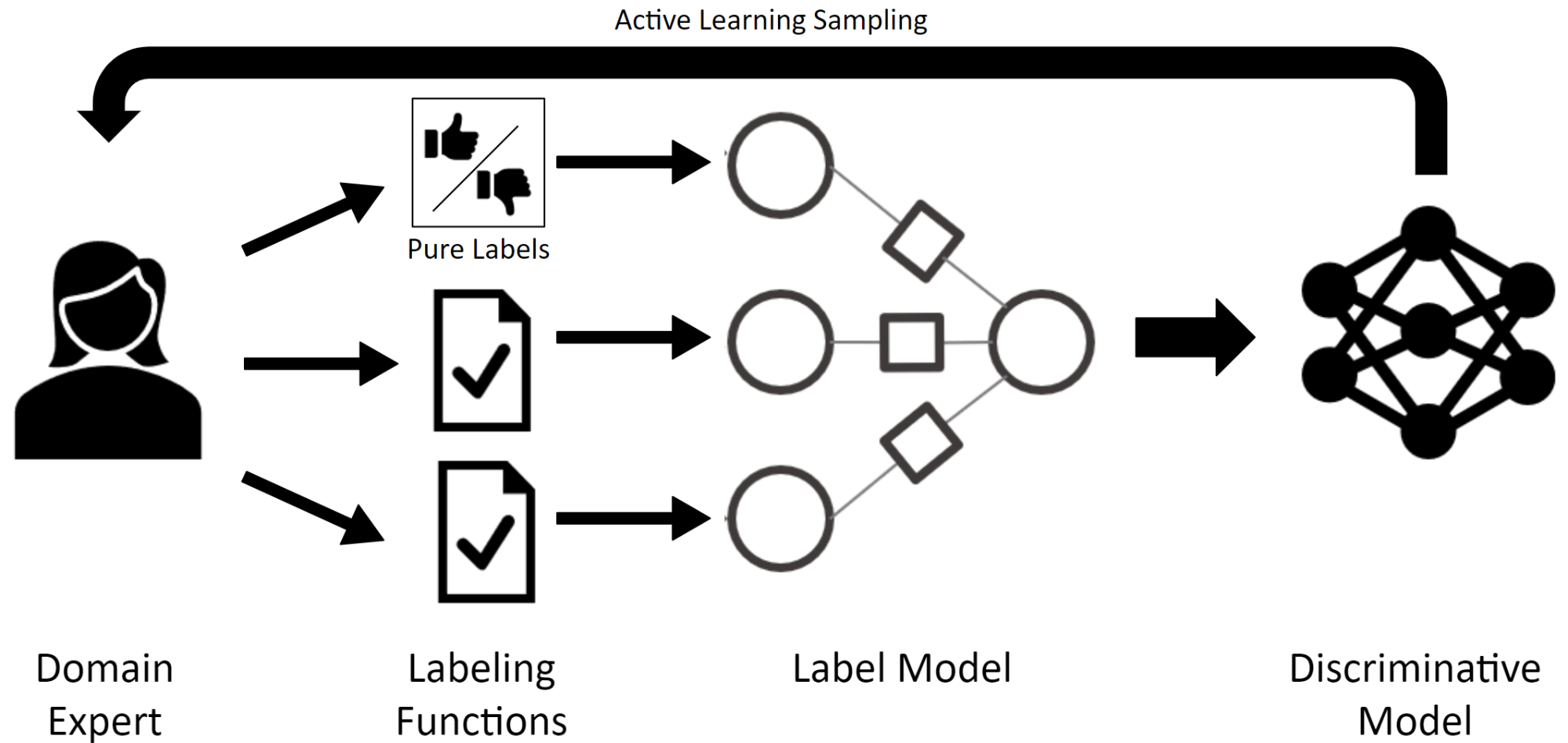

We have already seen how a domain expert can use labeling functions to provide labels. In this second Guided Labeling example we consider the case where the domain expert wants to:

1. Provide labeling functions

2. Train a model via weak supervision

3. Inspect the predictions

4. Edit the labeling functions

5. Manually apply labels where it’s critical

6. Retrain the model

7. Repeat from step 3

This user journey is clearly a human-in-the-loop application, as the user repeats tasks, alternating her actions with those of the system. This setup offers two great opportunities to train a supervised model from an unlabeled dataset:

- Huge quantities of labels for the evident samples via labeling functions;

- Good quality of labels for the crucial samples via manual labeling.

Achieving both of the above is possible by combining weak supervision for the labeling functions and active learning for manual labeling. The manual labeling can be digested by the weak supervision training by simply considering it as another weak label source (Fig. 3).

Figure 3: Human-in-the-loop Guided Labeling example showing how the domain expert can provide labeling functions and manual labels in a single human-in-the-loop application. The weak supervision model is retrained each time the labeling functions are improved or more manual labels are provided where needed using active learning sampling.
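Treating manual labels as another weak label source amounts to appending one more column to the weak label sources matrix. The sketch below illustrates this under assumed names: `add_manual_source`, the two labeling function columns and the document IDs are hypothetical and not taken from the workflow.

```python
ABSTAIN = None  # marks "this source gave no label for this sample"

def add_manual_source(weak_matrix, sample_ids, manual_labels):
    """Return a new matrix with one extra column holding the manual labels.

    Samples the expert has not labeled get ABSTAIN in the new column.
    """
    return [
        row + [manual_labels.get(sample_id, ABSTAIN)]
        for sample_id, row in zip(sample_ids, weak_matrix)
    ]

sample_ids = ["doc_a", "doc_b", "doc_c"]

# Columns: outputs of two hypothetical labeling functions.
weak_matrix = [
    ["good", ABSTAIN],
    [ABSTAIN, ABSTAIN],
    ["good", "bad"],
]

# Labels the expert supplied for the samples proposed by active learning sampling.
manual_labels = {"doc_b": "bad", "doc_c": "bad"}

extended = add_manual_source(weak_matrix, sample_ids, manual_labels)

# Fraction of samples each source actually labeled (its coverage).
coverage = [
    sum(value is not ABSTAIN for value in column) / len(extended)
    for column in zip(*extended)
]

for sample_id, row in zip(sample_ids, extended):
    print(sample_id, row)
print("coverage per source:", coverage)
```

In this toy data the manual column covers `doc_b`, which no labeling function reached, and `doc_c`, where the two labeling functions disagree. These are the kinds of crucial samples where manual labeling adds the most.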

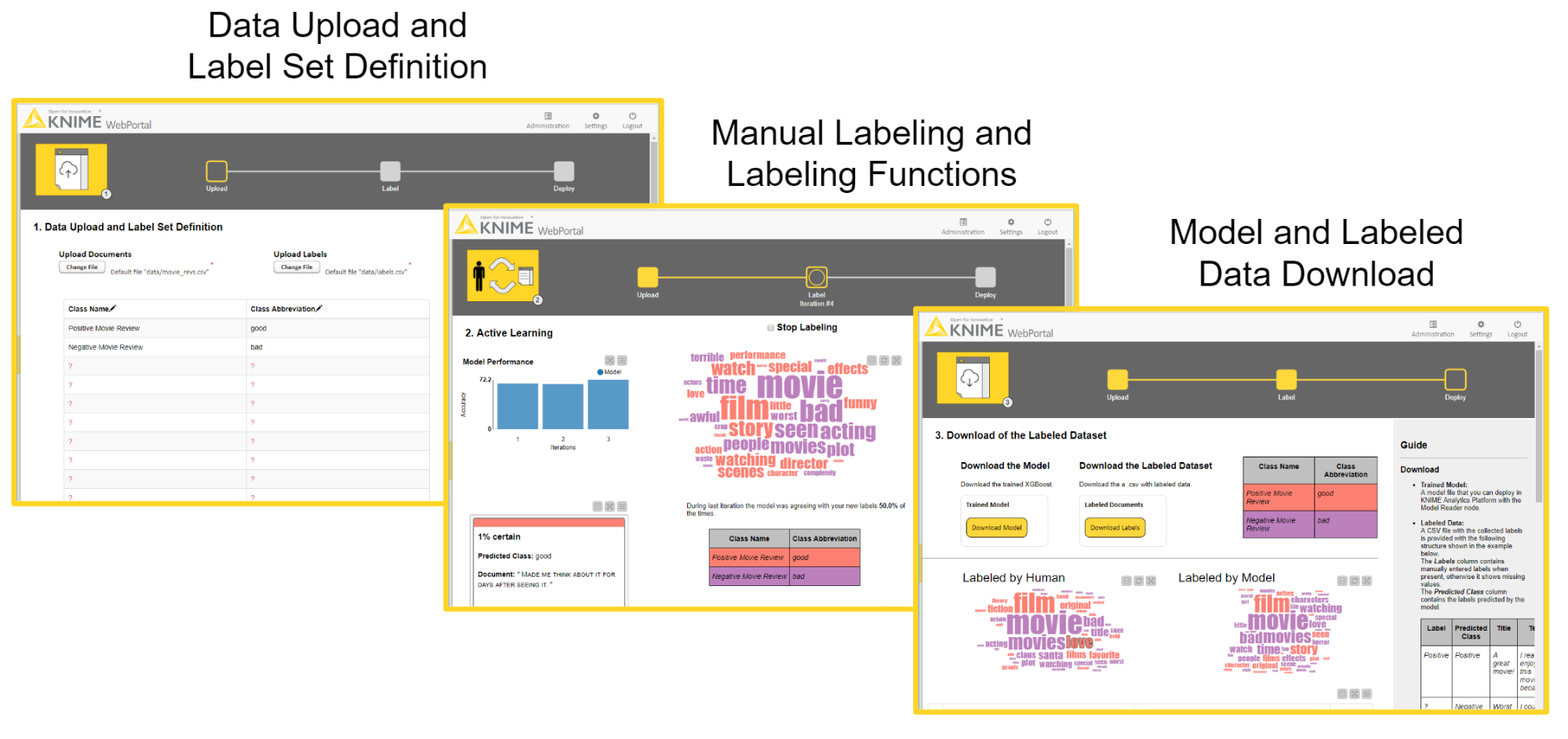

Human-in-the-loop guided labeling for document classification

Via Guided Analytics we developed an interactive web-based application which covers the human-in-the-loop Guided Labeling example. The domain expert can interact with a sequence of interactive views (Fig. 4) to:

1. Upload documents

2. Define the possible labels

3. Provide initial labeling functions (e.g. “if [string] is in document apply [label]”)

4. Train an initial weak supervision model

5. Provide manual labels from Exploration-vs-Exploitation active learning sampling

6. Edit labeling functions

7. Repeat from step 4 or end the application.

Figure 4: The Guided Analytics application is made of a sequence of interactive views available via the KNIME WebPortal. The domain expert becomes part of the human-in-the-loop with Guided Labeling without being overwhelmed by the complexity of the KNIME workflow behind it.
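A labeling function of the form “if [string] is in document apply [label]” can be sketched in a few lines of Python. The keywords, labels and example documents below are made up for illustration, and the case-insensitive matching is our assumption. In the application itself the domain expert enters such rules through the interactive views rather than in code.

```python
ABSTAIN = None  # returned when a rule does not apply to a document

def make_keyword_rule(keyword, label):
    """Build a labeling function: if `keyword` is in the document, apply `label`."""
    def rule(document):
        # Case-insensitive substring match (an assumption of this sketch).
        return label if keyword.lower() in document.lower() else ABSTAIN
    return rule

# Hypothetical rules for a "good" vs "bad" sentiment task.
rules = [
    make_keyword_rule("wonderful", "good"),
    make_keyword_rule("boring", "bad"),
    make_keyword_rule("waste of time", "bad"),
]

documents = [
    "A wonderful film with a great cast.",
    "Boring plot, a complete waste of time.",
    "I watched it on a plane.",
]

# One row per document, one column per labeling function.
weak_matrix = [[rule(doc) for rule in rules] for doc in documents]

for doc, row in zip(documents, weak_matrix):
    print(row, "<-", doc)
```

The third document matches no rule, so every labeling function abstains on it. Documents like this are natural candidates for manual labeling.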

- An example of this kind of application is available on the KNIME Hub as a workflow.

Download the free blueprint and customize it without any coding using KNIME Analytics Platform. To make the application accessible to your domain expert, you have to deploy it to KNIME Server. Once it is on KNIME Server, the application is available in any web browser via the KNIME WebPortal.

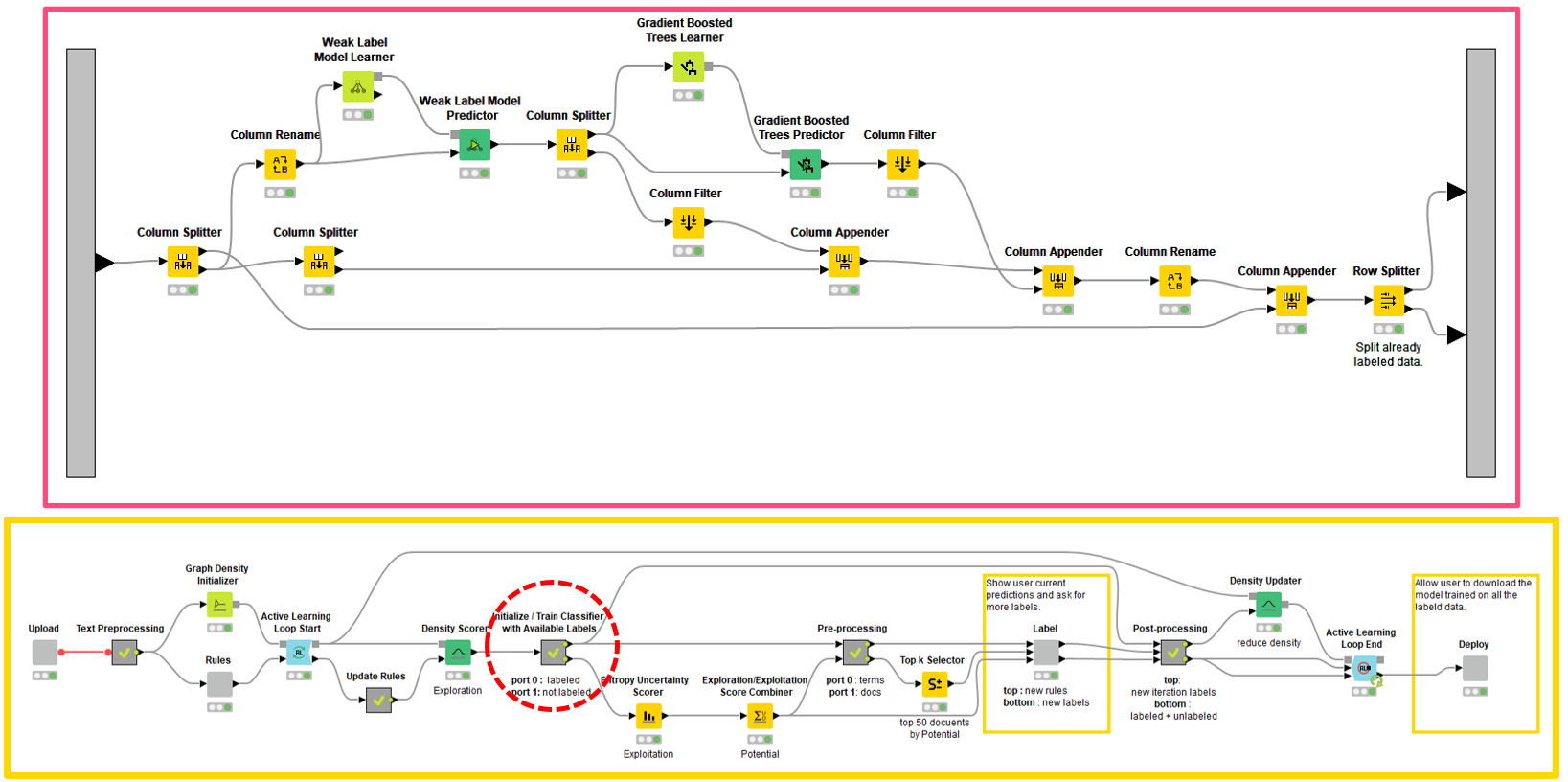

Figure 5: The workflow available on the KNIME Hub. In the yellow box, you can see the entire workflow consisting of components, the active learning loop and the Exploration/Exploitation score combiner. The part of the workflow framed in red shows the inside of the metanode (circled by a red-dotted line) where weak supervision is trained.

The workflow behind the application (Fig. 5) includes:

- KNIME components implementing each of the views

- Weak Label Learner and Gradient Boosted Tree Learner for the Weak Supervision training

- Active Learning Loop to repeat the training process

- A Labeling View

- Tag Clouds, which make the experience more interactive by showing the frequent terms in the documents

- Network Viewers, which show the correlation among labeling functions and are made available to the user for inspection (Fig. 6).

Figure 6: An animation showing the kind of interactivity available while running Guided Labeling for Document Classification on sentiment labels (“good” vs “bad”) of IMDb movie reviews via the KNIME WebPortal: labeling with buttons, browsing documents via tag clouds, editing labeling functions and inspecting their correlations via a network view. This setup works well with document classification, but many other visualizations can be combined depending on your needs.
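The active learning loop in the workflow relies on an Exploration/Exploitation score combiner to decide which documents to show the expert next. As a rough illustration only, the Python sketch below blends an exploration score with a model uncertainty score using a single weight. The linear blend, the use of normalized entropy as the uncertainty measure, the `exploitation_weight` parameter and all numbers are assumptions of this sketch, not a description of how the KNIME node is implemented.

```python
import math

def entropy_uncertainty(probabilities):
    """Normalized Shannon entropy of class probabilities (0 = certain, 1 = most uncertain)."""
    n = len(probabilities)
    if n < 2:
        return 0.0
    h = -sum(p * math.log(p) for p in probabilities if p > 0)
    return h / math.log(n)

def combined_score(exploration, uncertainty, exploitation_weight):
    """Linear blend: weight 0 ranks purely by exploration, weight 1 purely by uncertainty."""
    return (1 - exploitation_weight) * exploration + exploitation_weight * uncertainty

def rank(candidates, exploitation_weight):
    """Return document IDs sorted from most to least worth labeling next."""
    scores = {
        doc: combined_score(
            c["exploration"], entropy_uncertainty(c["probs"]), exploitation_weight
        )
        for doc, c in candidates.items()
    }
    return sorted(scores, key=scores.get, reverse=True)

# Hypothetical unlabeled documents with an exploration score (e.g. from label
# density) and the current model's predicted class probabilities.
candidates = {
    "doc_a": {"exploration": 0.9, "probs": [0.95, 0.05]},
    "doc_b": {"exploration": 0.2, "probs": [0.5, 0.5]},
    "doc_c": {"exploration": 0.5, "probs": [0.7, 0.3]},
}

early = rank(candidates, exploitation_weight=0.1)  # mostly exploring
late = rank(candidates, exploitation_weight=0.9)   # mostly exploiting

print("early iterations:", early)
print("late iterations: ", late)
```

With a low weight, the ranking favors `doc_a`, which lies in an unexplored region. With a high weight, it favors `doc_b`, where the model is least certain.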

We have reached the end of the Guided Labeling KNIME Blog Series, but you can still find more content about this topic.

Resources

- Watch our webinar on YouTube, for example, to see these workflows used in a live demo.

- Find more Guided Labeling, Active Learning and Weak Supervision examples on the KNIME Hub.

What’s next?

There are other strategies that could be added to the Guided Labeling approach (semi-supervised learning, transfer learning, more active learning strategies, more use cases, etc.).

If you have a proposal, feel free to let us know via this KNIME Forum thread!

The Guided Labeling KNIME Blog Series

By Paolo Tamagnini and Adrian Nembach (KNIME)

- Episode 1: An Introduction to Active Learning

- Episode 2: Label Density

- Episode 3: Model Uncertainty

- Episode 4: From Exploration to Exploitation

- Episode 5: Blending Knowledge with Weak Supervision

- Episode 6: Comparing Active Learning with Weak Supervision

- Episode 7: Weak Supervision Deployed via Guided Analytics

- Episode 8: Combining Active Learning with Weak Supervision